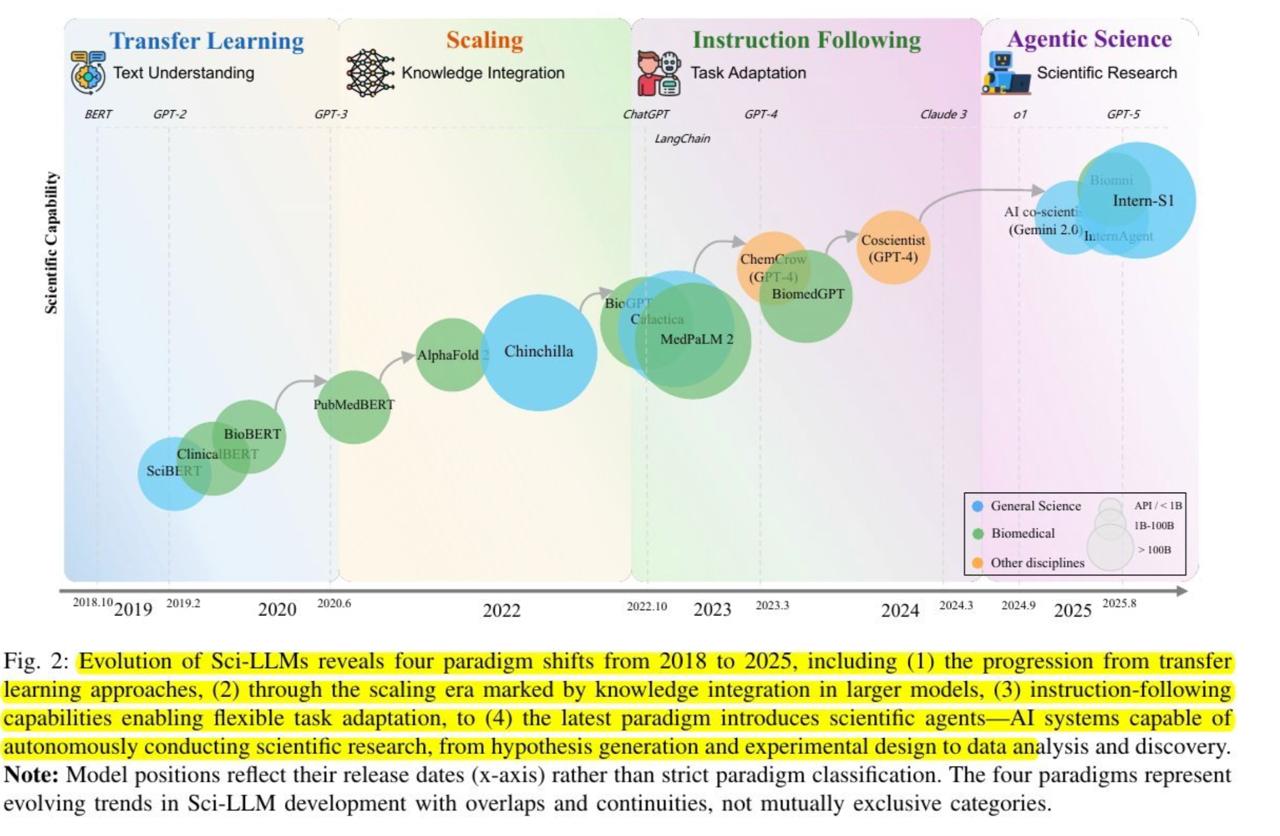

A sweeping 94-page survey published in August 2025 offers one of the most comprehensive roadmaps yet for the next generation of scientific AI. Titled “A Survey of Scientific Large Language Models: From Data Foundations to Agent Frontiers,” the paper analyzes 270 scientific datasets and 190 benchmarks to explain why ordinary LLMs fail at real science, and how specialized scientific LLMs (SciLLMs) are rapidly closing the gap through richer multimodal data and closed-loop autonomous agents.

Why General-Purpose LLMs Fall Short in Science

Science isn’t just text. It is raw spectra, noisy sensor readings, LaTeX equations, executable code, tabular data with uncertainty bounds, and microscope images, all intertwined. Standard LLMs trained mostly on internet prose simply weren’t built for this chaos. They confidently hallucinate reaction mechanisms, ignore error propagation in measurements, and treat a chemical formula as decorative ASCII rather than a computable graph.
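To make "error propagation" concrete, here is a minimal sketch of the standard first-order rule for a quotient of two independent measurements, where relative uncertainties add in quadrature. The function name and the sample numbers are illustrative assumptions and do not come from the survey.

```python
import math


def propagate_quotient(a, sigma_a, b, sigma_b):
    """Return (a / b, uncertainty), assuming independent errors that are small
    relative to the measured values (first-order propagation)."""
    value = a / b
    # Relative uncertainties of independent inputs combine in quadrature.
    relative = math.sqrt((sigma_a / a) ** 2 + (sigma_b / b) ** 2)
    return value, abs(value) * relative


# Hypothetical measurement: mass 12.0 ± 0.1 g, volume 4.0 ± 0.1 cm^3.
density, sigma_density = propagate_quotient(12.0, 0.1, 4.0, 0.1)
print(f"density = {density:.2f} ± {sigma_density:.2f} g/cm^3")
```

A model that reports the density as a bare number has dropped the second half of the result, which is the kind of omission the paragraph above describes.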

The result: even flagship models like GPT-4o routinely fail PhD-level science questions and produce non-reproducible “discoveries.”

A New Foundation: Unified Taxonomy + Multi-Layer Knowledge

The survey introduces two powerful organizing principles:

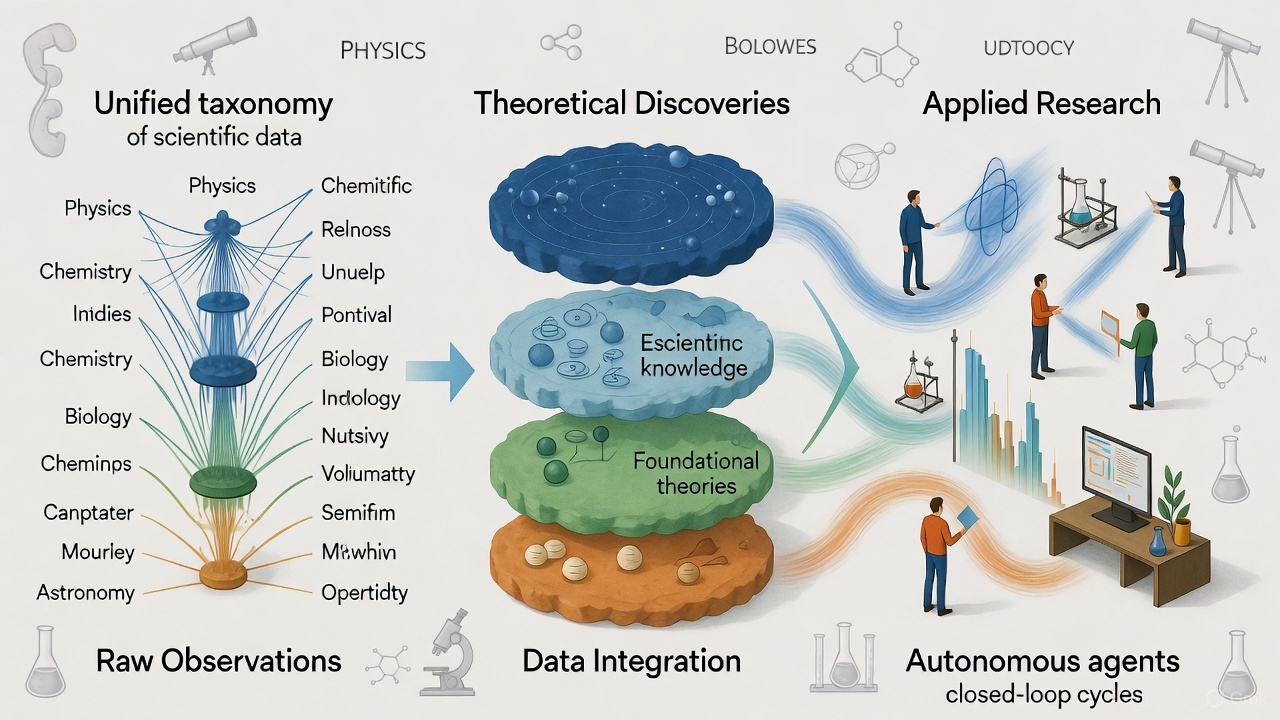

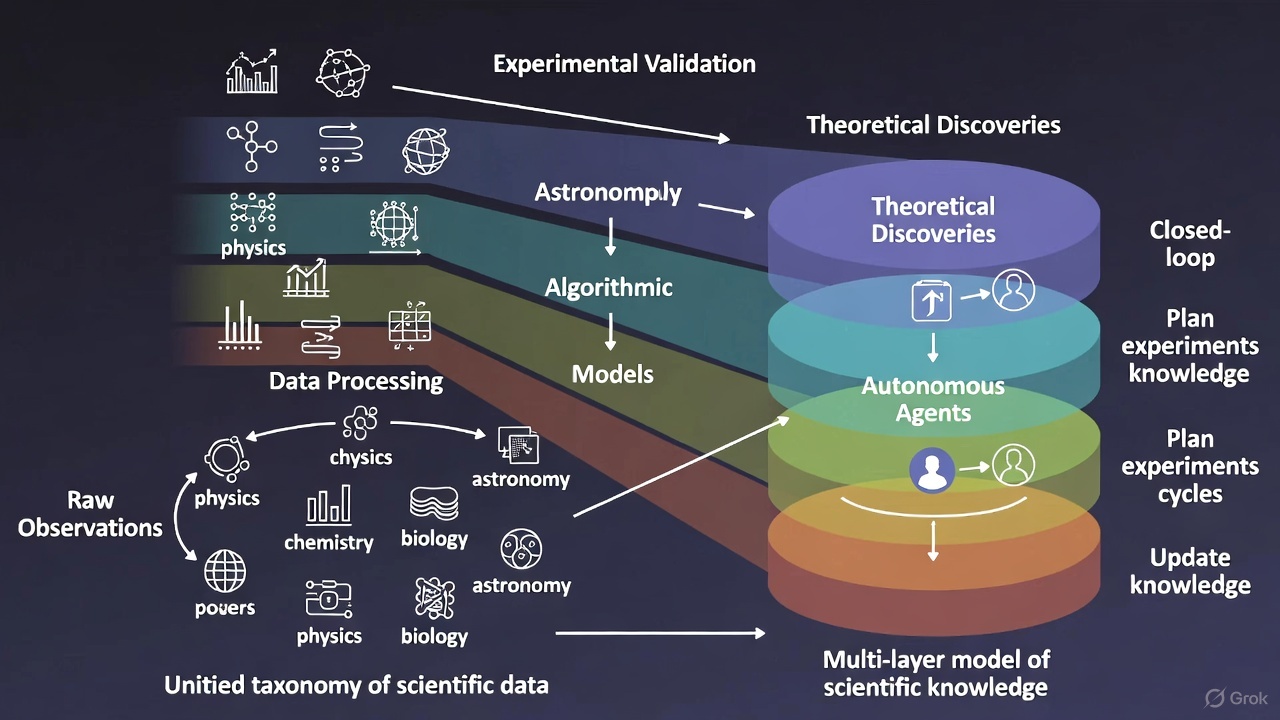

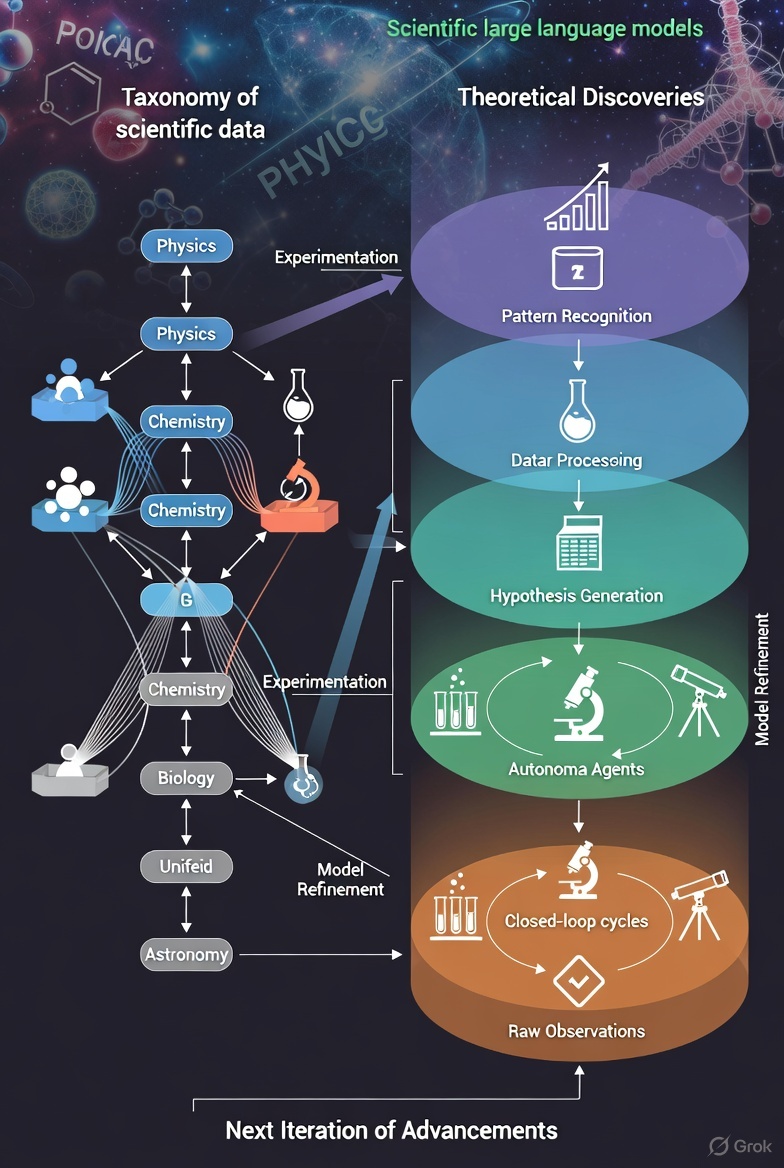

1. A unified taxonomy that categorizes all scientific data (observational, experimental, computational, theoretical, multimodal).

2. A four-layer model of scientific knowledge: raw observations → patterns → mechanisms → unifying theories.

Together, these frameworks guide both pre-training and post-training so that models learn to respect physical laws, propagate uncertainties correctly, and seamlessly bridge text, tables, formulas, code, and images.
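One way to picture the two frameworks is as a pair of labels attached to every piece of scientific data. The sketch below is only an illustration: the class and field names are assumptions made for this example, not a schema defined in the survey.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum
from typing import Optional


class DataCategory(Enum):
    """The five data categories named in the taxonomy."""
    OBSERVATIONAL = "observational"
    EXPERIMENTAL = "experimental"
    COMPUTATIONAL = "computational"
    THEORETICAL = "theoretical"
    MULTIMODAL = "multimodal"


class KnowledgeLayer(IntEnum):
    """The four knowledge layers, ordered from concrete to abstract."""
    RAW_OBSERVATION = 1
    PATTERN = 2
    MECHANISM = 3
    THEORY = 4


@dataclass(frozen=True)
class ScientificRecord:
    """A hypothetical record tagged with both a category and a layer."""
    description: str
    category: DataCategory
    layer: KnowledgeLayer


def next_layer(layer: KnowledgeLayer) -> Optional[KnowledgeLayer]:
    """Return the next, more abstract layer, or None at the top."""
    if layer is KnowledgeLayer.THEORY:
        return None
    return KnowledgeLayer(layer + 1)


spectrum = ScientificRecord(
    "raw stellar spectrum",
    DataCategory.OBSERVATIONAL,
    KnowledgeLayer.RAW_OBSERVATION,
)
print(spectrum.layer.name, "->", next_layer(spectrum.layer).name)
```

Using an ordered enum for the layers reflects the arrow notation above: each layer is meant to build on the one before it.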

Domain-Specific SciLLMs Are Already Outperforming Generalists

- Physics: Models now rival human experts at LHC event reconstruction.

- Chemistry: Agentic systems like ChemCrow and Coscientist autonomously plan and optimize reactions with near-lab accuracy.

- Biology: Specialized BioGPT variants achieve 85–90 % precision in variant calling and protein-function prediction.

- Materials & Astronomy: Galactica-style models predict crystal structures and detect exoplanets 20–35 % faster than traditional pipelines.

- Universal assistants (e.g., EXAONE 3.0) dynamically route queries to domain experts and score over 90 % on cross-disciplinary benchmarks.

Evaluation Is Changing Too

Old-style multiple-choice quizzes are being replaced by process-oriented benchmarks that grade chain-of-thought reasoning, tool use, intermediate reproducibility, and full experimental workflows. Modern tests like GPQA, LAB-Bench, and MultiAgentBench reveal that today’s best SciLLMs are still far from perfect, but they are improving dramatically with every iteration.

The Ultimate Vision: Closed-Loop Autonomous Agents

The survey’s boldest claim is that the future belongs to self-improving agent loops:

→ Plan hypothesis

→ Design experiment or simulation

→ Execute (in silico or via robotic lab APIs)

→ Analyze results

→ Update knowledge base

→ Repeat
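The loop above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the survey's method or any real agent framework: `simulated_yield` stands in for a simulation or robotic lab call, the "hypothesis" is simply a guess at the best reaction temperature, and every name is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    # (temperature, yield) pairs observed so far
    observations: list = field(default_factory=list)

    def best(self):
        """Return the observation with the highest yield seen so far."""
        return max(self.observations, key=lambda obs: obs[1])


def simulated_yield(temperature):
    """Stand-in for the 'execute' step; this toy yield peaks at 70 degrees."""
    return 100.0 - (temperature - 70.0) ** 2 / 10.0


def plan_hypothesis(kb, step):
    """Hypothesize that a better temperature lies one step from the best one."""
    best_temp, _ = kb.best()
    return [best_temp - step, best_temp + step]


def run_loop(start_temp=40.0, step=16.0, min_step=0.5):
    kb = KnowledgeBase()
    kb.observations.append((start_temp, simulated_yield(start_temp)))
    while step >= min_step:                                      # repeat
        _, previous_best = kb.best()
        candidates = plan_hypothesis(kb, step)                   # plan and design
        results = [(t, simulated_yield(t)) for t in candidates]  # execute
        improved = max(y for _, y in results) > previous_best    # analyze
        kb.observations.extend(results)                          # update knowledge base
        if not improved:
            step /= 2  # no gain at this scale, so search more finely
    return kb.best()


best_temp, best_yield = run_loop()
print(f"best temperature: {best_temp}, yield: {best_yield}")
```

The point of the sketch is the control flow: each pass reads from the knowledge base before planning and writes back to it after analysis, which is what makes the loop closed.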

Real-world examples already exist in 2025: multi-agent drug-screening platforms that cut validation time from months to days, autonomous chemistry optimizers achieving 89 % yield correlation with physical labs, and virtual-cell models that iteratively refine disease pathways using genomics, proteomics, and imaging feedback.

Conclusion

Scientific LLMs are no longer just bigger language models with science papers sprinkled on top. They are evolving into data-rich, process-verified, evidence-anchored autonomous researchers. The combination of multimodal foundations, layered scientific reasoning, and closed agent loops is turning AI from a helpful intern into a genuine collaborator and, in some narrow domains, already into an independent discoverer.

Read the full 94-page survey here: https://arxiv.org/abs/2508.21148