In the high-stakes arena of AI hardware, where fortunes rise and fall on the back of silicon efficiency, Google's latest move has sent shockwaves through the industry. On November 6, 2025, Google Cloud announced the general availability of Ironwood, its seventh-generation Tensor Processing Unit (TPU).

This isn't just an incremental upgrade - it's a seismic shift. Ironwood delivers more than four times the per-chip performance of its predecessor, the Trillium TPU, while packing a staggering 192 GB of high-bandwidth memory (HBM) per chip. What's more, it supports clustering up to 9,216 chips into a single superpod, enabling unprecedented scale for AI workloads.

For context, that's enough computational muscle to train models that could simulate entire economies or predict climate patterns with eerie precision.
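For a sense of the memory scale alone, multiplying the per-chip and per-pod figures above gives the total HBM in a full superpod. The sketch below is plain arithmetic on those two quoted numbers; it assumes decimal units (1 PB = 1,000,000 GB), and the function and constant names are illustrative, not part of any Google API.

```python
# Back-of-the-envelope check: aggregate HBM across one full Ironwood superpod.
# Inputs are the figures quoted in the article; decimal units are an assumption.

CHIPS_PER_SUPERPOD = 9_216  # maximum chips per superpod (quoted above)
HBM_PER_CHIP_GB = 192       # HBM per chip in GB (quoted above)


def superpod_hbm_gb(chips: int, hbm_per_chip_gb: int) -> int:
    """Return the total HBM across all chips in a pod, in GB."""
    return chips * hbm_per_chip_gb


total_gb = superpod_hbm_gb(CHIPS_PER_SUPERPOD, HBM_PER_CHIP_GB)
total_pb = total_gb / 1_000_000  # convert GB to decimal petabytes

print(f"{total_gb:,} GB = {total_pb:.2f} PB")  # 1,769,472 GB = 1.77 PB
```

In other words, a single superpod pools roughly 1.77 PB of high-bandwidth memory.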

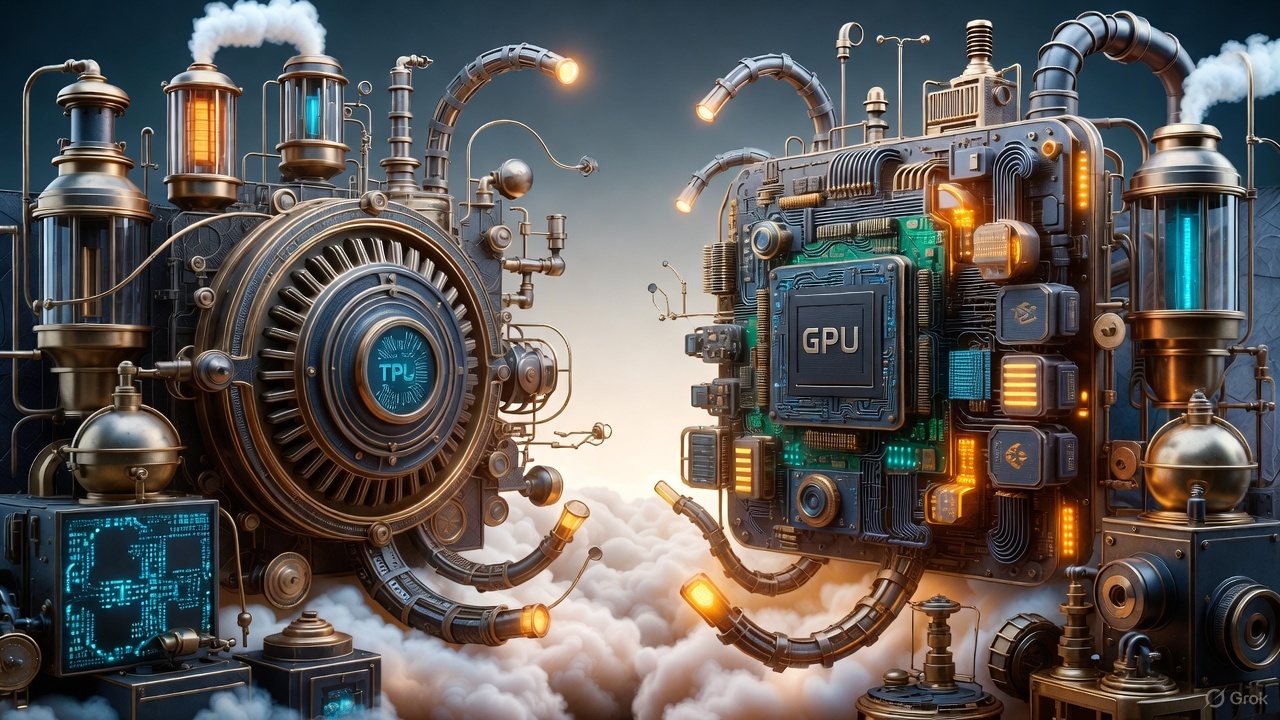

But here's the kicker: TPUs aren't your everyday graphics cards. Unlike Nvidia's versatile GPUs, which can handle everything from rendering photorealistic video games to crunching scientific simulations, TPUs are purpose-built beasts for AI alone. They're optimized for the two pillars of machine learning - training massive models from scratch and running inference (the real-time predictions your chatbot relies on).

Sure, they lack the flexibility of GPUs; you won't be firing up Cyberpunk 2077 on a TPU cluster without some serious hacking. Where they shine is raw efficiency: for inference tasks, Ironwood draws up to 2.5 times less power than comparable GPU setups, according to Google's benchmarks.

In an era when data centers guzzle more electricity than small countries, with global data-center electricity demand, fueled largely by AI, projected to hit roughly 1,000 terawatt-hours annually by 2026, this isn't just a spec-sheet flex; it's a sustainability mandate.

Historically, TPUs were Google's secret sauce, used in-house or rented out only through Google Cloud. That is changing with Ironwood: Google is reportedly pitching these chips for direct deployment in customers' own data centers, bypassing the cloud middleman. That could open the floodgates for hyperscalers and enterprises to build custom AI supercomputers without Nvidia's stranglehold on the supply chain.

Early adopters are lining up: Anthropic, the brains behind the Claude AI series, inked a multibillion-dollar deal in October 2025 to tap up to one million TPUs. That's not hyperbole - it's a gigawatt-scale commitment to fuel next-gen Claude models, blending Google's hardware with Anthropic's frontier AI research.

And just days ago, reports emerged of Meta Platforms, under Mark Zuckerberg's watchful eye, negotiating a deal worth billions for TPU shipments starting in 2027. Meta, which already burns through Nvidia GPUs like candy, is eyeing TPUs for both training and inference, potentially diversifying its $100 billion-plus annual AI infrastructure spend.

Nvidia's response? A masterclass in corporate poker face. The GPU giant, now valued at a jaw-dropping $3.2 trillion, issued a statement via its newsroom: "We're delighted by Google's success - they've made impressive strides in AI—and we continue to supply them with our solutions." It's the kind of backhanded compliment that reeks of unease.

Google is itself a major Nvidia customer, offering Nvidia GPUs alongside its own TPUs in Google Cloud, but this platitude came hot on the heels of a 5.2% stock plunge on November 25, wiping out $160 billion in market cap in a single session. Wall Street analysts piled on, with firms like Piper Sandler slashing price targets amid fears of eroding margins. When a company that's accustomed to dictating terms to the world feels compelled to clap back publicly, you know the ground is shifting.

So, why does this TPU offensive hit Nvidia harder than its perennial rival, AMD? It's all about the throne room. Nvidia commands 85-90% of the AI accelerator market, a near-monopoly built on lock-in to its CUDA software ecosystem and relentless product cycles like the Blackwell series.

Most major AI breakthroughs - from OpenAI's GPT lineage to Tesla's self-driving models - lean on Nvidia's GPUs. TPUs, as application-specific integrated circuits (ASICs), laser-focus on AI's sweet spot, undercutting Nvidia on cost per FLOP (floating-point operation) by 30-40% in optimized workloads.

Ironwood's inference prowess, in particular, targets the fast-growing edge-AI market, projected to reach $200 billion by 2030, where low-latency, power-thrifty chips rule. Heavy AI spenders like Meta and Anthropic aren't just buying hardware; they're betting on vertical integration to slash opex, and Google's end-to-end stack (chips plus software such as TensorFlow and JAX) makes that seamless.

AMD, by contrast, is more of a scrappy challenger than a direct decapitation threat. Its Instinct MI300X GPUs offer solid bang-for-buck - up to 1.5x the performance of Nvidia's H100 at half the price - but AMD's AI market share hovers around 5-10%.

Without a comparable software moat (ROCm lags CUDA in developer adoption), AMD is strongest in cost-sensitive niches such as high-performance computing (HPC) rather than in the AI mainstream.

TPUs nibble at AMD's edges too, validating the ASIC model that could inspire more custom silicon from cloud giants. But for Nvidia, whose revenue is 80% AI-derived, losing even 10% of hyperscaler deals to TPUs could cascade into a $50 billion revenue hit by 2028, per analyst estimates from Barclays. AMD? It's already playing catch-up; a TPU ripple might even indirectly boost it by fragmenting Nvidia's pricing power.

In the end, Ironwood isn't just a chip - it's a manifesto. Google is weaponizing its AI-native DNA to reclaim control from the GPU overlord, proving that specialization trumps universality in the inference explosion ahead. Nvidia's engineers are no doubt burning the midnight oil on Blackwell's successors, but the genie's out: diverse silicon is the new normal.

For investors and innovators alike, the real game isn't GPU vs. TPU - it's who scales smartest in a world where AI isn't a luxury, but the infrastructure of tomorrow. As Zuckerberg reportedly quipped in internal memos, "Why rent the road when you can own the highway?" Nvidia had better start paving alternatives, fast.

Author: Slava Vasipenok

Founder and CEO of QUASA (quasa.io) — the world's first remote work platform with payments in cryptocurrency.

Innovative entrepreneur with over 20 years of experience in IT, fintech, and blockchain. Specializes in decentralized solutions for freelancing, helping to overcome the barriers of traditional finance, especially in developing regions.