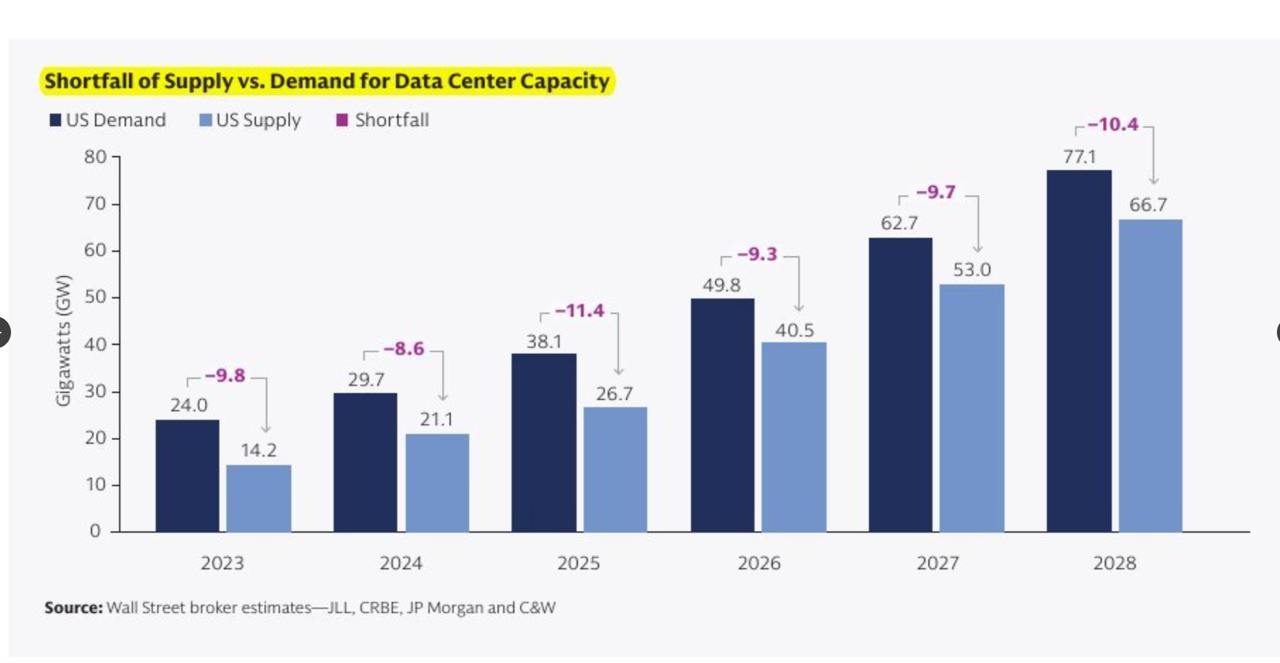

Goldman Sachs’ report, *Powering the AI Era*, highlights a critical challenge in the AI revolution: data centers powering AI workloads are consuming electricity faster than the energy sector can build new capacity.

The future of the AI industry depends not only on advanced chips but also on securing innovative financing and capital to fund massive infrastructure. This article summarizes the report’s key insights, focusing on AI’s energy demands, the strain on aging power grids, and the solutions operators are deploying to keep pace.

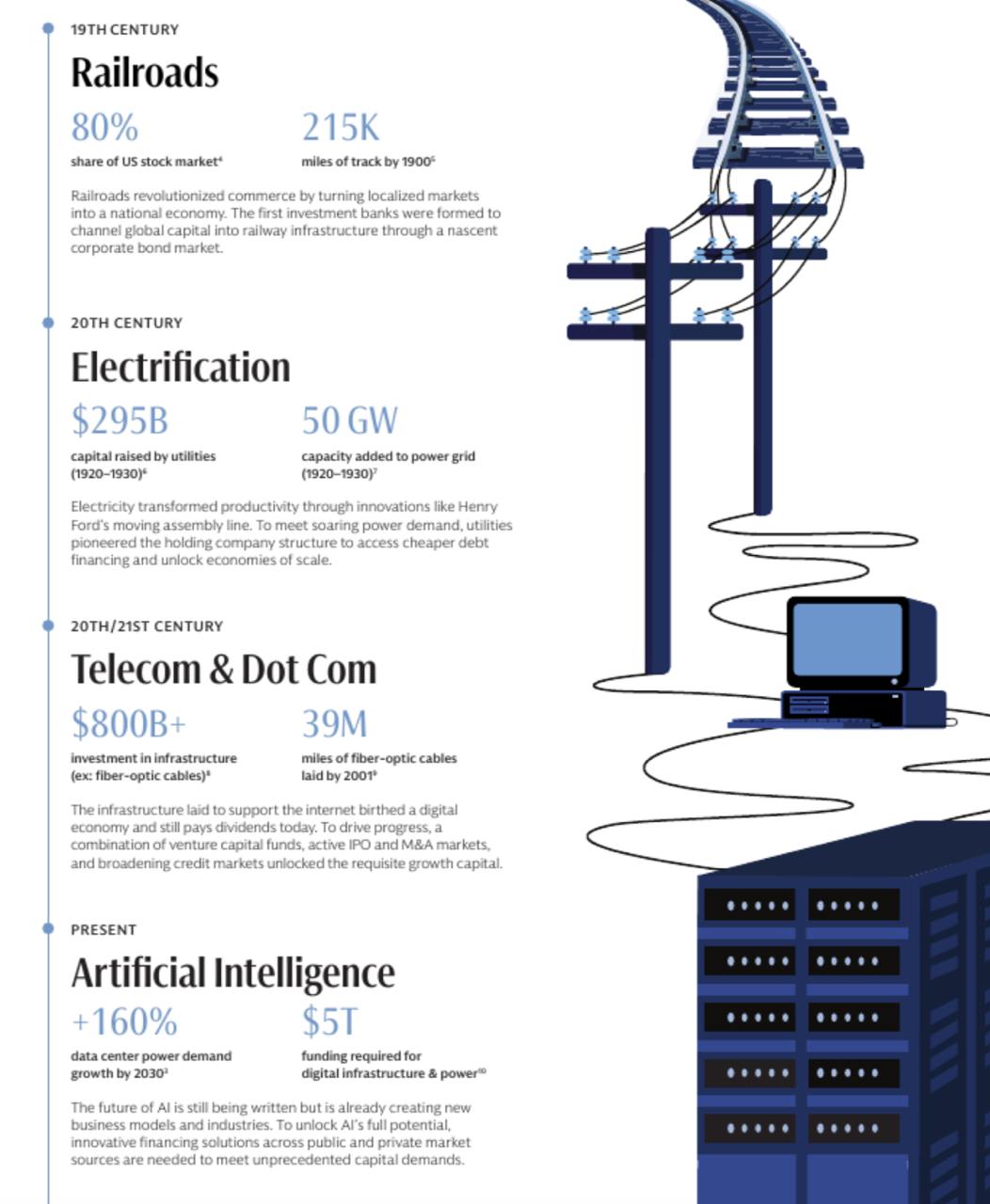

A Historical Parallel: Infrastructure as the Backbone of Technological Booms

Every major technological leap has relied on robust infrastructure:

- In the 19th century, railroads enabled industrial expansion.

- In the 1990s, fiber-optic networks powered the internet revolution.

- In the 2020s, GPU racks are the cornerstone of the AI era.

A single “AI factory” with a capacity of 250 MW costs approximately $12 billion to build, reflecting the immense capital investment required to sustain AI’s growth.
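For a rough sense of scale, the two figures above imply a capital cost per megawatt. The Python sketch below only divides the numbers quoted in this article. The function name and the 1,000 MW campus are hypothetical, and scaling cost linearly with capacity is an assumption rather than something the report states.

```python
# Figures quoted in the article for a single "AI factory".
FACTORY_CAPACITY_MW = 250
FACTORY_COST_USD = 12e9


def cost_per_mw(cost_usd: float, capacity_mw: float) -> float:
    """Capital cost per megawatt of capacity."""
    return cost_usd / capacity_mw


usd_per_mw = cost_per_mw(FACTORY_COST_USD, FACTORY_CAPACITY_MW)
print(f"Implied cost: ${usd_per_mw / 1e6:.0f} million per MW")

# Hypothetical 1,000 MW campus, ASSUMING cost scales linearly with capacity.
hypothetical_capacity_mw = 1_000
hypothetical_cost_usd = usd_per_mw * hypothetical_capacity_mw
print(f"Hypothetical 1,000 MW campus: ${hypothetical_cost_usd / 1e9:.0f} billion")
```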

Why AI Training is So Energy-Intensive

AI’s voracious energy appetite stems from its computational demands:

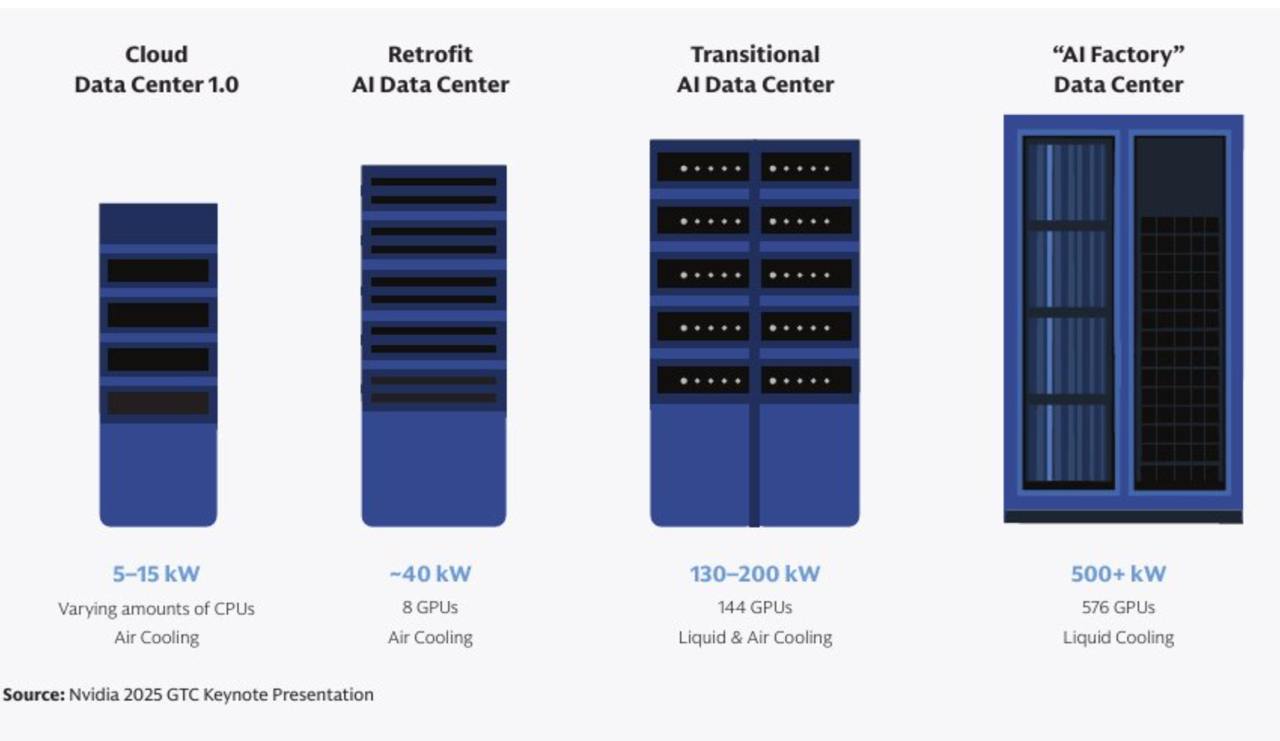

- Dense GPU Clusters: AI data centers house thousands of GPUs with liquid cooling systems to manage heat, significantly increasing power consumption.

- Surging Power Density: By 2027, a single AI server rack is projected to draw 50 times more power than a 2022 cloud computing rack.

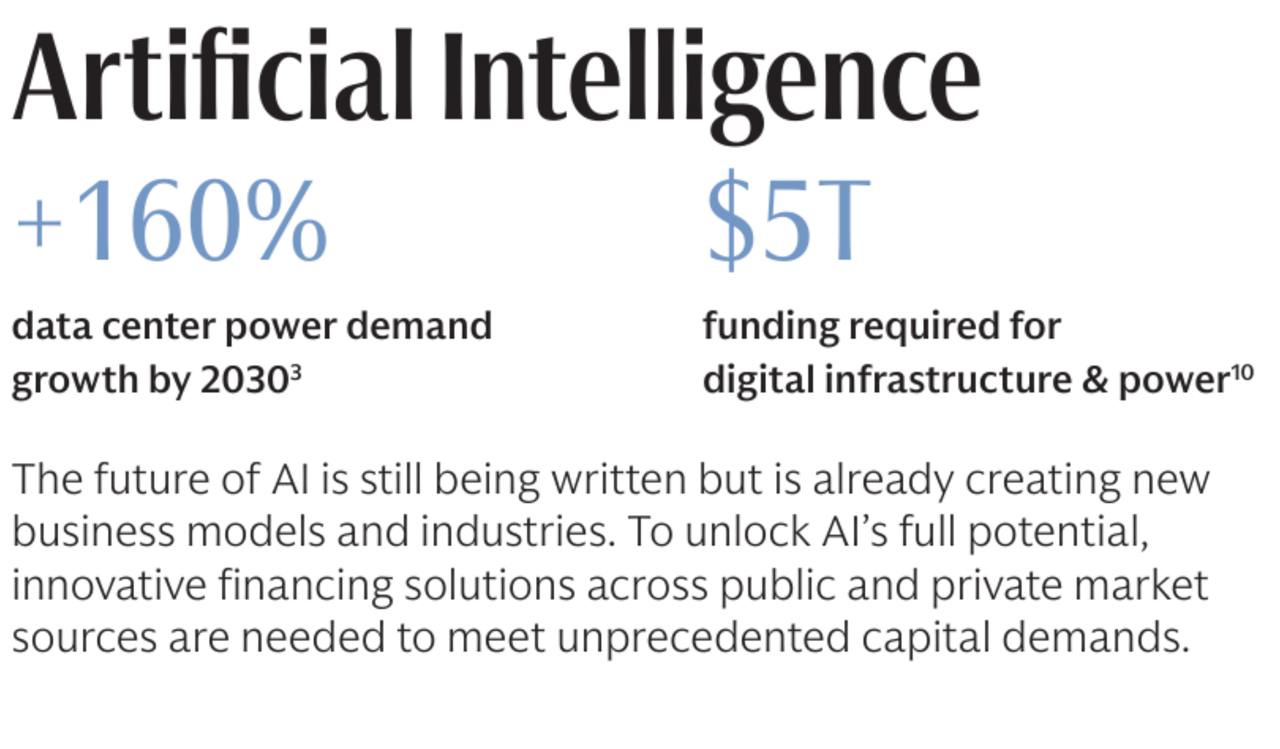

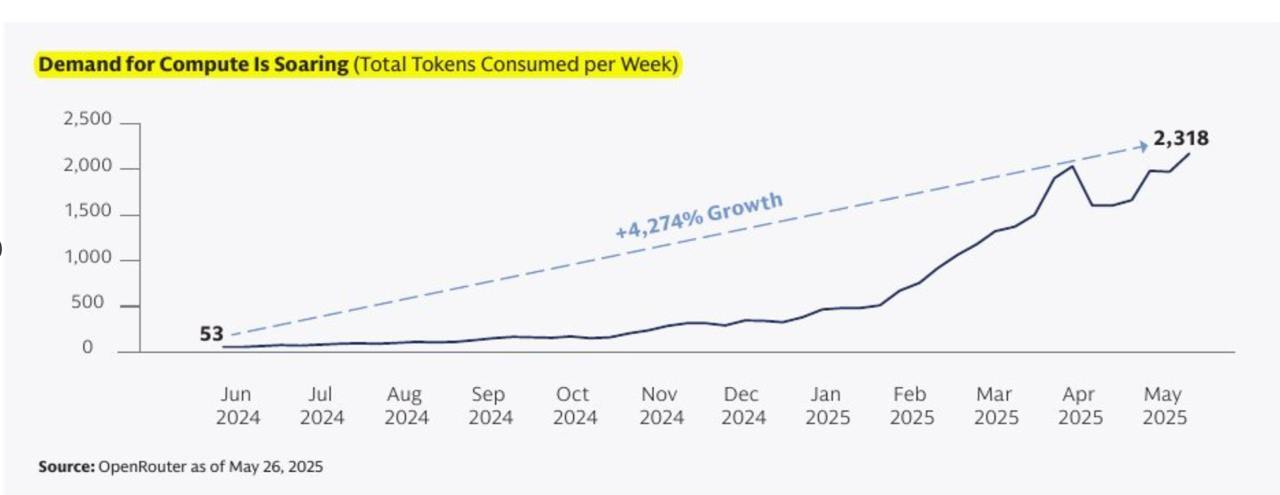

- Global Demand Surge: Even with efficiency improvements, global data center power demand is expected to grow by 160% by 2030, driven largely by AI workloads.

For context, a single ChatGPT query consumes 2.9 watt-hours of electricity, nearly ten times that of a Google search. As AI adoption accelerates, this energy intensity will reshape global power markets.
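The per-query and growth figures above can be turned into a quick back-of-the-envelope calculation. The Python sketch below uses only the 2.9 Wh, "nearly ten times", and 160% numbers from this article. The daily query volume is a purely hypothetical placeholder chosen for illustration, and the function names are not from the report.

```python
# Figures quoted in the article.
CHATGPT_WH_PER_QUERY = 2.9   # watt-hours per ChatGPT query
SEARCH_RATIO = 10            # "nearly ten times" a Google search (approximate)
DEMAND_GROWTH_PERCENT = 160  # projected growth in data center power demand by 2030


def implied_search_wh(chatgpt_wh: float, ratio: float) -> float:
    """Energy per search implied by the quoted ratio."""
    return chatgpt_wh / ratio


def daily_energy_mwh(queries_per_day: float, wh_per_query: float) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return queries_per_day * wh_per_query / 1e6


def demand_multiplier(growth_percent: float) -> float:
    """Convert a percentage increase into a multiple of the starting level."""
    return 1 + growth_percent / 100


# HYPOTHETICAL volume for illustration only; not a figure from the report.
hypothetical_queries_per_day = 1_000_000_000

search_wh = implied_search_wh(CHATGPT_WH_PER_QUERY, SEARCH_RATIO)
daily_mwh = daily_energy_mwh(hypothetical_queries_per_day, CHATGPT_WH_PER_QUERY)
multiplier = demand_multiplier(DEMAND_GROWTH_PERCENT)

print(f"Implied energy per search: about {search_wh:.2f} Wh")
print(f"Hypothetical daily energy: {daily_mwh:,.0f} MWh")
print(f"160% growth means demand reaches {multiplier:.1f}x its starting level")
```

Note that a 160% increase means demand ends at 2.6 times its starting level, not 1.6 times.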

Aging Grids Under Pressure

The report highlights the unpreparedness of current energy infrastructure:

- **Outdated Infrastructure**: The average age of U.S. power lines is 40 years, ill-equipped to handle AI-driven demand spikes.

- **Permitting Delays**: Securing permits for new gas-fired power plants can take up to seven years, creating significant bottlenecks.

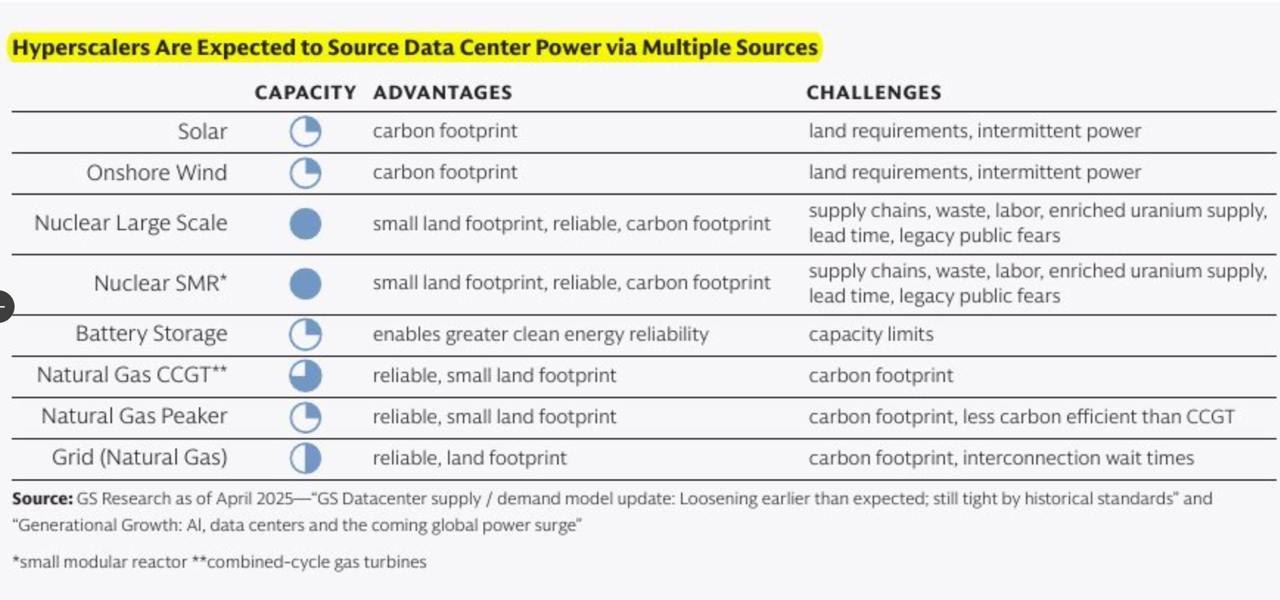

- **Power Source Mix**: Goldman Sachs projects that new energy capacity to meet data center demand by 2030 will come from:

  - 30% combined-cycle gas plants

  - 30% gas “peakers” (plants for peak demand)

  - 27.5% solar energy

  - 12.5% other sources (e.g., nuclear, geothermal)

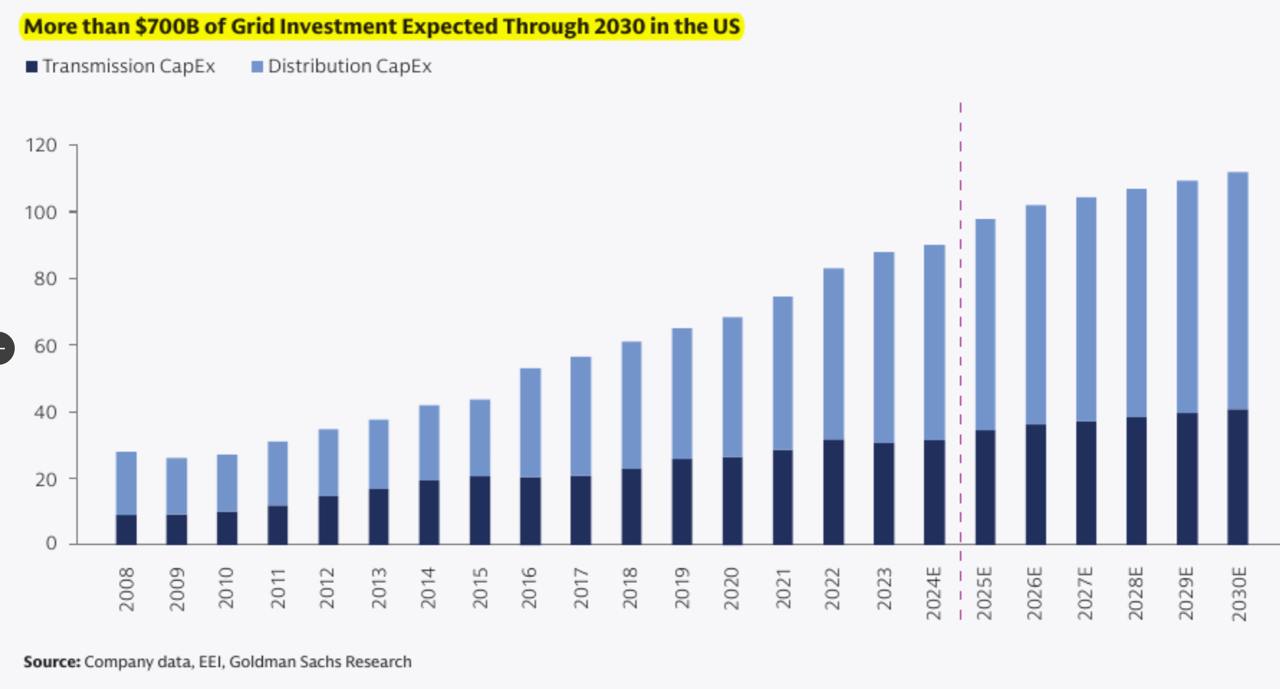

By 2030, data centers are expected to consume 8% of U.S. power, up from 3% in 2022, requiring approximately $50 billion in new generation capacity and $720 billion in grid upgrades.
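A few lines of Python make the proportions in this section easier to compare. The sketch below is a sanity check on the figures quoted in this article only: it confirms the capacity mix sums to 100%, totals the gas share, and compares the two investment figures. The variable names are illustrative and nothing here comes from the report beyond the quoted numbers.

```python
# Projected mix of new capacity for data center demand by 2030 (percent).
CAPACITY_MIX = {
    "combined-cycle gas": 30.0,
    "gas peakers": 30.0,
    "solar": 27.5,
    "other (e.g., nuclear, geothermal)": 12.5,
}

# Data center share of U.S. power consumption (percent).
SHARE_2022 = 3.0
SHARE_2030 = 8.0

# Investment figures quoted in the article (billions of USD).
GENERATION_USD_BN = 50.0
GRID_USD_BN = 720.0

total_mix = sum(CAPACITY_MIX.values())
gas_share = CAPACITY_MIX["combined-cycle gas"] + CAPACITY_MIX["gas peakers"]
share_multiple = SHARE_2030 / SHARE_2022
total_investment_bn = GENERATION_USD_BN + GRID_USD_BN
grid_fraction = GRID_USD_BN / total_investment_bn

print(f"Mix total: {total_mix:.1f}%")
print(f"Gas (combined-cycle plus peakers): {gas_share:.1f}%")
print(f"Data center share of U.S. power grows {share_multiple:.1f}x")
print(f"Grid upgrades are {grid_fraction:.0%} of ${total_investment_bn:.0f} billion")
```

On these numbers, gas accounts for well over half of the projected new capacity, and grid upgrades dwarf the spending on generation itself.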

How Operators Are Adapting

To address these challenges, data center operators are adopting innovative strategies:

- Colocation with Power Sources: Building data centers directly beside power generators bypasses grid constraints and accelerates deployment.

- Microgrids for Stability: Operators are using microgrids to smooth out peak loads, ensuring reliable power supply without overburdening regional grids.

- Community Tensions: These approaches are not free of friction. On-site diesel or gas turbines running around the clock generate constant noise, which can put operators in conflict with local communities.

Additionally, companies are investing in low-carbon solutions, such as nuclear and geothermal energy, to align with sustainability goals.

The Financing Imperative

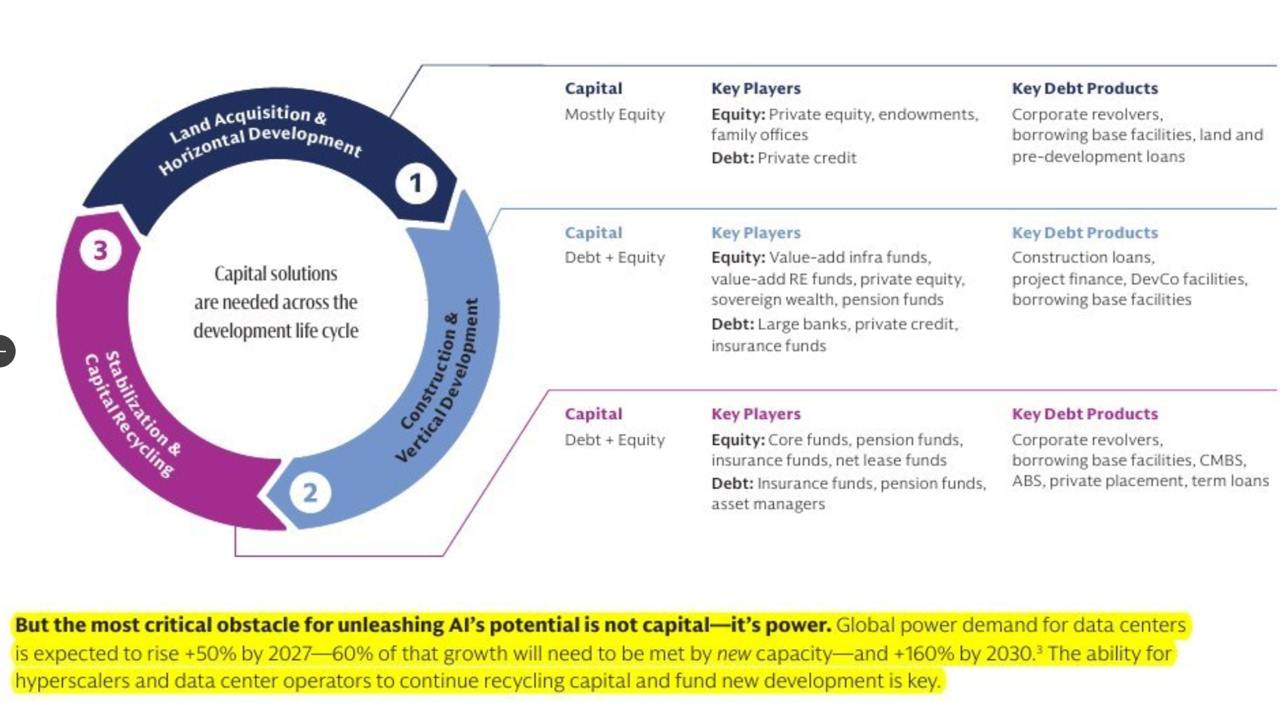

The report emphasizes that the AI industry’s future depends on creative financing to fund infrastructure. Goldman Sachs estimates $6.7 trillion in global capital expenditures by 2030, with $5.2 trillion allocated to AI-specific infrastructure.

Key financing strategies include:

- Public-Private Partnerships: Initiatives like clean transition tariffs align utility incentives with data center needs.

- Strategic Investments: Hyperscalers and data center operators are leveraging joint ventures with public pension funds and sovereign wealth funds to secure capital.

- Regulatory Navigation: Overcoming permitting delays and outdated frameworks is critical to unlocking these investments.

Challenges and Risks

The path to powering the AI era is fraught with challenges:

- Regulatory Bottlenecks: Interconnection delays hinder rapid expansion.

- Environmental Concerns: Data center emissions could double by 2030 to 220 million tons of CO2, driven by reliance on natural gas.

- Social and Political Risks: Rising energy costs could disproportionately affect low-income households, creating political resistance to AI-driven power demand.

Conclusion

Goldman Sachs’ *Powering the AI Era* report makes it clear: AI’s transformative potential is constrained by the energy sector’s ability to keep up. The projected 160% surge in data center power demand by 2030 requires not only technological innovation but also unprecedented capital investment and creative financing. As operators build next-generation infrastructure and navigate regulatory and community challenges, the winners in the AI race will be those who can secure reliable power and sustainable funding. The stakes are high, but so are the opportunities for those who can bridge the gap between AI’s energy demands and the world’s aging power systems.