Pika Labs, a rising star in the AI video generation space, has introduced a groundbreaking new model that promises to revolutionize lip-sync technology.

The startup recently announced a system that it says generates high-definition (HD) videos with precise lip synchronization to audio tracks in just six seconds, regardless of clip length. According to the company, the tool delivers "hyper-realistic facial expressions" and runs 20 times faster than, and at one-twentieth the cost of, its previous-generation model.

A Leap Forward in AI Video Generation

The new model marks a significant advancement in AI-driven video production, addressing one of the longstanding challenges in the field: syncing lip movements with audio seamlessly and efficiently. By processing videos in near real time, Pika Labs eliminates the lengthy rendering times typically associated with high-quality lip-syncing, making it an attractive option for creators, filmmakers, and content producers. The system’s ability to handle any clip length suggests versatility, from short social media clips to extended narrative sequences.

Pika Labs claims that the model’s hyper-realistic output overcomes the "uncanny valley" effect, a common pitfall where AI-generated faces appear almost human but slightly off, causing discomfort. Early access users have praised the tool’s speed, accuracy, and ability to adapt to complex audio tracks, including those with multiple voices or intricate soundscapes.

Performance and Cost Efficiency

The standout feature of this new model is its dramatic improvement in performance and cost-effectiveness.

By achieving a 20-fold increase in speed and a matching 20-fold reduction in cost compared to the previous iteration, Pika Labs is positioning itself as a leader in accessible AI video technology.

This efficiency could democratize high-quality video production, allowing independent creators and small studios to compete with larger production houses without breaking the bank.

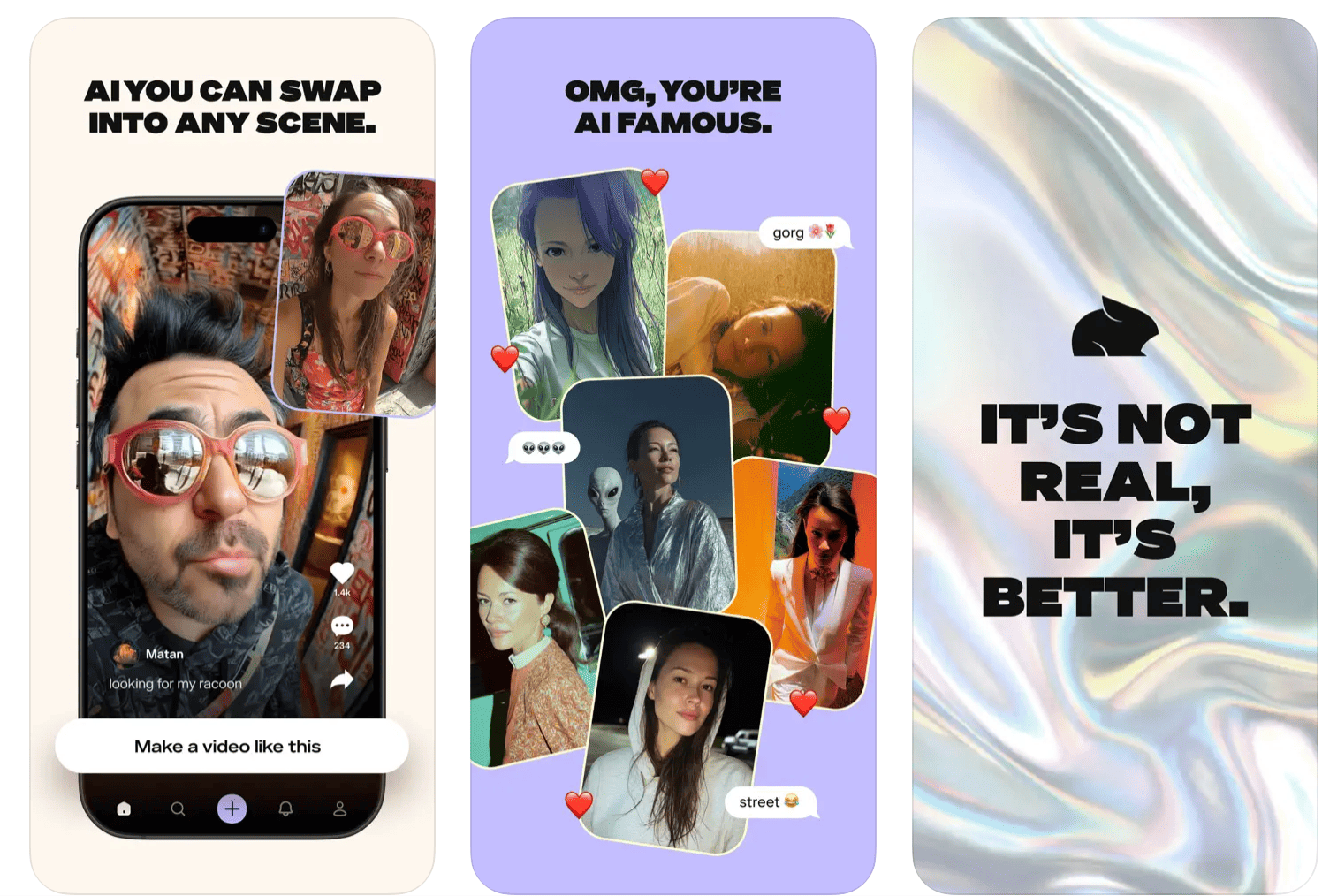

The six-second generation time is particularly impressive given that the model supports a range of styles (photorealistic, anime, pixel art, and fantasy) without compromising quality. This flexibility, combined with its affordability, could disrupt traditional video editing workflows and open new creative possibilities.

Real-Time Capability Confirmed

Early testing has validated the model’s ability to operate in real time, even with complex audio tracks.

This capability is a game-changer for live applications, such as virtual avatars, interactive storytelling, or real-time dubbing. The technology’s robustness with intricate soundscapes suggests it could handle diverse use cases, from multilingual content to dynamic musical performances, further broadening its appeal.

While the model is still in early access, the initial feedback highlights its potential to transform AI filmmaking. Industry observers speculate that this could lead to longer, high-fidelity scenes generated from single prompts and even pave the way for actors to license their digital likenesses for automated content creation.

Looking Ahead

As Pika Labs continues to refine this technology, the focus will likely shift toward expanding accessibility and integrating additional features, such as enhanced customization or support for even more complex audio inputs.

With its latest innovation, Pika Labs is not just keeping pace with the rapidly evolving AI video generation market but setting a new standard for speed, cost, and realism.

Creators eager to explore this tool will be watching closely as it moves from early access to wider availability, potentially reshaping the future of digital content creation.