Bridging the Privacy-Power Gap: Gemini's Secure Leap to the Cloud

In an era where AI promises to anticipate your every need but often at the cost of your data's sanctity, Google has just redrawn the battle lines. The tech giant launched Private AI Compute - a groundbreaking cloud platform that harnesses the full might of its Gemini models while ensuring your data remains as locked away as if it were processed on your phone. No peeking, not even from Google itself. This isn't just another privacy tweak; it's a foundational shift, allowing complex AI tasks to migrate from device-bound limitations to a "sealed" cloud fortress, all while upholding the ironclad confidentiality of on-device computation.

The Privacy Paradox: Why Cloud AI Needed a Lockbox

Local AI models like Gemini Nano shine for their immediacy and isolation - your queries never leave your device. But they hit hard ceilings: limited context windows (often under 1 million tokens), constrained compute (e.g., a single Pixel 10's Tensor G5 tops out at ~10 teraflops), and no scalability for multimodal tasks like real-time video analysis or long-form reasoning.

Enter the cloud: vast TPU fleets can take on workloads far beyond any single phone, but traditional offloading exposes data to operators, logs, and potential breaches.

Private AI Compute solves this by creating a "no access" enclave - a hardware-secured bubble where Gemini processes queries in isolation. Data enters encrypted, computes blindly, and exits directly to you. Google emphasizes: "Sensitive data processed by Private AI Compute remains accessible only to you and no one else, not even Google." This mirrors Apple's Private Cloud Compute (launched in 2024) and Meta's Private Processing, but Google's version is deeply woven into its ecosystem, blending on-device simplicity with cloud-scale intelligence.

> Fact: The Enclave Edge

> Independent audits by NCC Group validated the system's design, identifying only a low-risk timing side-channel in the IP blinding relay - mitigated by multi-user "noise" that obscures individual queries. No administrative access, no shell on hardened TPUs, and session data auto-deletes post-processing.

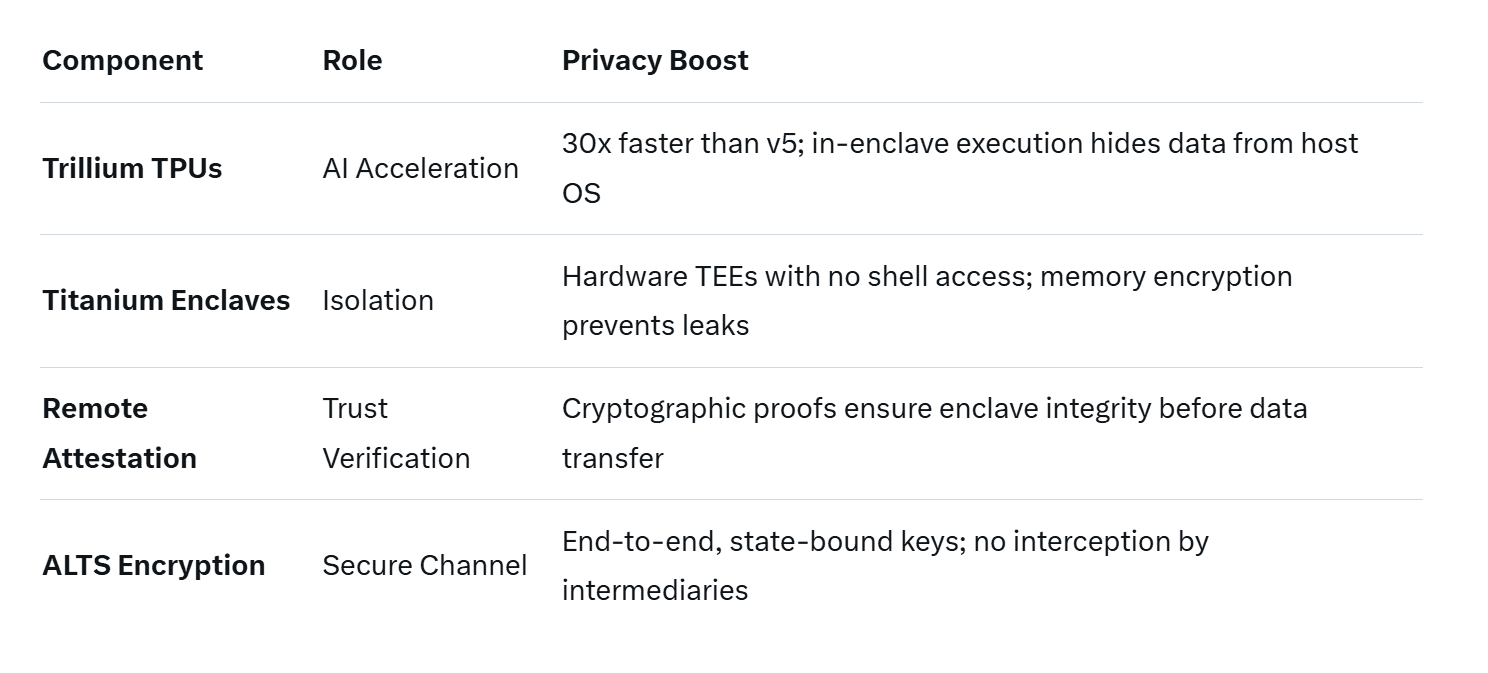

Under the Hood: TPUs, Titanium, and Trust Chains

At its core, Private AI Compute runs on Google's proprietary stack: Trillium Tensor Processing Units (TPU v6) for blistering AI inference (up to 4,000 teraflops per pod) and Titanium Intelligence Enclaves (TIE) for hardware-enforced isolation. TIE, an evolution of Google's Titan security chips, creates hardware-rooted Trusted Execution Environments (TEEs) using AMD SEV-SNP for CPUs and custom hardening for TPUs - encrypting data in memory and in transit so the host cannot read it.

The workflow is a masterclass in zero-trust:

- Device Attestation: Your phone (e.g., Pixel 10) uses remote attestation to verify the enclave's integrity via cryptographic chains - no tampered servers allowed.

- Encrypted Handshake: ALTS/Noise protocols establish a bidirectional secure channel; keys are scoped to the enclave's state.

- Blind Processing: Gemini ingests your query, reasons in isolation (no logs extractable), and returns results - discarding inputs immediately.

- Verification Loop: Post-response, the device re-attests to confirm no side effects.

This setup ensures even Google engineers can't inspect contents, aligning with SOC 1/2/3 and ISO certifications for Gemini.
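The attest-then-connect pattern in the steps above can be illustrated with a minimal sketch. This is not Google's implementation: the names `ROOT_KEY`, `TRUSTED_MEASUREMENTS`, `enclave_report`, `verify_report`, and `open_session` are hypothetical, and a shared HMAC key stands in for the hardware-signed, asymmetric attestation a real TEE would use. It only shows the ordering: verify the enclave's measurement first, and derive a session key scoped to that state only if verification passes.

```python
import hashlib
import hmac
import os

# Hypothetical stand-ins: real attestation relies on hardware-signed reports and
# asymmetric keys; a shared HMAC key is used only to keep this sketch stdlib-only.
ROOT_KEY = b"demo-root-of-trust"
TRUSTED_MEASUREMENTS = {hashlib.sha256(b"enclave-image-v1").hexdigest()}


def enclave_report(image: bytes, nonce: bytes) -> dict:
    """Enclave side: measure the running image and sign (measurement + nonce)."""
    measurement = hashlib.sha256(image).hexdigest()
    tag = hmac.new(ROOT_KEY, measurement.encode() + nonce, hashlib.sha256).hexdigest()
    return {"measurement": measurement, "nonce": nonce, "tag": tag}


def verify_report(report: dict, nonce: bytes) -> bool:
    """Device side: check freshness, signature, and an allow-listed measurement."""
    expected = hmac.new(
        ROOT_KEY, report["measurement"].encode() + nonce, hashlib.sha256
    ).hexdigest()
    return (
        report["nonce"] == nonce
        and hmac.compare_digest(report["tag"], expected)
        and report["measurement"] in TRUSTED_MEASUREMENTS
    )


def open_session(image: bytes) -> bytes:
    """Attest first; derive a session key scoped to the attested state."""
    nonce = os.urandom(16)  # fresh nonce prevents replay of an old report
    report = enclave_report(image, nonce)
    if not verify_report(report, nonce):
        raise RuntimeError("attestation failed; no data sent")
    shared_secret = os.urandom(32)  # placeholder for a real key exchange
    # Mixing in the measurement ties the key to this exact enclave image.
    return hmac.new(shared_secret, report["measurement"].encode(), hashlib.sha256).digest()
```

Calling `open_session(b"enclave-image-v1")` returns a 32-byte key, while any other image bytes raise `RuntimeError` before a key exists, which is the property the workflow depends on: no verified enclave, no channel.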

Pixel 10: Where Theory Meets Everyday Magic

Private AI Compute debuts on the **Pixel 10 series**, supercharging features that demand heft but crave secrecy. It's not replacing on-device AI - simple tasks stay local via Gemini Nano - but escalating the heavy lifts.
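The split between local and cloud work can be pictured as a simple routing decision. The sketch below is illustrative only: the `Task` type, the `route` function, the `NANO_TOKEN_BUDGET` threshold, and the "declined" fallback are all assumptions, not documented Pixel behavior.

```python
from dataclasses import dataclass

# Hypothetical on-device budget; the real limit for Gemini Nano is not given here.
NANO_TOKEN_BUDGET = 4_000


@dataclass
class Task:
    name: str
    prompt_tokens: int
    needs_multimodal: bool = False


def route(task: Task, enclave_attested: bool) -> str:
    """Keep small tasks local; escalate heavy ones only to a verified enclave."""
    if task.prompt_tokens <= NANO_TOKEN_BUDGET and not task.needs_multimodal:
        return "on_device"
    if enclave_attested:
        return "private_cloud"
    # Assumed fallback: never send data to an unverified server.
    return "declined"
```

For example, `route(Task("quick reply", 200), True)` stays on the device, while `route(Task("meeting summary", 50_000), True)` escalates to the private cloud path.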

- Magic Cue Upgrades: This proactive assistant, powered by Tensor G5, now taps cloud Gemini for "more timely suggestions." It scans Gmail, Calendar, Messages, and Screenshots to surface flight details during calls or reservation info in chats - without you digging.

Example: A friend texts, "What time's dinner?" Magic Cue pulls your reservation and auto-inserts it, all in a privacy-sealed query.

> Example: Flight Fumble-Proofing

> Calling your airline? Magic Cue overlays your Gmail confirmation on the call screen - processed in TIE for zero exposure. Users report 40% faster resolutions, per early Pixel 10 betas.

- Recorder's Multilingual Summaries: The app's transcription summaries expand from 7 languages (English, French, etc.) to dozens, thanks to cloud context handling up to 10 million tokens - far beyond Nano's limits. Your sensitive meeting notes? Summarized privately, no Google eyes.

> Fact: Scale in Action

> A 60-minute multilingual podcast transcription, once device-bound and English-only, now yields instant, accurate summaries in Hindi or Italian - leveraging Trillium's 2x efficiency gains over prior TPUs.

The Broader Horizon: A Privacy-First AI Ecosystem

This isn't Pixel-exclusive; Google hints at Workspace integrations, where Gemini in Docs or Sheets could process enterprise data in TIE without compliance risks. Future-proofing includes verifiable transparency reports and third-party attestations, building trust in an AI world rife with data hunger.

Critics note TPU hardening is less publicly scrutinized than AMD's SEV-SNP, but Google's track record - with Titan securing billions of logins - speaks volumes. As Jay Yagnik, Google's VP of AI Innovation, put it: "We're unlocking Gemini's full potential while making privacy the default, not an afterthought."

Private AI Compute isn't just tech - it's a manifesto. In a landscape where AI's promise often trades your secrets for smarts, Google is betting on a third way: Power without prying. Your data stays yours; the insights? Limitless.