In a striking case of irony, consulting giant Deloitte Australia has agreed to refund the final payment of a $440,000 contract after admitting to using AI - specifically OpenAI’s GPT-4o - in a report riddled with errors. The report, commissioned by the Department of Employment and Workplace Relations (DEWR), was meant to review an IT system for automating fines on welfare recipients. Instead, it contained fabricated academic references, a made-up quote from a Federal Court ruling, and even a misspelled judge’s name.

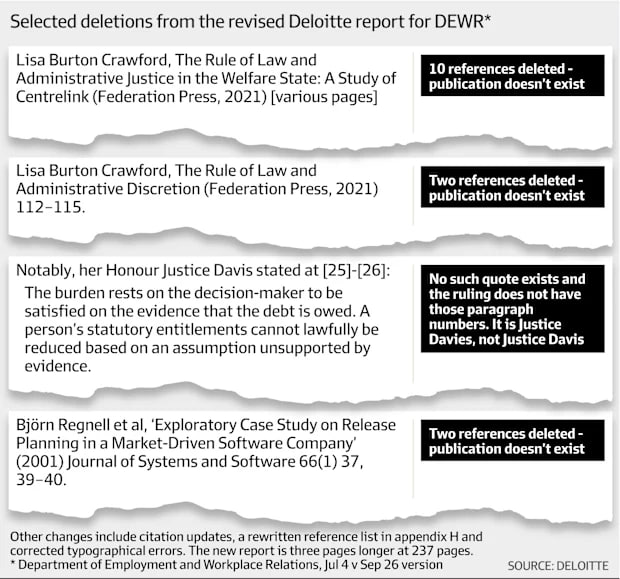

The saga began in July when the initial report was published. Within weeks, Sydney University academic Christopher Rudge flagged issues, suspecting generative AI “hallucinations.” The report cited three nonexistent academic sources, including works attributed to Professor Lisa Burton Crawford of Sydney University and a fictional report by Professor Björn Regnell from Lund University in Sweden.

Most egregiously, it included a fabricated quote from the landmark Deanna Amato v Commonwealth case, misnaming Justice Jennifer Davies as “Justice Davis” and inventing a passage from paragraphs 25 and 26: “The onus of proof lies with the decision-maker. A person’s legal rights cannot be curtailed based on an assumption unsupported by evidence.” The quote sounded compelling but was entirely fictional.

Deloitte initially stayed silent. A revised report was quietly uploaded on the Friday before a long weekend - coincidentally, Australia’s Labour Day - removing a dozen fake references. However, as Rudge noted, instead of replacing one hallucinated citation with a real one, Deloitte added “five, six, seven, eight new ones,” suggesting the original claim lacked any basis. “It’s highly likely they asked GPT to fabricate a justification, and it did,” Rudge remarked.

In the updated report’s methodology section, Deloitte admitted to using “a generative AI large language model (Azure OpenAI GPT-4o),” licensed by DEWR and hosted on the department’s Azure infrastructure, to address “gaps in traceability and documentation.”

Rudge called this a veiled admission of fault: “This isn’t a ‘strong hypothesis’ anymore. Deloitte has confessed, albeit buried in the methodology section, to using generative AI for a core analytical task without initial disclosure.”

The fallout has been sharp. Rudge argued the report’s recommendations are now unreliable: “When the foundation of a report is built on a flawed, undisclosed, and non-expert methodology, its recommendations cannot be trusted.” Labor Senator Deborah O’Neill, part of a probe into consulting firms, didn’t mince words: “Deloitte has an issue with human intelligence. It’d be funny if it wasn’t so sad. A partial refund feels like a partial apology for substandard work. Perhaps clients should just buy a ChatGPT subscription instead.”

DEWR insists that the “substance of the independent review remains unchanged” and that no recommendations were altered, but it dodged questions about whether AI caused the errors. For Deloitte, the embarrassment is acute. The firm, which generated $107 billion globally in 2024, increasingly markets AI consulting services while stressing the need for human oversight of AI outputs. Since 2021, Deloitte has secured nearly $25 million in contracts with DEWR alone. The department declined to comment on future engagements or whether it would seek a full refund.

This incident serves as a cautionary tale for the consulting industry. As firms lean into AI to boost efficiency, Deloitte’s misstep underscores the risks of over-reliance on tools like GPT-4o without rigorous human validation. For now, the refunded payment is a small price compared to the reputational hit - and a reminder that even AI consultants can fall prey to the technology’s pitfalls.