In a move that's exciting for AI enthusiasts and creators, ComfyUI has announced a significant update to its cloud service, effectively increasing the amount of content users can generate without raising subscription prices.

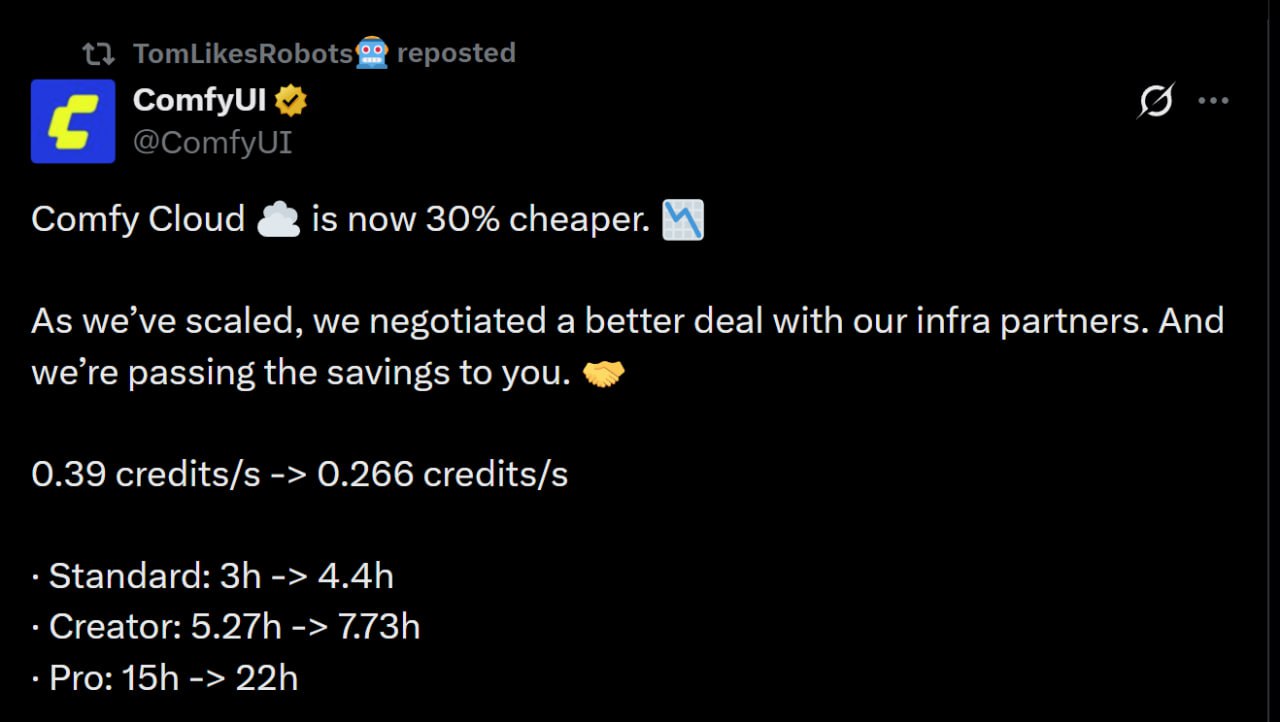

As revealed in a recent X post, the platform has negotiated better deals with infrastructure partners, passing on the savings to users by reducing the credit consumption rate by 30%.

This adjustment means more GPU time for the same cost, making Comfy Cloud an even more attractive option for those leveraging advanced AI workflows. But as with any update, there are nuances in how these credits are calculated, and we'll dive into the details, supplemented with the latest facts from official sources.

The Update: More Bang for Your Buck

ComfyUI's cloud platform, accessible at cloud.comfy.org, now operates at a reduced rate of 0.266 credits per second of GPU usage, down from the previous 0.39 credits per second.

This translates to substantial increases in effective generation time across all plans:

- Standard Plan: From 3 hours to 4.4 hours of GPU time.

- Creator Plan: From 5.27 hours to 7.73 hours.

- Pro Plan: From 15 hours to 22 hours.

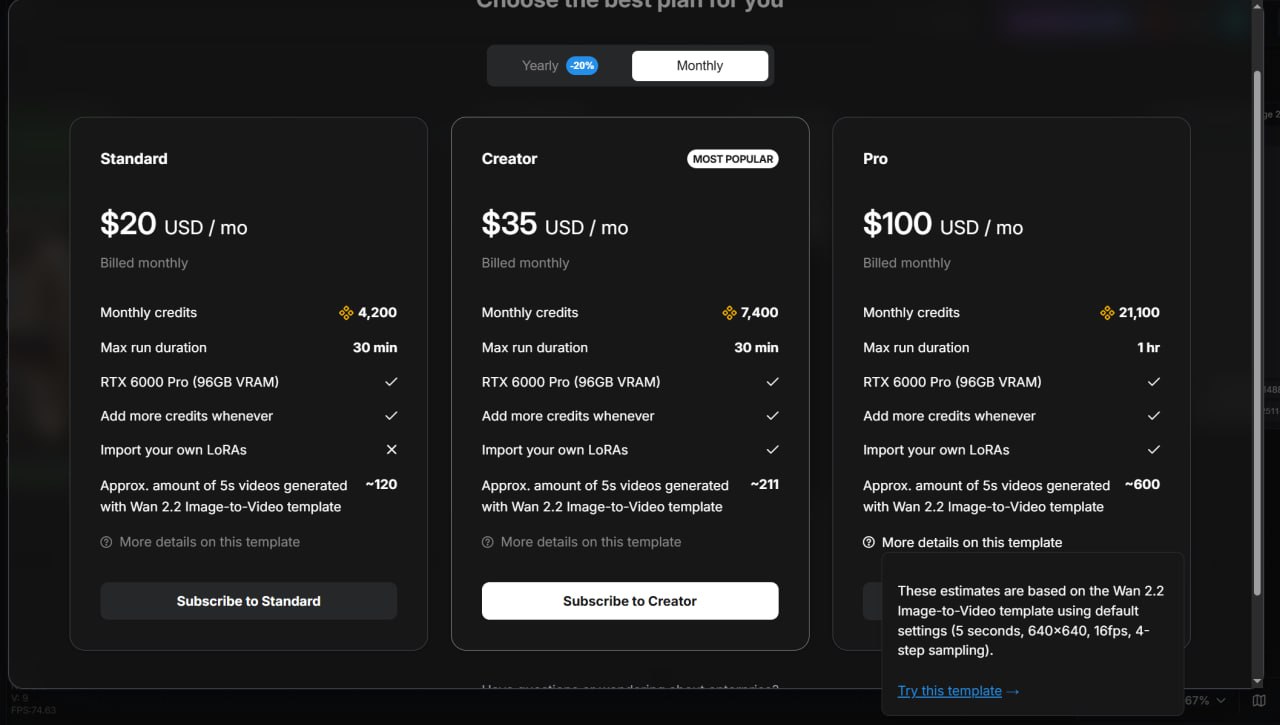

These figures are based on the unified credit system introduced in late 2025, in which monthly subscriptions grant a pool of credits (e.g., 4200 for Standard, with Founder's Edition subscribers receiving a larger pool of 5460 credits). The update, effective as of January 23, 2026, stems from a 30% drop in GPU costs, allowing ComfyUI to scale efficiently while keeping its high-performance hardware.
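The plan figures above follow from simple division: GPU seconds = credits ÷ credits per second. The short Python sketch below reproduces them. Only the Standard (4200) and Founder's Edition (5460) pools are stated here, so the Creator and Pro pools are back-derived from their old advertised hours at the old rate; treat those two values as assumptions rather than official numbers.

```python
# Per-second GPU credit rates quoted in the announcement.
OLD_RATE = 0.39   # credits per GPU-second before the update
NEW_RATE = 0.266  # credits per GPU-second after the update


def gpu_hours(credits: float, rate: float) -> float:
    """Convert a monthly credit pool into hours of GPU time at a given rate."""
    return credits / rate / 3600


def implied_credits(old_hours: float) -> float:
    """Back-derive a plan's credit pool from its old advertised hours (assumption)."""
    return old_hours * 3600 * OLD_RATE


plans = {
    "Standard": 4200,                   # stated in the article
    "Founder's Edition": 5460,          # stated in the article
    "Creator": implied_credits(5.27),   # derived, not an official figure
    "Pro": implied_credits(15),         # derived, not an official figure
}

for name, credits in plans.items():
    print(f"{name}: {credits:.0f} credits -> "
          f"{gpu_hours(credits, OLD_RATE):.2f} h before, "
          f"{gpu_hours(credits, NEW_RATE):.2f} h after")

# Relative drop in the per-second rate.
reduction = 1 - NEW_RATE / OLD_RATE
print(f"Rate reduction: {reduction:.1%}")
```

Run as-is, it shows Standard going from about 2.99 h to 4.39 h and Pro landing at roughly 22 h. It also shows that the two quoted rates imply a reduction of about 31.8%, which the announcement rounds to 30%.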

However, some users have noted discrepancies between the announced figures and those on the website. This could be due to ongoing site updates or variations in how generation time is estimated. Official documentation clarifies that credit usage is charged solely for the duration a workflow runs on the GPU, not idle time.

Estimates often assume baseline scenarios, such as generating at 640x640 resolution and 16 frames per second (fps) with a 4-step LoRA (Low-Rank Adaptation) model. In practice, more complex workflows, such as those with higher resolutions, more steps, or heavier models, consume credits faster and reduce the effective hours. Separately, the maximum run time per workflow has been raised to 1 hour (up from 30 minutes), but only on the Pro plan.
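Because only active GPU time is billed, the cost of a single run is its GPU seconds multiplied by the rate, so heavier workflows shrink the number of runs a plan covers in direct proportion. The sketch below assumes the 0.266 credits-per-second rate and the Standard pool of 4200 credits; the 30, 60, and 120 second run times are hypothetical placeholders, not measured figures.

```python
import math

NEW_RATE = 0.266  # credits per GPU-second after the update


def run_cost(gpu_seconds: float, rate: float = NEW_RATE) -> float:
    """Credits charged for one run; only active GPU time is billed."""
    return gpu_seconds * rate


def runs_per_pool(credits: float, gpu_seconds: float, rate: float = NEW_RATE) -> int:
    """Number of whole runs of a given GPU duration that fit in a credit pool."""
    return math.floor(credits / run_cost(gpu_seconds, rate))


# A maximum-length (1 hour) run, which only the Pro plan allows.
print(f"1-hour run: {run_cost(3600):.1f} credits")

# Hypothetical per-run GPU times; real values depend on resolution, steps, and model.
for seconds in (30, 60, 120):
    print(f"{seconds} s per run -> {runs_per_pool(4200, seconds)} runs on Standard")
```

Under these assumptions, a maximum-length 1-hour Pro run costs about 957.6 credits, and doubling a workflow's GPU time roughly halves the number of runs on the Standard pool, from 526 to 263 to 131.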

Power Under the Hood: Hardware and Integration Features

One of ComfyUI Cloud's standout advantages is its robust infrastructure. The platform runs on NVIDIA RTX PRO 6000 Blackwell GPUs with 96GB of VRAM, paired with 180GB of system RAM, and is roughly twice as fast as the A100 setups it used previously. This high-end hardware supports demanding AI tasks, making it ideal for users without access to powerful local machines.

Integration is another strong suit. New models often launch with ComfyUI-compatible workflows first, and API nodes are added swiftly. Users on Creator plans or higher can import models directly from Hugging Face and Civitai, with support for .safetensors files up to 100GB.

The process is straightforward: paste the model link into the Model Library, select the type and folder, and the import handles the rest, keeping models private and accessible under "My Models." Hugging Face support followed Civitai support shortly afterward, in late 2025, strengthening the all-in-one appeal.

Additionally, bring-your-own LoRAs became available in December 2025, letting users apply their own custom LoRAs without any local setup. For those prioritizing convenience, this ecosystem of zero-setup access, rapid updates, and seamless imports positions Comfy Cloud as a compelling choice over fragmented alternatives.

Exclusive Cloud Perk: The 'Simple' Interface

A key draw for cloud users is the exclusive "Simple" interface, currently available only in the online version. This streamlined UI simplifies workflow management, making it easier for beginners to create AI-generated content without diving into complex node-based editing.

Features include intuitive queue management, node organization, and quick model switching, reducing the learning curve associated with ComfyUI's full interface. While the desktop version offers feature parity in core functionality, the cloud's Simple mode enhances accessibility, particularly for on-the-go creators.

Flexible Alternatives: Pay-Per-Use for Light Users

Not everyone needs a full subscription. If you anticipate underutilizing monthly credits or already own a capable machine, ComfyUI offers pay-per-API-call options in the local version.

This à la carte approach lets users top up credits as needed, avoiding commitment while still accessing cloud-powered features via Partner Nodes. It's a cost-effective "honest work" solution for occasional users, echoing the meme often shared in AI communities: "It ain't much, but it's honest work."

Community feedback on platforms like Reddit shows mixed reactions to these pricing changes: some users praise the value, while others note that the price increases of 2025 have only now been offset by these reductions. As AI inference continues to dominate cloud spending, with its share projected to reach 55% by 2026, platforms like ComfyUI are adapting with more efficient models to keep prices accessible.

Looking Ahead: Is Comfy Cloud Right for You?

This update solidifies ComfyUI Cloud as a powerhouse for AI generation, blending affordability, performance, and convenience. Whether you're a pro churning out high-res videos or a hobbyist experimenting with models, the increased limits provide more creative freedom. However, always factor in your workflow complexity when estimating usage, since real-world generation time may differ from the baseline estimates.

For the latest, check cloud.comfy.org. As the AI landscape evolves, updates like this ensure open-source tools remain competitive, democratizing advanced creation for all.