Tencent has taken a major step forward in open-source image generation with the release of HunyuanImage 3.0-Instruct, a native multimodal model specifically tuned for highly accurate, instruction-driven image editing and generation.

Unlike traditional text-to-image models that treat prompts as simple directives, this version introduces genuine "thinking" capabilities — analyzing inputs deeply before generating or modifying visuals.

Released in late 2025 and fully open-sourced on Hugging Face and GitHub, HunyuanImage 3.0-Instruct builds on Tencent's Hunyuan-A13B foundation and, according to Tencent, is the largest open-source image generation Mixture-of-Experts (MoE) model to date.

Architecture: Massive Yet Efficient MoE Design

At its core lies a decoder-only Mixture-of-Experts (MoE) architecture with over 80 billion total parameters, of which only ~13 billion are active per token during inference (8 of 64 experts are activated). This sparsity gives the model high capacity while keeping per-token compute closer to that of a 13B dense model than an 80B one, making it one of the most capable yet inference-efficient open models available.
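
To make "8 out of 64 experts" concrete, here is a minimal sketch of generic top-k MoE routing in NumPy. It assumes a standard softmax router over the selected experts and uses tiny random matrices as stand-in "experts"; the names `route_tokens` and `moe_layer` and all sizes are illustrative, not HunyuanImage's actual implementation.

```python
import numpy as np

NUM_EXPERTS = 64  # total experts per MoE layer, per the article
TOP_K = 8         # experts activated per token, per the article


def route_tokens(hidden, gate_weights, top_k=TOP_K):
    """Select the top-k experts per token and renormalize their gate scores.

    hidden:       (num_tokens, d_model) token representations
    gate_weights: (d_model, num_experts) router matrix (random in this sketch)
    Returns (expert_ids, expert_probs), each of shape (num_tokens, top_k).
    """
    logits = hidden @ gate_weights
    # Indices of the k largest router logits per token (unordered among themselves).
    expert_ids = np.argpartition(logits, -top_k, axis=-1)[:, -top_k:]
    top_logits = np.take_along_axis(logits, expert_ids, axis=-1)
    # Softmax over the selected experts only, so each token's weights sum to 1.
    top_logits = top_logits - top_logits.max(axis=-1, keepdims=True)
    probs = np.exp(top_logits)
    probs /= probs.sum(axis=-1, keepdims=True)
    return expert_ids, probs


def moe_layer(hidden, gate_weights, expert_mats, top_k=TOP_K):
    """Weighted sum of the chosen experts' outputs; the other experts are never run."""
    ids, probs = route_tokens(hidden, gate_weights, top_k)
    out = np.zeros_like(hidden)
    for t in range(hidden.shape[0]):
        for slot in range(top_k):
            expert = expert_mats[ids[t, slot]]  # toy expert: one linear map
            out[t] += probs[t, slot] * (hidden[t] @ expert)
    return out


rng = np.random.default_rng(0)
d_model = 16  # toy hidden size, far smaller than the real model's
tokens = rng.standard_normal((4, d_model))
router = rng.standard_normal((d_model, NUM_EXPERTS))
experts = rng.standard_normal((NUM_EXPERTS, d_model, d_model)) * 0.1

ids, weights = route_tokens(tokens, router)
out = moe_layer(tokens, router, experts)
```

Note the arithmetic: 8 of 64 experts is 12.5% of the expert weights, while 13B of 80B is about 16%. The difference is presumably the shared, always-active parameters (such as attention and embeddings) that every token passes through regardless of routing.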

The model operates in a unified autoregressive framework that natively handles both multimodal understanding and generation. Instead of relying on separate diffusion transformers (DiT) or cascaded pipelines, it integrates text and image modalities directly, allowing seamless reasoning over interleaved text, dialogue, and visual inputs.
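
A minimal sketch of what "one unified sequence" means in practice, assuming a generic decoder-only setup: text and image segments are flattened into a single token stream and processed under one attention mask, with no hand-off to a separate image model. The `Segment` type, the toy token ids, and the plain causal mask are illustrative assumptions; HunyuanImage 3.0's actual tokenization and attention pattern over image tokens are described in its technical report and may differ.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Segment:
    modality: str  # "text" or "image"
    tokens: list   # toy ids standing in for encoded text or image content


def build_sequence(segments):
    """Flatten interleaved text/image segments into one stream with a modality tag per position."""
    ids, modality = [], []
    for seg in segments:
        ids.extend(seg.tokens)
        modality.extend([seg.modality] * len(seg.tokens))
    return ids, modality


def causal_mask(n):
    """Standard autoregressive mask: position i may attend to positions 0..i."""
    return np.tril(np.ones((n, n), dtype=bool))


# A toy editing turn: instruction, reference image, follow-up text.
segments = [
    Segment("text", [101, 102, 103]),
    Segment("image", [9001, 9002, 9003, 9004]),
    Segment("text", [104, 105]),
]
ids, modality = build_sequence(segments)
mask = causal_mask(len(ids))
```

Because every segment lives in the same stream, later text can condition on the image and vice versa, which is the property the unified design relies on.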

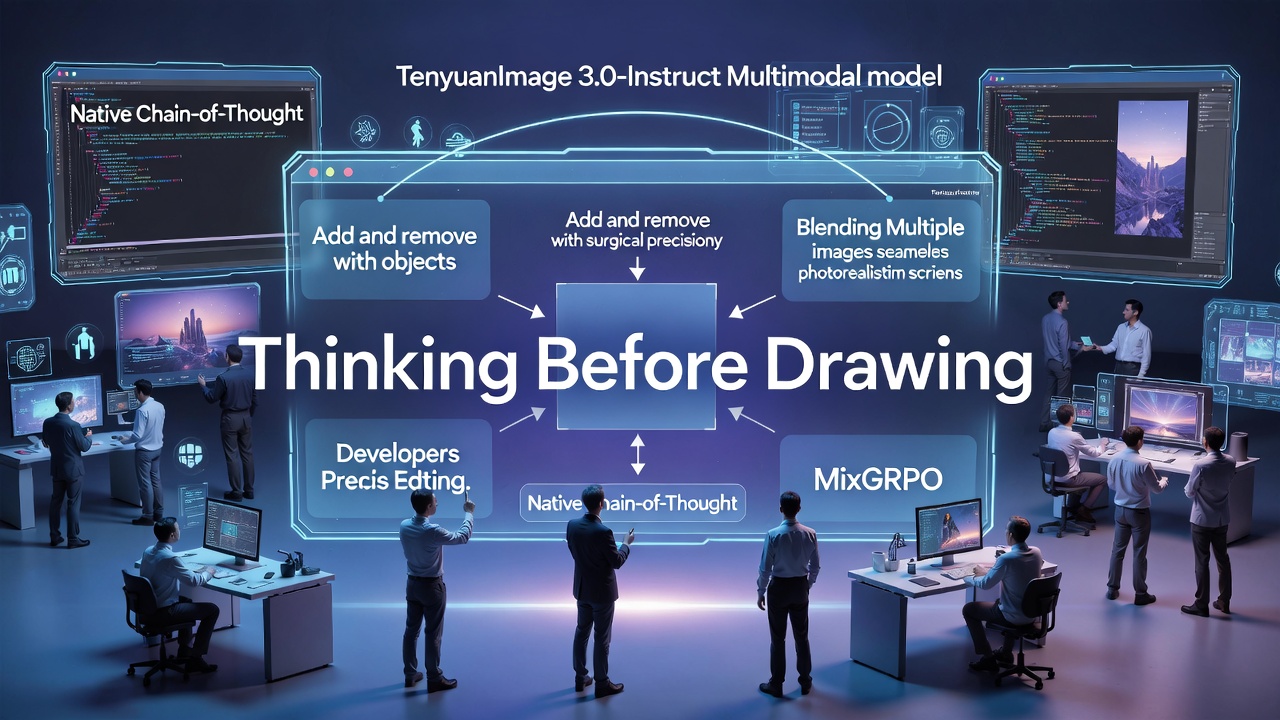

"Thinking" Before Drawing: Native Chain-of-Thought + MixGRPO

What truly sets HunyuanImage 3.0-Instruct apart is its built-in reasoning process. The model employs a native Chain-of-Thought (CoT) schema during inference, where it internally "thinks through" the user's intent step-by-step before producing pixels.

This is reinforced by MixGRPO, a custom online reinforcement learning algorithm that optimizes for aesthetics, realism, alignment, and reduced artifacts.
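
MixGRPO, as its name suggests, belongs to the GRPO family of methods, whose core idea is to score several samples for the same prompt and reward each one relative to its group. The sketch below shows only that shared ingredient, assuming the four objectives above are folded into one scalar reward with hypothetical weights; MixGRPO's own modifications are not shown.

```python
import numpy as np

# Hypothetical weights: the article lists these objectives but not how they are combined.
REWARD_WEIGHTS = {"aesthetics": 0.3, "realism": 0.3, "alignment": 0.3, "artifact_free": 0.1}


def combined_reward(scores):
    """Weighted sum of per-objective scores in [0, 1] for one generated image."""
    return sum(weight * scores[name] for name, weight in REWARD_WEIGHTS.items())


def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantages: each sample's reward relative to its group's mean and std."""
    r = np.asarray(rewards, dtype=float)
    return (r - r.mean()) / (r.std() + eps)


# Four hypothetical samples generated for the same prompt.
group = [
    {"aesthetics": 0.9, "realism": 0.8, "alignment": 0.9, "artifact_free": 1.0},
    {"aesthetics": 0.6, "realism": 0.7, "alignment": 0.5, "artifact_free": 0.8},
    {"aesthetics": 0.7, "realism": 0.9, "alignment": 0.8, "artifact_free": 0.9},
    {"aesthetics": 0.4, "realism": 0.5, "alignment": 0.6, "artifact_free": 0.3},
]
rewards = [combined_reward(s) for s in group]
advantages = group_relative_advantages(rewards)
```

Samples that beat their siblings receive positive advantages and are reinforced, while weaker ones are pushed down, without training a separate value network.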

During post-training, the model learns to translate abstract instructions into detailed visual specifications via explicit reasoning traces, leading to:

- Stronger adherence to user intent;

- Better preservation of non-edited regions;

- Fewer illogical artifacts or structural distortions;

- Outputs that align more closely with human aesthetic preferences.

The result is a system that doesn't just follow prompts—it interprets, plans, and executes with deliberation.

Precision Editing & Multi-Image Fusion: Where It Shines

HunyuanImage 3.0-Instruct excels at surgical image editing:

- Add, remove, or replace objects while keeping the rest of the scene perfectly intact;

- Modify fine details (clothing, lighting, expressions, backgrounds) with minimal leakage;

- Handle complex instructions like "restore this old photo while making the person look 20 years younger and add modern clothing".
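
One simple way to check the "keep the rest of the scene intact" claim on your own edits is to measure how much the image changes outside the region the instruction targets. The sketch below is a hypothetical pixel-level proxy, not a metric from Tencent's report; it assumes you have an edit mask, and a perceptual metric could replace the raw pixel difference.

```python
import numpy as np


def edit_leakage(original, edited, edit_mask):
    """Mean absolute pixel change outside the region the instruction targets.

    original, edited: (H, W, C) float arrays with values in [0, 1]
    edit_mask:        (H, W) bool array, True where changes are intended
    Returns 0.0 when every non-edited pixel is preserved exactly.
    """
    if original.shape != edited.shape:
        raise ValueError("images must have the same shape")
    keep = ~edit_mask
    if not keep.any():
        return 0.0  # the whole image was meant to change
    diff = np.abs(original - edited)[keep]  # shape: (kept_pixels, C)
    return float(diff.mean())


original = np.zeros((8, 8, 3))
mask = np.zeros((8, 8), dtype=bool)
mask[2:6, 2:6] = True  # the 4x4 region the user asked to change

clean_edit = original.copy()
clean_edit[mask] = 1.0  # change only the masked region

leaky_edit = clean_edit.copy()
leaky_edit[0, 0] = 1.0  # one stray pixel outside the mask also changes

clean_score = edit_leakage(original, clean_edit, mask)
leaky_score = edit_leakage(original, leaky_edit, mask)
```

A clean edit scores zero no matter how much the masked region changes; any drift in the background raises the score.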

A standout feature is **advanced multi-image fusion**: the model can extract and blend elements from several reference images into a coherent, photorealistic scene — as if the composition had always existed that way. This enables powerful creative workflows such as portrait collages, style transfers, or hybrid scene construction.

The Instruct variant is particularly tuned for these editing-heavy use cases, outperforming the base model on tasks requiring deep image understanding and controlled modification.

SOTA Performance Claims & Benchmarks

According to Tencent's technical report (arXiv:2509.23951) and community evaluations:

- HunyuanImage 3.0 achieves text-image alignment and visual quality comparable to — or surpassing — leading closed-source models in human blind tests (e.g., GSB evaluations).

- It ranks highly on leaderboards like LMArena for text-to-image generation, occasionally topping open-source categories.

- In structured editing benchmarks, it demonstrates strong semantic consistency and realism, often rivaling established systems such as Midjourney, FLUX, and SD3 variants (a mix of proprietary and open-weight models) in controlled modification tasks.
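
GSB stands for Good/Same/Bad: raters compare paired outputs from two models and mark which is better or whether they are equal. A common way to summarize such votes is the net preference (G - B) / (G + S + B), sketched below; treat this formula as an assumption and check the technical report for the exact metric Tencent uses. The vote counts are hypothetical.

```python
def gsb_score(good, same, bad):
    """Net preference from a Good/Same/Bad blind comparison against a baseline.

    good: raters preferring the evaluated model
    same: raters judging both outputs equal
    bad:  raters preferring the baseline
    Returns a value in [-1, 1]; positive means the evaluated model won overall.
    """
    total = good + same + bad
    if total <= 0:
        raise ValueError("need at least one rating")
    return (good - bad) / total


# Hypothetical vote counts, not figures from the technical report.
score = gsb_score(good=420, same=380, bad=200)
```

Ties count in the denominator, so a model that is merely "as good as" the baseline on most prompts lands near zero rather than looking like a clear winner.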

While not every comparison declares outright dominance, the model consistently places among the very top open-source contenders, especially in instruction-following and photorealism.

Ecosystem Ambitions & Accessibility

Tencent is clearly building toward a broader multimodal ecosystem. By open-sourcing weights, inference code, and a technical report under the Hunyuan Community License, they invite developers to build applications, fine-tune variants, and integrate the model into creative pipelines.

You can try HunyuanImage 3.0-Instruct directly via the official demo at:

https://hunyuan.tencent.com/chat/HunyuanDefault?from=modelSquare&modelId=Hunyuan-Image-3.0-Instruct

For local or API use:

- Hugging Face: tencent/HunyuanImage-3.0-Instruct;

- GitHub repo: https://github.com/Tencent-Hunyuan/HunyuanImage-3.0.

(Note: Running the full 80B model locally requires significant VRAM—multi-GPU setups with ≥3×80GB cards are recommended, though quantized versions and optimized inference like vLLM/FlashInfer help.)
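
The multi-GPU requirement follows from simple arithmetic. MoE sparsity reduces compute per token, but all experts must still be resident in memory, so memory scales with the full 80B parameters rather than the ~13B active ones. A back-of-the-envelope sketch, assuming 2 bytes per parameter for bf16 weights and a hypothetical 20% headroom for activations and caches:

```python
import math


def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params, bytes_per_param, gpu_gb=80, headroom=1.2):
    """Rough GPU count; headroom=1.2 is an assumed 20% extra for activations and caches."""
    needed_gb = weight_memory_gb(num_params, bytes_per_param) * headroom
    return math.ceil(needed_gb / gpu_gb)


TOTAL_PARAMS = 80e9  # every expert must be loaded, not just the ~13B active ones

for label, nbytes in [("bf16", 2), ("int8", 1), ("4-bit", 0.5)]:
    gb = weight_memory_gb(TOTAL_PARAMS, nbytes)
    print(f"{label}: {gb:.0f} GB of weights, ~{min_gpus(TOTAL_PARAMS, nbytes)} x 80GB GPUs")
```

For bf16, the weights alone come to 160 GB, and with the assumed headroom the estimate lands at three 80GB cards, consistent with the recommendation above. 8-bit and 4-bit quantization cut the weight footprint to roughly 80 GB and 40 GB respectively, which is why quantized versions make local use more practical.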

The Bigger Picture

HunyuanImage 3.0-Instruct represents a shift from prompt-and-generate tools toward **intelligent, reasoning-driven visual creation**. By making the model "think" natively about edits and compositions, Tencent is pushing the frontier of controllable, high-fidelity image manipulation in open-source AI.

Whether you're a designer seeking pixel-perfect edits, a researcher exploring multimodal reasoning, or a creator experimenting with fusion, this release raises the bar for what's possible with openly available foundation models. The era of truly thoughtful image AI is accelerating — and Tencent just threw down a very large gauntlet.