In the high-stakes world of frontier AI, two titans, Anthropic and OpenAI, are charting vastly different courses. While OpenAI has captured the consumer imagination with its massive scale and high-profile partnerships, Anthropic is quietly pursuing a strategy centered on efficiency and economic sustainability. This focus on doing more with less computing power is creating a substantial, and potentially decisive, long-term competitive edge.

The core of Anthropic's approach is a deliberate choice to prioritize computational efficiency over sheer scale, a departure from the prevailing narrative that bigger models are always better. The contrast shows up starkly in the two companies' projected compute spending and in their financial targets.

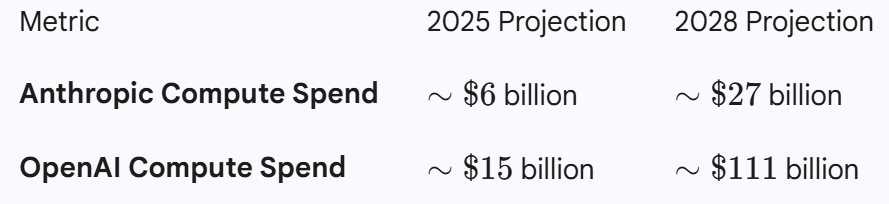

The Compute Cost Divide

Anthropic plans to spend significantly less on infrastructure than its rival, which implies a much lower cost per token for its models. That cost efficiency translates directly into a more sustainable business model and leaves room for aggressive pricing.

The predicted fourfold difference in compute costs by 2028 points to a fundamental difference in architecture or training methodology.

Financial Trajectories: Profitability Race

This operational efficiency gives Anthropic a major lead in the race to profitability. While both companies have ambitious revenue goals, their paths to positive cash flow diverge significantly.

- Anthropic is expected to achieve positive cash flow by 2027, a crucial milestone for sustainability.

- OpenAI, despite a higher projected revenue of $\sim\$100$ billion by 2028 (compared to Anthropic's target of $\sim\$70$ billion), is not expected to reach profitability until 2029.

Fact Check: Recent reports corroborate this, suggesting Anthropic is indeed on a faster path to break-even, potentially two years ahead of OpenAI, which is projected to incur large operating losses due to soaring compute costs.

How Anthropic Achieves Technical Efficiency

Anthropic’s cost advantage is rooted in a strategic, multi-platform compute architecture:

- Diversified Infrastructure: Anthropic avoids vendor lock-in by distributing its workloads across several hardware platforms, including Google TPUs, Nvidia GPUs, and Amazon's Trainium chips on AWS. This allows the company to optimize for cost and performance on a per-workload basis.

- The TPU Advantage: A recent agreement with Google gives Anthropic access to up to one million Tensor Processing Units (TPUs) and more than 1 GW of power capacity by 2026. Google's TPUs, particularly the next-generation "Ironwood" chips, are custom-designed for the matrix multiplications that dominate AI workloads, offering superior price-performance and energy efficiency compared to general-purpose GPUs (which power much of the competition). At this scale, and provided the hardware is kept at high utilization, the cost of processing a single token falls significantly.
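The link between utilization and per-token cost can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name, the hourly accelerator cost, and the peak throughput are hypothetical placeholders, not figures from Google, Anthropic, or any vendor. It assumes the cost per token is simply the hourly hardware cost divided by the tokens actually processed in that hour.

```python
def cost_per_million_tokens(hourly_cost_usd: float,
                            peak_tokens_per_second: float,
                            utilization: float) -> float:
    """Return the USD cost of processing one million tokens on one accelerator.

    Assumes cost is spread evenly over the tokens actually processed,
    i.e. peak throughput scaled by the fraction of time the chip is busy.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    tokens_per_hour = peak_tokens_per_second * 3600 * utilization
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical placeholder values, chosen only to show the shape of the curve.
HOURLY_COST_USD = 2.0
PEAK_TOKENS_PER_SECOND = 1000.0

for utilization in (0.25, 0.5, 1.0):
    cost = cost_per_million_tokens(HOURLY_COST_USD, PEAK_TOKENS_PER_SECOND, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.2f} per million tokens")
```

Under this simple model, halving utilization doubles the cost per token, which is why the efficiency argument depends on keeping the hardware busy.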

The Monetization Model: Enterprise-First

A critical financial divergence is their respective monetization strategies.

- OpenAI has invested billions in infrastructure to serve free ChatGPT users. While this built brand recognition, the sheer volume of free traffic creates an immense, continuous operational expense: the "consumer-scale and high-cost dilemma."

- Anthropic operates on an "enterprise-first" model, deriving about 80% of its revenue from its paid API and corporate clients. This approach eliminates the heavy, loss-making compute burden of catering to a massive free user base, leading to a much stronger gross margin and a more predictable, sustainable revenue stream.
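The margin effect described in the two points above can be sketched as simple arithmetic. The example is a minimal illustration under stated assumptions: the function name and every dollar amount are hypothetical and do not come from either company's financials. It assumes gross margin is revenue minus the compute cost of serving all users, divided by revenue, and that free users add compute cost but no revenue.

```python
def gross_margin(paid_revenue_usd: float,
                 paid_compute_usd: float,
                 free_compute_usd: float = 0.0) -> float:
    """Return gross margin as a fraction of revenue.

    Free-tier usage adds to the cost of serving but contributes no revenue.
    """
    if paid_revenue_usd <= 0:
        raise ValueError("paid_revenue_usd must be positive")
    total_compute_usd = paid_compute_usd + free_compute_usd
    return (paid_revenue_usd - total_compute_usd) / paid_revenue_usd


# Hypothetical figures in arbitrary units, for illustration only.
enterprise_first = gross_margin(paid_revenue_usd=100.0, paid_compute_usd=40.0)
consumer_scale = gross_margin(paid_revenue_usd=100.0, paid_compute_usd=40.0,
                              free_compute_usd=30.0)

print(f"enterprise-first margin: {enterprise_first:.0%}")
print(f"consumer-scale margin:   {consumer_scale:.0%}")
```

With identical paid revenue and paid compute, the only difference between the two cases is the free-tier compute bill, which comes straight out of the margin.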

"Anthropic represents a 'sustainable AI business model' focused on stable cash flow, while OpenAI faces the 'consumer-scale and high-cost dilemma.'"

In conclusion, Anthropic is building a less-hyped but considerably more resilient and economical growth model. By leveraging technical efficiency and prioritizing a paying enterprise customer base, the company is positioning itself not just as an innovative competitor, but as the benchmark for a sustainable, profitable AI frontier business. The race may not be won by the biggest models, but by the most efficient ones.