The Allen Institute for AI (Ai2) has launched SERA (Soft-verified Efficient Repository Agents), a family of open-source AI coding agents designed to work on real-world codebases at low training cost and to adapt to private repositories.

These models, ranging from 8B to 32B parameters (with a recent addition of SERA-14B), enable tasks like code generation, review, debugging, and explanation, all while being fine-tunable on private repositories.

Built on the Qwen3 backbone, SERA stands out for its low training costs, which start at about $400, and for its ability to match or outperform larger models once it is specialized to a particular repository.

Key Innovations in SERA

SERA addresses key pain points in existing coding agents: high costs, lack of adaptability to private code, and the need for complex infrastructure.

Its standout features include:

- Repository Specialization: Users can fine-tune SERA on private data, such as internal APIs or custom conventions, allowing smaller models to match or exceed larger "teacher" models like GLM-4.5-Air. For example, after training on 8,000 synthetic samples costing $1,300, SERA-32B surpassed GLM-4.5-Air on benchmarks for Django and SymPy repositories.

- Soft-Verified Generation (SVG): This method uses partially correct code patches for training, eliminating the need for full testing setups and scaling as effectively as "hard-verified" data.

- Bug-Type Menu: Drawing on a menu of 51 common bug patterns, this generates diverse training prompts, yielding thousands of agentic trajectories even from repositories with relatively few functions.

- High Workflow Fidelity: Prioritizes simulating real developer processes over perfect code accuracy, making it ideal for practical use.

- Inference Speed: Optimized for high throughput, achieving up to 8,600 tokens per second on advanced NVIDIA hardware.

These features make SERA accessible for small teams, requiring only standard supervised fine-tuning (SFT) without custom reinforcement learning (RL) tools.
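
The bug-type menu and soft verification described above can be pictured with a small sketch. This is an illustration under stated assumptions, not Ai2's implementation: the bug names, the prompt wording, the line-overlap score, and the 0.5 threshold are all hypothetical, since the article only says that partially correct patches are accepted without a full test setup.

```python
from itertools import product

# Hypothetical stand-ins; the article says the real menu holds 51 bug patterns.
BUG_TYPES = ["off-by-one", "wrong default argument", "missing None check"]


def build_prompts(functions, bug_types):
    """Pair every function with every bug type: one training prompt per pair."""
    return [
        f"Investigate a possible '{bug}' bug in `{func}` and propose a patch."
        for func, bug in product(functions, bug_types)
    ]


def changed_lines(patch):
    """Collect added/removed lines of a unified diff, skipping file headers.

    Simplification: a changed line whose own text starts with '--' or '++'
    would be mistaken for a header and ignored.
    """
    return {
        line
        for line in patch.splitlines()
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    }


def soft_score(reference_patch, candidate_patch):
    """Fraction of the reference patch's changed lines found in the candidate."""
    reference = changed_lines(reference_patch)
    if not reference:
        return 0.0
    return len(reference & changed_lines(candidate_patch)) / len(reference)


def keep_for_training(reference_patch, candidate_patch, threshold=0.5):
    """Soft check (assumed): accept partial matches instead of running tests."""
    return soft_score(reference_patch, candidate_patch) >= threshold
```

With this pairing, a repository exposing only 200 functions would already produce 200 × 51 = 10,200 prompts from the full menu, which is the sense in which a small codebase can yield thousands of trajectories.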

Benchmark Performance

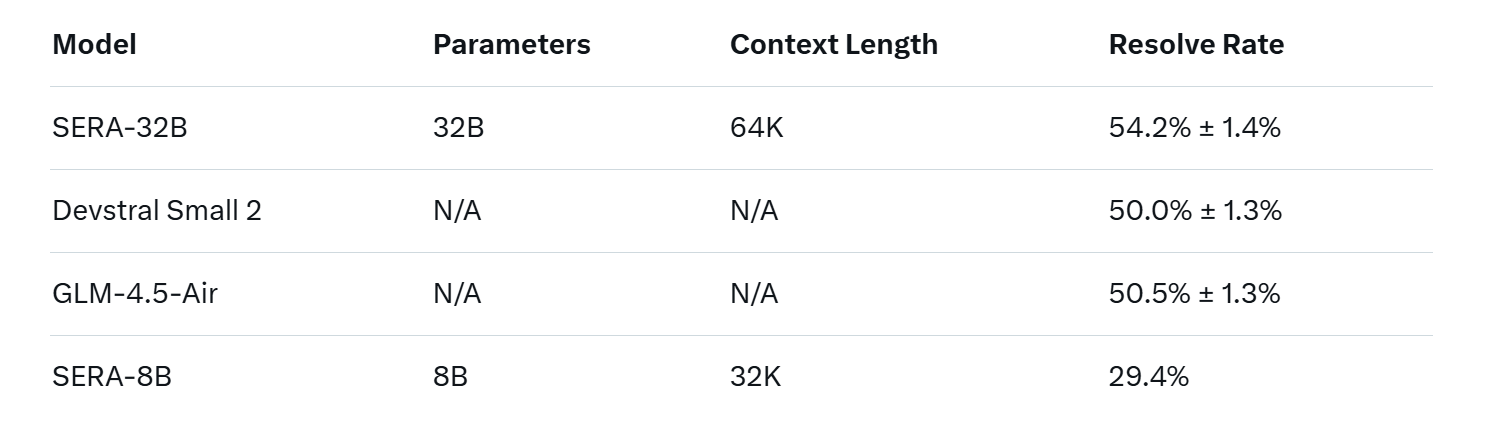

SERA is evaluated on SWE-Bench Verified, a widely used benchmark for coding agents. The flagship SERA-32B resolves 54.2% of tasks at 64K context, outperforming prior open-source models like Qwen3-Coder and edging past open-weight models such as Devstral Small 2 (50.0%) and GLM-4.5-Air (50.5%). The smaller SERA-8B achieves 29.4%, competitive in its weight class.

Here's a quick comparison on SWE-Bench Verified (resolve rates):

- SERA-32B: 54.2%
- GLM-4.5-Air: 50.5%
- Devstral Small 2: 50.0%
- SERA-8B: 29.4%

In repository-specific tests, SERA-32B hit 52.23% on Django and 51.11% on SymPy, edging out GLM-4.5-Air.

Training Efficiency: The Power of Synthetic Data

Ai2's breakthrough lies in cost-effective training using synthetic data generated via SVG and the bug-type menu. Reproducing top open-source performance costs around $400, which is about 57 times cheaper than SWE-smith and 26 times cheaper than SkyRL.

Scaling to rival industry leaders runs up to $12,000, still a fraction of traditional methods. Training occurs on modest hardware, like 2-16 NVIDIA GPUs, making it feasible for broader adoption.
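
To put the ratios above in dollar terms, here is a back-of-the-envelope calculation. The implied costs for SWE-smith and SkyRL are derived from the article's "times cheaper" figures, not reported numbers, so treat them as rough estimates.

```python
# Figures quoted in the article.
SERA_BASE_COST_USD = 400        # cost to reproduce top open-source performance
SERA_SCALED_COST_USD = 12_000   # upper bound quoted for rivaling industry leaders
CHEAPER_BY = {"SWE-smith": 57, "SkyRL": 26}  # "times cheaper" ratios


def implied_cost(base_cost, ratio):
    """Cost of the comparison method implied by a 'ratio times cheaper' claim."""
    return base_cost * ratio


implied = {
    name: implied_cost(SERA_BASE_COST_USD, ratio)
    for name, ratio in CHEAPER_BY.items()
}
scale_up = SERA_SCALED_COST_USD / SERA_BASE_COST_USD

for name, cost in implied.items():
    print(f"{name}: roughly ${cost:,}")
print(f"Scaled-up run: {scale_up:.0f}x the base budget")
# prints:
# SWE-smith: roughly $22,800
# SkyRL: roughly $10,400
# Scaled-up run: 30x the base budget
```

Even the $12,000 scaled-up run therefore sits in the same range as the implied cost of a single baseline reproduction with the earlier methods.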

Open-Source Release and Ease of Use

Everything is fully open-source on Hugging Face, including models, datasets (now in a model-agnostic format with verification metadata), training recipes, and inference code. Scripts for integration with Claude Code simplify setup: an inference server can be launched with just two lines of code. This lowers the barrier to building coding agents customized to a team's own codebase.

Looking Ahead

SERA represents a shift toward accessible, efficient AI for software engineering, lowering barriers for research and industry. By making high-performance agents reproducible on a budget, Ai2 fosters innovation in coding AI, potentially accelerating progress toward more advanced systems. As the community adopts these tools, we may see a surge in specialized agents tailored to diverse codebases worldwide.