In the fast-evolving landscape of software engineering, AI coding assistants have emerged as game-changers, promising to supercharge developer productivity. Tools like GitHub Copilot, Cursor, and specialized platforms such as Greptile and CodeRabbit are now staples in many dev teams, automating everything from code generation to reviews.

Yet, as adoption surges — with over 70% of developers using AI tools by late 2025 according to industry surveys — the trade-offs are becoming clearer. While these systems enable teams to churn out more code at unprecedented speeds, they also introduce a higher volume of bugs, raising questions about long-term code quality and maintainability.

Drawing from recent reports, this article explores the dual nature of AI in coding: a booster for output, but a potential hazard for reliability.

Productivity Surge: More Code, Bigger Pull Requests

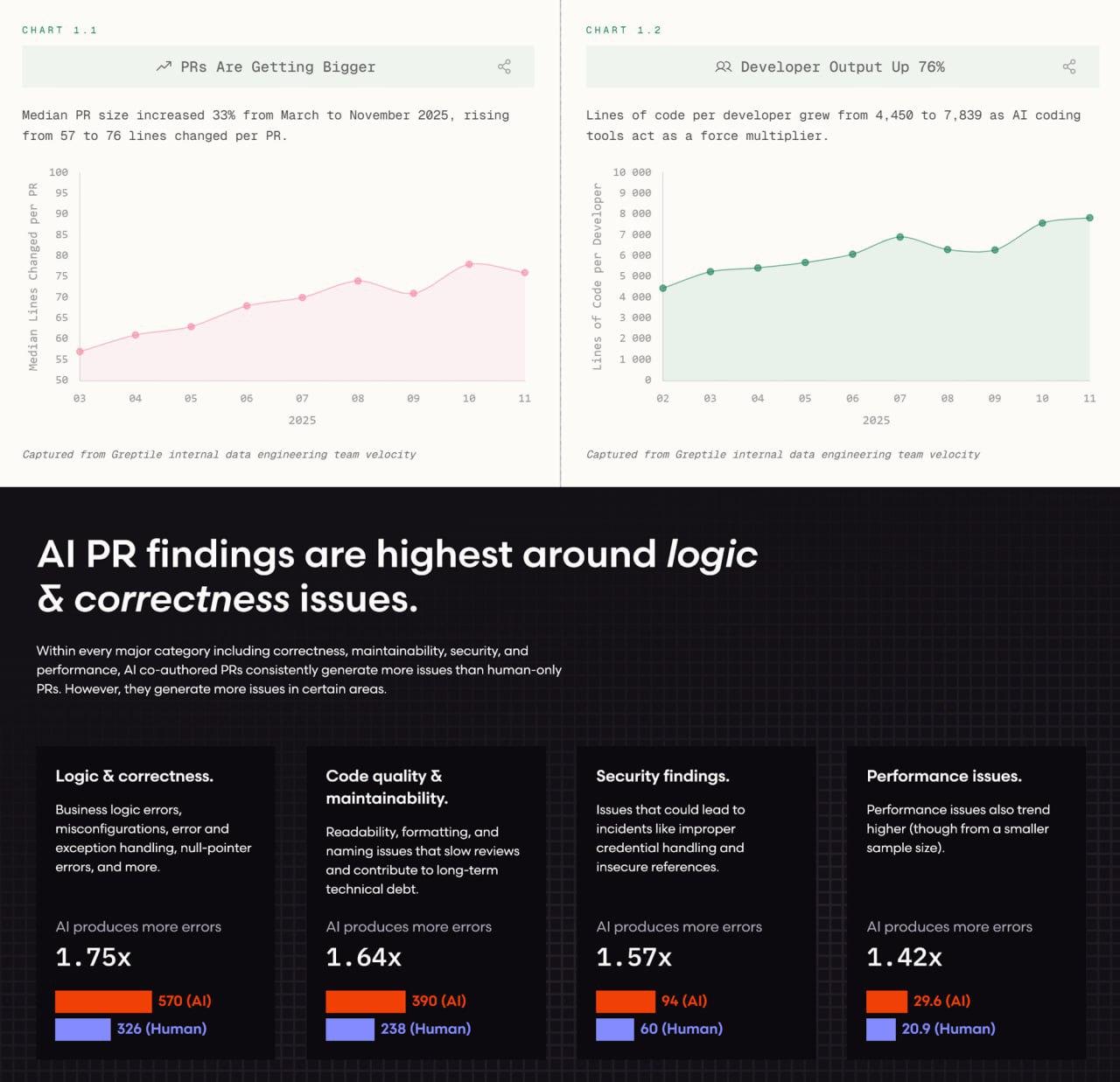

One of the most touted benefits of AI coding tools is their ability to amplify developer output. According to Greptile's "State of AI Coding 2025" report, which analyzed internal engineering metrics from March to November 2025, the median pull request (PR) size grew by 33%, jumping from 57 to 76 lines changed per PR.

This isn't just about quantity: larger PRs may also reflect developers tackling more complex changes, with AI acting as a "force multiplier." Overall, lines of code per developer skyrocketed by 76%, from 4,450 to 7,839.

Medium-sized teams (6-15 developers) saw even steeper gains, with an 89% increase in output, reaching 13,227 lines per developer. Additionally, the median lines changed per file rose by 20%, from 18 to 22, indicating denser, more comprehensive modifications.
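
To make the arithmetic behind these percentages explicit, the short Python sketch below recomputes relative change, (after - before) / before, from the before/after values quoted above. The dictionary and function names are illustrative only. The first two figures reproduce the reported 33% and 76%. The rounded medians of 18 and 22 lines per file work out to about 22%, so the report's 20% figure may have been computed from unrounded values.

```python
# Before/after values as quoted from Greptile's report (rounded as published).
REPORTED = {
    "median PR size (lines changed)": (57, 76),
    "lines of code per developer": (4_450, 7_839),
    "median lines changed per file": (18, 22),
}


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100


for label, (before, after) in REPORTED.items():
    # Signed percentage with one decimal place, e.g. "+33.3%".
    print(f"{label}: {before} -> {after} = {percent_change(before, after):+.1f}%")
```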

For context, Greptile, which raised $25 million in September 2025 to expand its AI code validation capabilities, says it has reviewed over 500,000 lines of code for companies such as Substack and Brex and kept more than 180,000 bugs from reaching production.

The tool's graph-based approach to understanding entire codebases enables faster reviews (reportedly up to 11 times quicker than competitors) while adapting to team-specific coding standards. Independent benchmarks from September 2025 showed Greptile catching 82% of test issues, compared with 44% for CodeRabbit.

The Bug Boom: Quality Takes a Hit

However, this productivity boom comes with a caveat: AI-generated code tends to contain more errors. CodeRabbit's "State of AI vs Human Code Generation Report," released in December 2025, analyzed 470 open-source GitHub pull requests (320 AI-co-authored and 150 human-only) using a structured issue taxonomy.

The findings are stark: AI PRs contained 1.7 times more issues overall (10.83 per PR versus 6.45 for humans), with more high-issue outliers that burden reviewers. Critical and major findings were 1.4 to 1.7 times more prevalent in AI-assisted work.

AI struggles most in nuanced areas. Logic and correctness issues, including business logic flaws, incorrect dependencies, and misconfigurations, were 75% more common.

Readability problems were more than three times as common, as AI often ignores local naming conventions and structural patterns.

Error handling gaps, such as missing null checks or guardrails, doubled. Security issues were up to 2.74 times more common, particularly in code that handles sensitive data such as passwords.
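
The report does not publish the offending code, so what follows is a generic illustration rather than anything taken from CodeRabbit's data. One widely used way to avoid mishandling passwords is to store a salted, deliberately slow hash instead of the plaintext and to compare hashes in constant time. The sketch uses only Python's standard library (`hashlib.pbkdf2_hmac`, `hmac.compare_digest`, `secrets`). The function names and the iteration count are assumptions chosen for illustration.

```python
import hashlib
import hmac
import secrets

# Assumed work factor for PBKDF2-HMAC-SHA256; tune it to current guidance and hardware.
PBKDF2_ITERATIONS = 600_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest); only these are stored, never the plaintext password."""
    salt = secrets.token_bytes(16)  # fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

A plain `==` comparison of digests, or storing the password itself, is the kind of shortcut a reviewer would flag here.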

Performance issues, though less frequent, were heavily skewed toward AI, with excessive I/O operations eight times more common due to simplistic patterns.
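
A common shape of "excessive I/O" is issuing one query or request per item inside a loop instead of fetching everything in a single batch. The sketch below is a generic illustration, not code from the report. `CountingStore` is a hypothetical in-memory stand-in for a database or remote API, and it counts round trips so the difference between the two patterns is visible.

```python
from dataclasses import dataclass


@dataclass
class CountingStore:
    """Hypothetical stand-in for a database or remote API that counts round trips."""
    rows: dict[int, str]
    round_trips: int = 0

    def get(self, key: int) -> str:
        self.round_trips += 1  # one round trip per key
        return self.rows[key]

    def get_many(self, keys: list[int]) -> dict[int, str]:
        self.round_trips += 1  # one round trip for the whole batch
        return {k: self.rows[k] for k in keys}


def load_names_naive(store: CountingStore, ids: list[int]) -> list[str]:
    # Per-item pattern: N round trips for N ids.
    return [store.get(i) for i in ids]


def load_names_batched(store: CountingStore, ids: list[int]) -> list[str]:
    # Batched pattern: a single round trip, then in-memory lookups.
    found = store.get_many(ids)
    return [found[i] for i in ids]


rows = {i: f"user-{i}" for i in range(100)}
naive, batched = CountingStore(rows), CountingStore(rows)
ids = list(range(100))
assert load_names_naive(naive, ids) == load_names_batched(batched, ids)
print(naive.round_trips, batched.round_trips)  # prints: 100 1
```

Both functions return the same names. Batching trades one larger request for far fewer round trips. For very large id lists, the batch would typically be split into bounded chunks.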

Concurrency and dependency problems also doubled, while formatting problems were 2.66 times as common and naming inconsistencies nearly twice as common.

Interestingly, the report does not highlight any area where AI clearly outperforms humans on error rates, but it notes that no issue types are unique to AI; most are simply more frequent and more variable. This variability underscores the need for robust review processes. CodeRabbit positions its own product as a solution, saying it standardizes quality and reduces reviewer fatigue, which has been linked to missed bugs in traditional workflows.

Striking a Balance: Human Oversight in the AI Era

The competition between tools like Greptile and CodeRabbit highlights an evolving ecosystem. Greptile claims to catch over 50% more bugs than CodeRabbit in head-to-head tests on 50 open-source PRs, emphasizing concise, high-signal reviews. Meanwhile, CodeRabbit focuses on integrating into IDEs, CLIs, and PR workflows to catch issues early. Broader roundups of 2025's top AI developer tools, which also feature Sourcegraph Cody and DeepCode AI, credit such tools with speeding up debugging and error resolution, saving hours of trial-and-error fixes.

Ultimately, AI's role in coding isn't about replacement but augmentation — with caveats. As Greptile's CEO Daksh Gupta noted, the explosion in code volume from AI generators like Claude Code necessitates advanced validation layers to prevent bottlenecks.

Developers must implement guardrails: providing context, enforcing policies, and leveraging AI-aware reviews.
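
As one illustration of "enforcing policies," a team could encode review requirements as a small check that runs in CI. The sketch below is entirely hypothetical: the `PullRequest` fields, the 400-line threshold and the reviewer counts are assumptions for illustration, not recommendations from either report. It simply ties extra human review to the two risk factors discussed above, larger AI-assisted diffs and security-sensitive code.

```python
from dataclasses import dataclass

# Hypothetical threshold; the article does not prescribe any specific number.
MAX_LINES_FOR_SINGLE_REVIEWER = 400


@dataclass
class PullRequest:
    lines_changed: int
    ai_assisted: bool
    touches_sensitive_paths: bool  # e.g. authentication or payment code


def required_reviewers(pr: PullRequest) -> int:
    """Return how many human approvals this hypothetical team policy demands."""
    reviewers = 1
    if pr.ai_assisted and pr.lines_changed > MAX_LINES_FOR_SINGLE_REVIEWER:
        reviewers += 1  # large AI-assisted diffs get a second reviewer
    if pr.touches_sensitive_paths:
        reviewers += 1  # security-relevant code always gets extra scrutiny
    return reviewers


print(required_reviewers(PullRequest(lines_changed=76, ai_assisted=True, touches_sensitive_paths=False)))  # prints: 1
print(required_reviewers(PullRequest(lines_changed=500, ai_assisted=True, touches_sensitive_paths=True)))  # prints: 3
```

Keeping the rule in plain code makes it reviewable and versioned alongside the repository, though real teams would more likely express it through their CI or code-host settings.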

Without them, the 76% output boost could be undermined by escalating bugs, leading to higher maintenance costs down the line. As 2026 unfolds, the key to harnessing AI will be blending its speed with human judgment, ensuring innovation doesn't come at the expense of stability.