At 10:17 AM CEST on July 26, 2025, the Alibaba-backed Qwen team dropped a bombshell in the AI world with the release of Qwen3-235B-A22B-Thinking-2507, a model laser-focused on deep reasoning.

The product of three months of scaling and refinement, the model targets logic, mathematics, science, and programming, challenging the notion that AI progress is slowing. Its upgrades promise to change how machines work through complex, multi-step problems, raising both excitement and skepticism about its real-world impact.

A Tailored Boost for Reasoning

Three months of further development have honed the model’s reasoning, making it a standout for tasks that require step-by-step logic and analysis. According to the team, it handles intricate problems, from mathematical proofs to scientific queries, and follows instructions and uses tools more accurately than before. This focus on structured thinking sets it apart from general-purpose models and suggests a deliberate shift toward specialized AI. However, the industry’s tendency to overhype such advances invites caution: can it truly deliver across diverse, unpredictable scenarios, or is the optimization narrow in scope?

Native 256K Context for Deep Thought

One of the headline features is native support for a 256K-token context window (262,144 tokens), a major leap that lets the model sustain extended chains of reasoning. This long-context capability allows it to take in long documents or multi-step problems in a single pass and connect ideas across them, a boon for researchers and developers wrestling with large codebases or long-form scientific texts. There is a caveat, though: the computational demand could limit accessibility, confining serious use to well-resourced organizations and reinforcing existing tech disparities.
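To put the computational caveat in rough numbers, the sketch below estimates the key-value (KV) cache that a single full-length request would occupy. It assumes a standard grouped-query-attention cache and uses layer and head counts (94 layers, 4 KV heads, head dimension 128, 16-bit values) that we believe match the published Qwen3-235B-A22B configuration; treat them as assumptions and check the model’s `config.json` before relying on the figure. The function name `kv_cache_bytes` is hypothetical.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int,
                   head_dim: int, bytes_per_value: int) -> int:
    """Estimate KV-cache size: one key and one value vector per KV head, per layer, per token."""
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value  # 2 = key + value
    return tokens * per_token


# Assumed configuration values (verify against the model's config.json).
CONTEXT_TOKENS = 256 * 1024  # "256K" = 262,144 tokens
LAYERS = 94
KV_HEADS = 4
HEAD_DIM = 128
BYTES_BF16 = 2  # bfloat16 cache entries

total = kv_cache_bytes(CONTEXT_TOKENS, LAYERS, KV_HEADS, HEAD_DIM, BYTES_BF16)
print(f"{total / 2**30:.1f} GiB of KV cache for one full-length sequence")
```

Under these assumptions, one full 256K-token sequence needs 47 GiB of cache, on top of the memory for the 235 billion weights themselves, which is why long-context serving tends to stay with well-equipped operators.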

Thinking Mode by Default

Perhaps the most intriguing aspect is that reasoning is the default: there is no need to toggle it on. Qwen3-235B-A22B-Thinking-2507 autonomously constructs lengthy logical chains, aiming for maximum depth and precision without user intervention. This built-in thinking process resembles human deliberation and is a step toward more transparent AI decision-making, since the intermediate reasoning is visible. Still, the lack of an off switch raises questions: will it overcomplicate simple tasks, or is the team confident in its adaptability? The narrative of seamless AI reasoning is compelling, but real-world testing will reveal whether it is a feature or a limitation.
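For developers, "thinking by default" mostly shows up in how output is handled: the reasoning trace and the final answer arrive in one generated string. The sketch below separates them, assuming the trace ends with a `</think>` tag as in other Qwen3 models; the opening `<think>` may be missing because the chat template can insert it on the model’s behalf. The helper `split_thinking` is hypothetical and operates on already-decoded text rather than on token IDs, and the sample string is made up for illustration.

```python
THINK_START = "<think>"
THINK_END = "</think>"  # assumed end-of-reasoning marker


def split_thinking(generated: str) -> tuple[str, str]:
    """Split decoded model output into (reasoning, final_answer)."""
    # Split on the LAST closing tag, in case the trace itself mentions the tag.
    head, sep, tail = generated.rpartition(THINK_END)
    if not sep:
        # No marker found: treat everything as the answer.
        return "", generated.strip()
    reasoning = head.strip()
    # The opening tag may or may not appear in the decoded text.
    if reasoning.startswith(THINK_START):
        reasoning = reasoning[len(THINK_START):].strip()
    return reasoning, tail.strip()


# Illustrative (made-up) output from a thinking-mode model.
sample = "2 + 2: add the units digits.\n</think>\n\nThe answer is 4."
reasoning, answer = split_thinking(sample)
print("REASONING:", reasoning)
print("ANSWER:", answer)
```

Splitting on the last marker keeps the function robust if the reasoning happens to quote the tag; a real deployment would more likely split on the tag’s token ID before decoding.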

A Critical Take

The Qwen team’s push with this model fits a broader trend of open-source AI challenging closed giants such as OpenAI and Google. Its Mixture-of-Experts architecture activates only 22 billion of its 235 billion parameters for each token, balancing power and efficiency. That design choice could democratize advanced reasoning if hardware barriers are addressed.
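The efficiency argument rests on sparse activation: a learned router sends each token to a few "expert" sub-networks, so only a fraction of the weights (22/235, roughly 9%) does work for any given token. Below is a toy top-k gating sketch in plain Python, with scalar functions standing in for the experts’ feed-forward blocks; it illustrates the general technique and is not Qwen’s implementation. The names `top_k_route` and `moe_forward` are hypothetical, and renormalizing the kept weights is one common design choice among several.

```python
import math
from typing import Callable, Sequence


def top_k_route(router_logits: Sequence[float], k: int) -> list[tuple[int, float]]:
    """Return (expert_index, weight) for the k highest-scoring experts."""
    # Softmax over all experts; subtracting the max keeps exp() numerically stable.
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep only the top-k experts; the others are never evaluated.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the kept weights sum to 1.
    kept = sum(probs[i] for i in chosen)
    return [(i, probs[i] / kept) for i in chosen]


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float], k: int) -> float:
    """Weighted sum of the outputs of the k routed experts only."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


# Toy setup: four "experts" that scale their input by different factors.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 1.5]
print(top_k_route(logits, k=2))  # only experts 1 and 3 are selected
print(moe_forward(1.0, experts, logits, k=2))
print(f"active share in Qwen3-235B-A22B: {22 / 235:.1%}")
```

The unselected experts cost nothing at inference time, which is where the savings come from; the full parameter set must still sit in memory, so the hardware barrier does not disappear.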

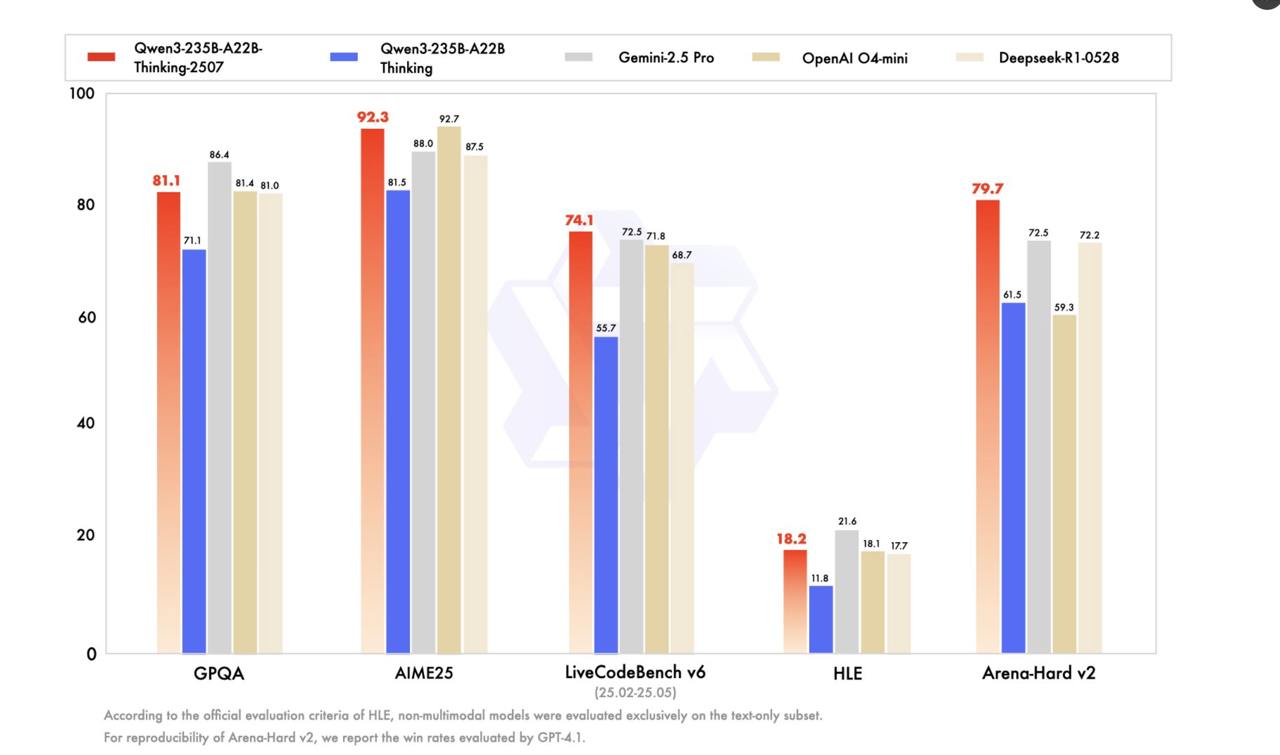

Sentiment online, including posts on X, reflects enthusiasm about its potential in math, coding, and science, but that evidence remains anecdotal until independent benchmarks confirm the claims. Industry boosters may hail the release as progress, while critics could argue it is another step toward over-reliance on AI that sidelines human expertise.


What’s Next?

Qwen3-235B-A22B-Thinking-2507 is available through platforms such as Hugging Face, inviting developers to explore its depths. Its focus on deep reasoning could accelerate fields like academic research and software development, but its success hinges on practical deployment and user feedback. As the AI community digs in, the model may either cement Qwen’s reputation or expose the limits of specialized reasoning AI. For now, it is a provocative addition to the landscape: it promises much, but it has yet to prove its worth.