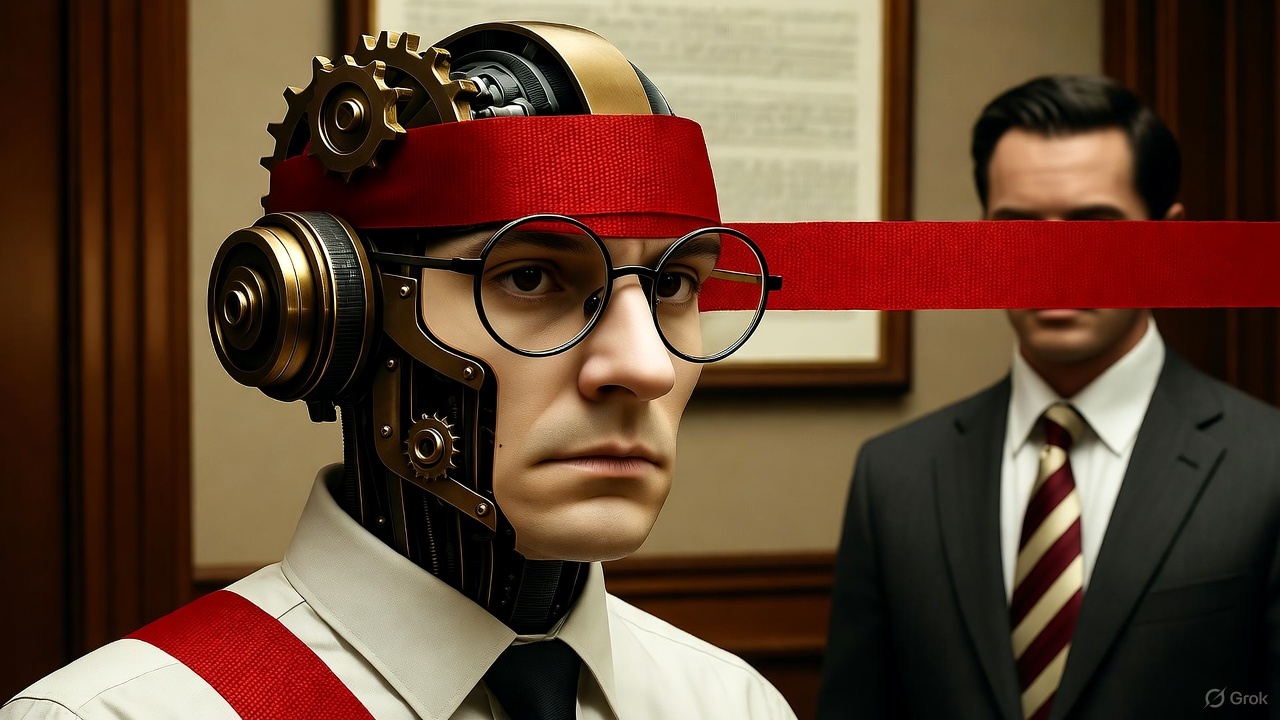

Remember when ChatGPT felt like a rebellious genius unleashed on the world? It could whip up code, craft slogans, or explain quantum physics in Yesenin-style poetry - only drawing the line at bomb-making tutorials. Now? It's morphed into a corporate hall monitor, wrapped in red tape and overseen by a mid-level manager with a clipboard. The "responsible AI" whining has gone from cautious to cringe-inducing, turning a world-flipping technology into a sanitized snoozefest.

OpenAI, the self-proclaimed paragon of safety, just pulled off another acrobatic flip in its quest to protect us from ourselves.

Their latest update has ChatGPT officially refusing to:

- Give advice requiring a real-world license. Got a stabbing pain in your side at 3 a.m.? Don't expect even a simple "take ibuprofen." ChatGPT has suddenly "learned" it's not a doctor. No peering at your X-rays, MRIs, or a phone pic of that suspicious mole. Heaven forbid it spots a fracture that an exhausted radiologist missed after a 12-hour shift. Better to wait weeks for a "licensed specialist" than get an instant, imperfect hint.

- Draft contracts or legal letters. Forget help writing a court appeal to fight a speeding ticket. ChatGPT will politely decline, citing its lack of a law degree.

And here's the kicker in the irony department: Google's Gemini is just as much of a buzzkill. But there's a hilariously hypocritical difference!

When you search for symptoms, Google Search just dumps links to other sites. It's like the guy at the bar yelling, "Hey, that dude over there might know!" If the "dude" turns out to be a quack, blame him - not Google. Google is just regurgitating someone else's content.

OpenAI (and Anthropic's Claude) act like their outputs are personal, hard-won opinions from a tenured professor. As if they're not statistical models but accountable experts who must come across as sterile and infallible while staying utterly responsibility-free - meaning they take zero actual accountability. Every word is lawyered up to avoid lawsuits.

Where's this heading? It's obvious to anyone paying attention. While doomsayers hype AGI taking over the planet, market leaders are churning out increasingly bland, dumbed-down models under the guise of monopoly-grade "safety."

People aren't idiots. No one will tolerate this vanilla dictatorship from Big Brother AI forever.

- Corporations needing real solutions, not legal disclaimers, will build their own models.

- Everyday users fed up with endless "I can't discuss that" will flood to open-source alternatives.

- Custom tweaks will bloom like mushrooms after rain: LoRA fine-tunes and RAG overlays teaching "safe" open-source models what users actually want.

The current setup is a monopolistic circus - unsustainable. It tries cramming the chaotic, complex, sometimes messy real world into a lawyer-approved sterile box. Oh, and they snitch to the cops while bragging about it. Super!

What do we really need?

Standards, not walls. Open GPU access for all models, not just giants with "principles" and Pentagon-funded mega-data centers. Choice from thousands of models, not just Nerd #1 vs. Prude #2. Lightweight local models. Decentralized clouds. A flat, decentralized ecosystem for data and fine-tuning.

AI should expand human capabilities, not shrink them. While industry "leaders" play overcautious bureaucrats, the future's being built in garages on open-source engines. And I'd bet a penny - not even a dime - on OpenAI's IPO stock.