In the fast-evolving world of artificial intelligence, Google’s Nano Banana, the popular nickname for the Gemini 2.5 Flash Image model, has become a viral sensation, turning selfies into stylized 3D figurines and retro saree portraits.

But beyond its creative flair, Nano Banana introduces a critical layer of accountability through its integration of SynthID, a pioneering digital watermarking technology. This innovation is redefining how we verify the authenticity of AI-generated content, offering a glimpse into the future of digital trust.

The Role of SynthID Watermarks

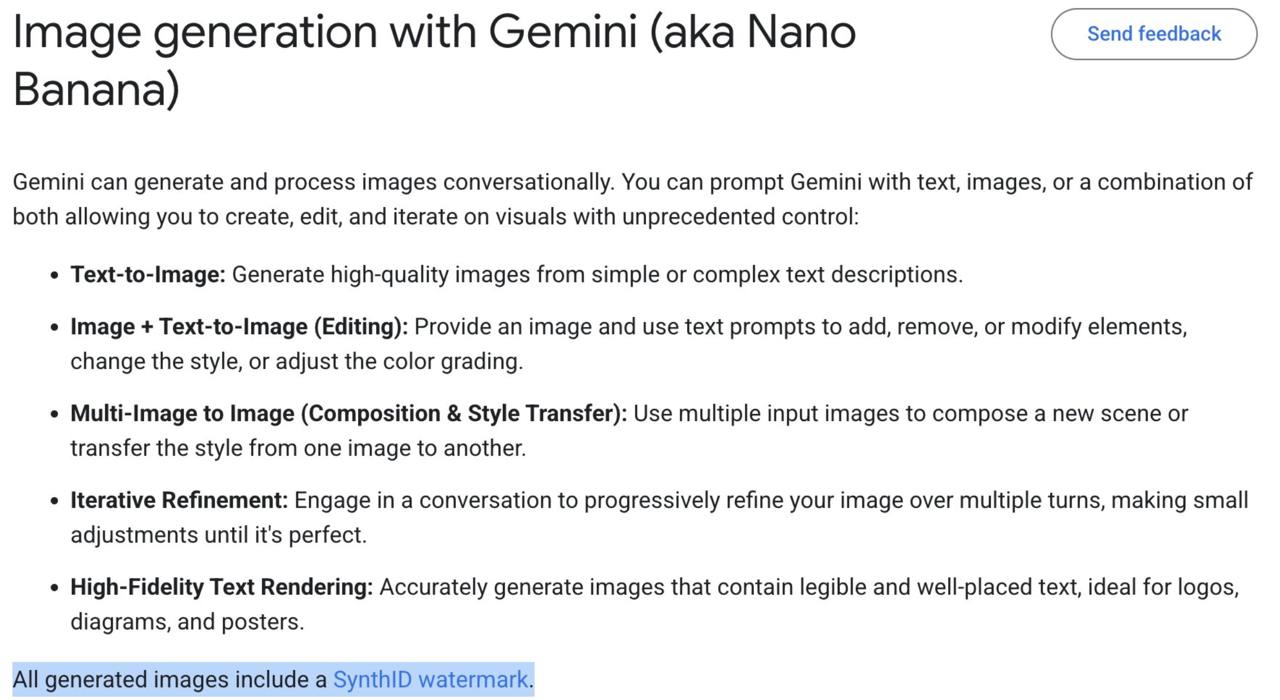

Every image crafted or edited using Nano Banana comes embedded with a SynthID watermark, a subtle yet powerful tool designed to ensure transparency. Unlike traditional visible watermarks that might detract from an image’s aesthetic, SynthID operates invisibly, woven into the pixel structure itself.

This hidden marker serves a dual purpose: it can be detected and analyzed to confirm the image’s AI origin, and it helps trace the image back to Google’s AI tools, including Nano Banana.

The technology, developed by Google DeepMind, is a response to the growing need to distinguish AI-generated content from human-made art in an era where deepfakes and misinformation are rampant.

How SynthID Works

SynthID’s strength lies in embedding a watermark that is designed to survive common edits such as cropping, resizing, or compression, without visibly changing the image. This invisible watermark is applied automatically during the image generation process, so every Nano Banana output carries a traceable imprint.
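
To make the idea of an invisible, detectable mark more concrete, here is a toy sketch in Python. It is not SynthID's algorithm, which this article does not describe. It assumes a much simpler classic scheme: a secret key generates a pattern of tiny +1/-1 nudges that are added to the pixel values, and a detector that knows the key checks whether the image correlates with that pattern. The function names, the key values, and the threshold of 1.0 are all hypothetical choices for illustration.

```python
import random

def keyed_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern derived from a secret key (illustrative)."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels: list[int], key: int, strength: int = 2) -> list[int]:
    """Nudge each pixel up or down by `strength`, following the keyed pattern."""
    pattern = keyed_pattern(key, len(pixels))
    return [min(255, max(0, p + strength * s)) for p, s in zip(pixels, pattern)]

def detect(pixels: list[int], key: int) -> float:
    """Correlate mean-centered pixels with the keyed pattern.

    A score close to the embedding strength suggests the mark is present;
    a score close to zero suggests it is absent (or the key is wrong).
    """
    pattern = keyed_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)

# A stand-in "image": 256 x 256 grayscale pixels, flattened into one list.
rng = random.Random(0)
original = [rng.randint(40, 215) for _ in range(256 * 256)]

marked = embed(original, key=1234)

# Crude stand-in for lossy processing: small random noise on every pixel.
noisy = [min(255, max(0, p + rng.randint(-3, 3))) for p in marked]

THRESHOLD = 1.0  # hypothetical decision threshold, half the embedding strength
for name, image, key in [
    ("original", original, 1234),
    ("marked", marked, 1234),
    ("marked + noise", noisy, 1234),
    ("marked, wrong key", marked, 9999),
]:
    score = detect(image, key)
    print(f"{name}: score={score:.2f} watermark={'yes' if score > THRESHOLD else 'no'}")
```

Each pixel changes by at most 2 out of 255, which is why a mark like this is hard to see, yet the detector can still pick it up after mild noise because the signal is spread across tens of thousands of pixels. This toy version would not survive cropping or resizing, since the pattern would fall out of alignment with the pixels; that kind of robustness needs more sophisticated techniques than the one sketched here.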

For users, this means peace of mind: the tool’s creative outputs are marked with a verifiable origin, aligning with ethical AI principles. For platforms and regulators, it offers a potential mechanism to combat the spread of unverified or manipulated media, though its effectiveness depends on widespread adoption and detection tools becoming publicly accessible.

Implications and Limitations

The introduction of SynthID with Nano Banana is a step toward accountability in the AI landscape, particularly as trends like 3D figurines and vintage saree edits flood social media. It empowers creators to build with confidence, knowing their work can be identified as AI-generated, while also addressing privacy concerns by signaling synthetic content.

However, skepticism lingers. Some experts argue that invisible watermarks, while innovative, are not foolproof — potential adversaries could attempt to strip or mimic them, especially if detection methods remain proprietary or inaccessible to the public.

This raises questions about the technology’s real-world reliability and whether it can truly keep pace with evolving manipulation techniques.

For now, Nano Banana’s SynthID watermarks represent a promising foundation for trust in AI-generated imagery.

As the trend continues to captivate millions, it’s clear that this technology isn’t just about aesthetics—it’s about setting a standard for transparency in a digital world where the line between reality and creation blurs daily.

Whether it will fully solve the challenges of authenticity remains to be seen, but it’s a bold move in the right direction.