In the ever-evolving landscape of generative AI, where text prompts can conjure entire worlds, Kling AI, developed by Kuaishou, has just shipped a major update. On December 3, 2025, the company unveiled Kling Video 2.6, its first model to generate "native audio" alongside video.

This isn't just another incremental tweak; it's a paradigm shift that fuses high-fidelity visuals with synchronized soundscapes in a single pass. Imagine dictating a scene, such as a bustling city street at dusk or a whispered confession under starry skies, and watching AI not only paint the picture but breathe life into it with dialogue, ambient hums, and even subtle footsteps. Kling 2.6 isn't just generating videos anymore; it's crafting immersive experiences.

At its core, Kling 2.6 produces 1080p clips up to 10 seconds long, a sweet spot for social media reels, ad prototypes, or narrative teasers.

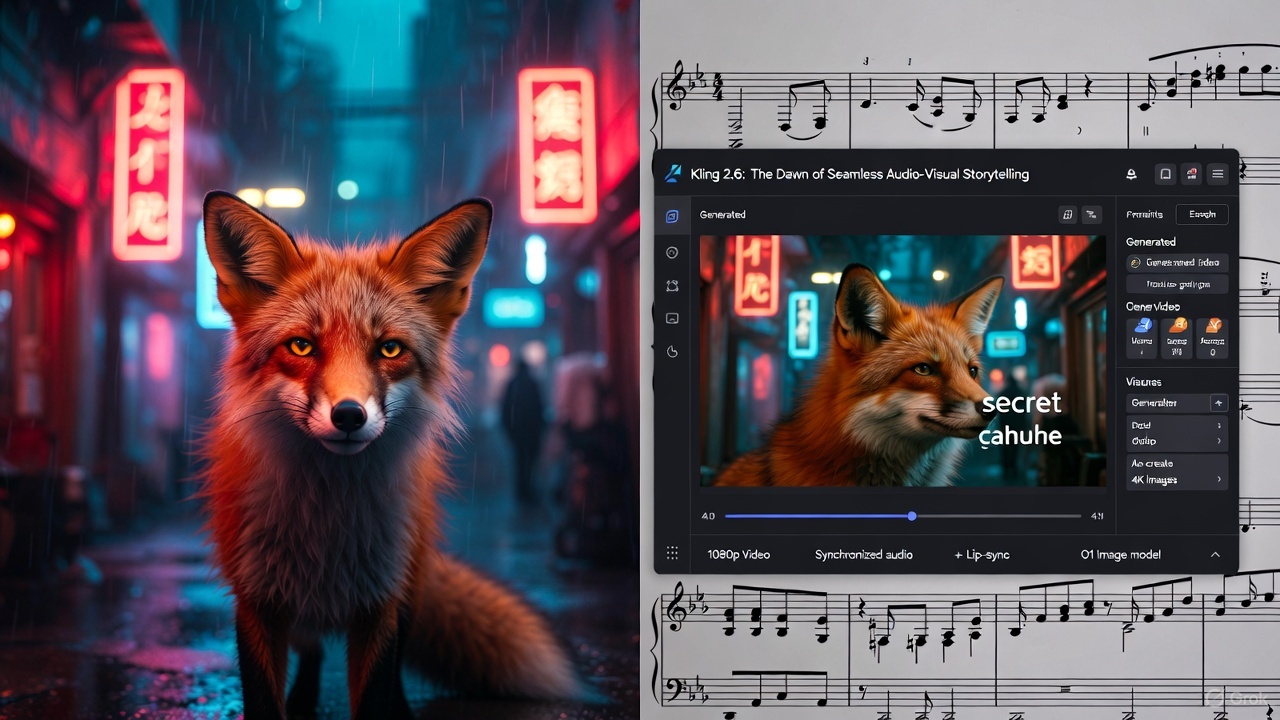

What sets it apart is the integrated audio layer: human voices with precise lip-syncing across multiple languages, including English, Mandarin, Spanish, and more. Users can specify exact phrases in their prompts (for example, "Whisper 'I have a secret' in a husky French accent"), and the model speaks the line while syncing mouth movements to the words with uncanny realism.

This extends beyond humans; animal characters now vocalize too, from a fox's sly chuckle to a parrot's multilingual squawk, opening doors for whimsical animations or wildlife documentaries.

Early testers report a 40% improvement in motion fidelity over the 2.1 version, thanks to enhanced multimodal understanding that better interprets complex prompts involving physics, emotions, and environmental interactions.

The model supports both text-to-video and image-to-video workflows, allowing creators to start from scratch or build on a static reference photo. For instance, upload a serene landscape image, add "A lone hiker sings a folk tune as rain begins to fall," and out pops a clip with harmonious vocals, pattering rain effects, and echoing birdsong, all without post-production stitching.

Notably, while it handles single keyframes masterfully, dual keyframe control (start and end frames) remains in beta, teased for a future rollout to enable even tighter narrative arcs. This structural control is bolstered by Kling's upgraded narrative logic, which reduces artifacts like unnatural limb distortions by 25% and ensures consistent character appearances across frames.

Accessibility is another win. Kling 2.6 is already live on a constellation of aggregator platforms, democratizing pro-level tools for indie creators. Freepik integrates it for rapid stock video production, Fal.ai offers API hooks for developers to embed it in custom apps, and Higgsfield provides a polished interface with real-time previews.

Other hotspots include Pollo AI (with a 50% launch discount), RoboNeo (30% off via invite codes), Artlist for cinematic grading, and Media.io for quick browser-based edits. Pricing is modest, around 10 to 20 credits per generation on most sites, with bulk plans unlocking unlimited runs.

Kuaishou reports over 5 million global users since Kling's 2024 debut, and this update is projected to boost adoption by 60% in creative industries like advertising and film pre-viz.

But Kling's Omni Launch Week didn't stop at video. In a bold countermove to dominant image generators like Midjourney's latest iterations, the team spotlighted O1 Image, their powerhouse text-to-image model powered by the Kolors 2.1 architecture.

Available since the O1 Video suite's rollout in late November 2025, O1 Image churns out 4K visuals in under 30 seconds (think hyper-detailed concept art, product mockups, or surreal posters) from prompts like "A cyberpunk fox in a neon-lit alley, oil painting style."

It shines in multimodal tasks, such as doodle-to-refined-art or reference-image upscaling, with a 35% boost in prompt adherence over competitors. Integrated seamlessly with 2.6, it lets users generate an image first, then animate it with audio, streamlining workflows for storytellers.

Rounding out the suite, Kling is muscling into the music generation arena with an audio engine that aims to rival Suno and Udio. It is not a standalone track composer yet, but 2.6's native capabilities include singing synthesis (prompt a character to belt out lyrics in genres from opera to hip-hop) and procedural sound design that can be built up into full tracks.

The neural audio module generates royalty-free effects up to 10 seconds long, like thumping basslines or orchestral swells, which can loop into longer pieces. Early demos showcase a robot crooning jazz standards with pitch-perfect intonation, hinting at future expansions into full-song mode.

This audio-first approach addresses a key pain point: 70% of AI video creators cite sound syncing as their biggest hurdle, per a recent Kuaishou survey, and Kling 2.6 slashes that time from hours to seconds.

As AI blurs the line between imagination and reality, Kling 2.6 feels like a sneak peek at Hollywood's next toolkit. It's not flawless (longer clips and advanced editing are still on the horizon), but for creators hungry for "see the sound, hear the visual" magic, this is the spark. Whether you're a marketer scripting a viral ad or an artist animating a bedtime story, Kling invites you to speak your vision into existence. The future of content isn't silent; it's symphonic.

Author: Slava Vasipenok

Founder and CEO of QUASA (quasa.io), the world's first remote work platform with payments in cryptocurrency.

Innovative entrepreneur with over 20 years of experience in IT, fintech, and blockchain. Specializes in decentralized solutions for freelancing, helping to overcome the barriers of traditional finance, especially in developing regions.