In the fast-evolving world of artificial intelligence, staying on top of which models excel in specific tasks can feel overwhelming. That's where Artificial Analysis shines as an indispensable resource.

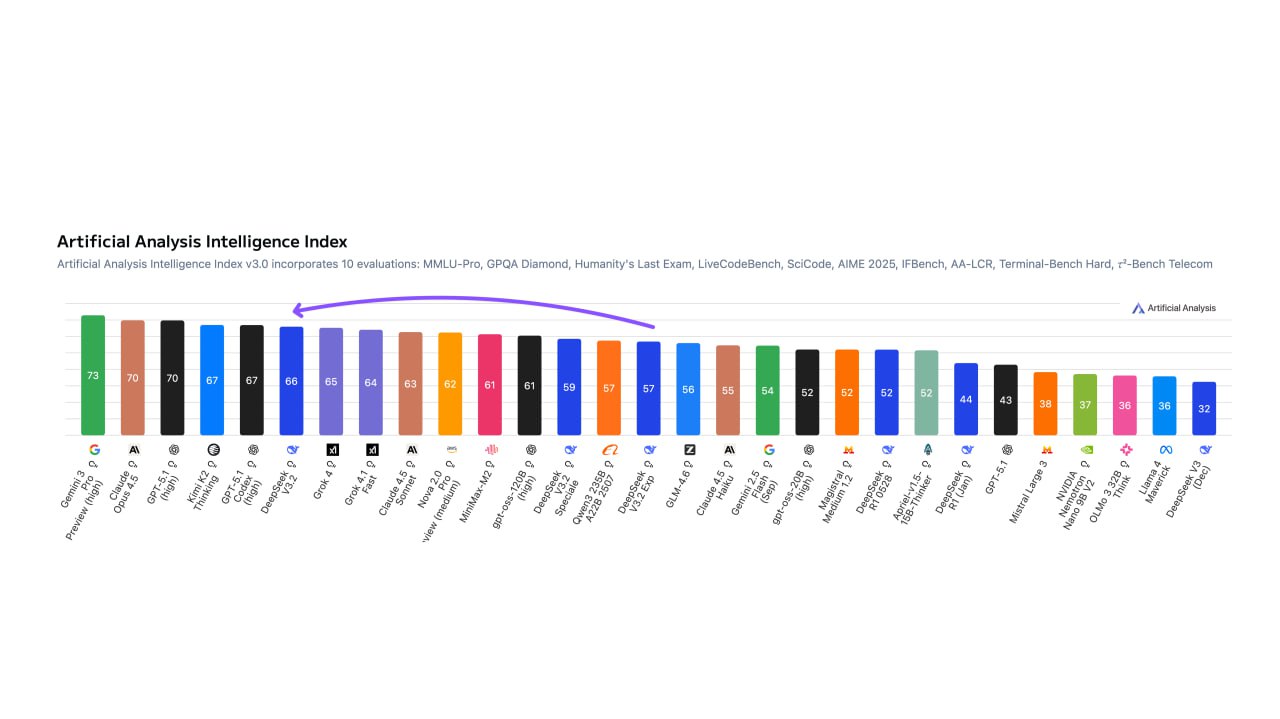

This independent benchmarking platform rigorously evaluates hundreds of AI models and APIs across diverse capabilities - from raw intelligence and coding prowess to image generation and hallucination resistance. By aggregating data from over 10 standardized benchmarks and real-world arenas, it empowers developers, researchers, and businesses to pick the best tools for text generation, code writing, visual creation, and more, all while factoring in speed, cost, and openness.

As of December 2025, their latest reports reveal seismic shifts in the AI landscape, highlighting breakthroughs in efficiency, reliability, and multimodal performance. Let's dive into the five biggest updates that are reshaping how we choose and deploy AI.

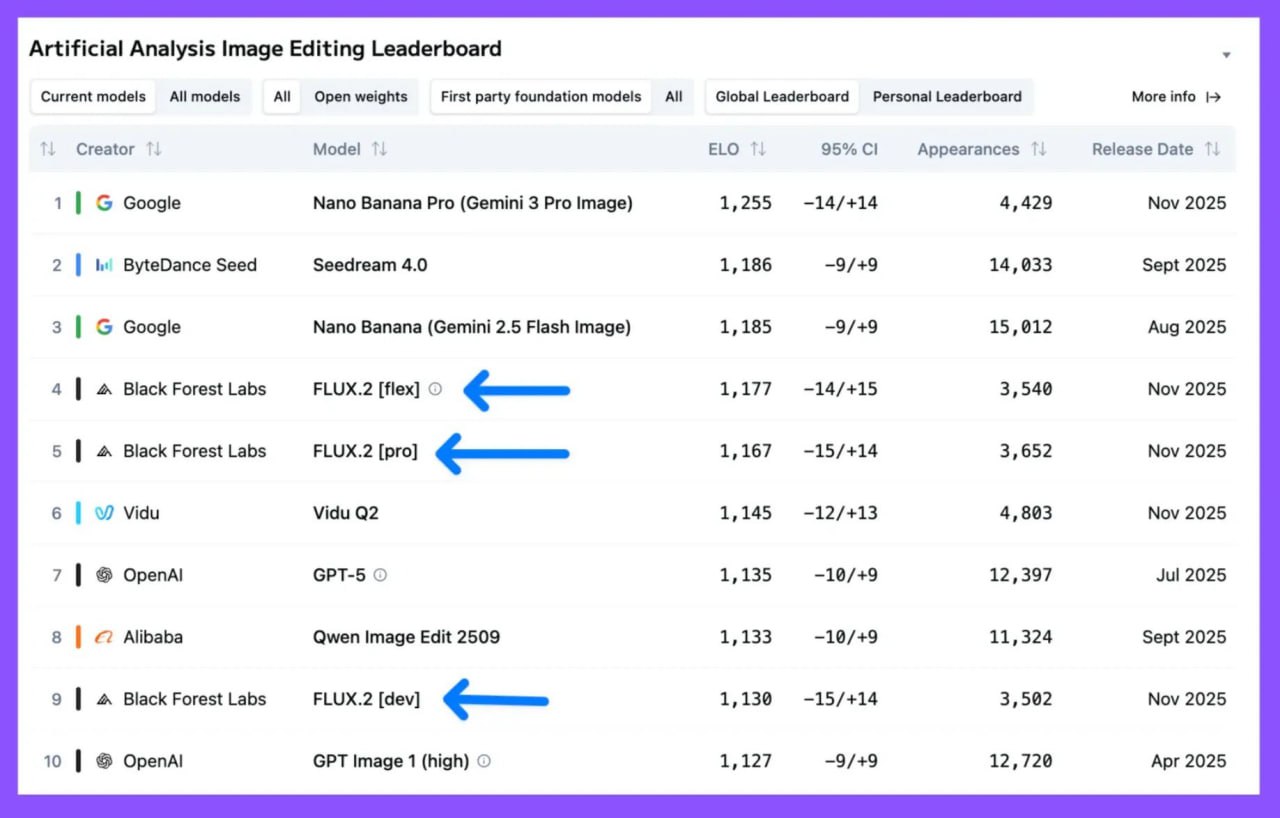

1. Flux.2 Takes the Crown in Image Generation and Editing

If you're in the business of creating or tweaking visuals, Artificial Analysis's Image Editing Leaderboard has a new standout: Flux.2 from Black Forest Labs. This model family, spanning variants like [flex], [pro], and [dev], has surged toward the top, outperforming nearly every competitor in blind user-voted arenas. With an Elo rating hovering around 1,177 for the [flex] version, it still trails Google's Nano Banana Pro (Gemini 3 Pro Image), rated 1,255, by roughly 80 points, but it dominates the open-source category.

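Arena leaderboards like this one turn thousands of blind pairwise votes into ratings. The minimal sketch below assumes the classic Elo formula on a 400-point logistic scale; Artificial Analysis may fit its ratings differently, so read it as a way to interpret a rating gap rather than as the leaderboard's actual method. The function names and the K-factor of 32 are illustrative choices, not part of any published specification.

```python
# Assumption: classic Elo on a 400-point logistic scale; not necessarily
# how the Artificial Analysis leaderboard computes its ratings.

def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the logistic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float,
               k: float = 32.0) -> tuple[float, float]:
    """Update both ratings after one vote.

    score_a is 1.0 if A won, 0.0 if B won, 0.5 for a tie.
    k (hypothetical default of 32) controls how far one vote moves ratings.
    """
    expected_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta


# Ratings quoted in the text: 1,255 (Gemini 3 Pro Image) vs 1,177 (Flux.2 [flex]).
p = expected_score(1255, 1177)
print(f"Expected preference for the 1,255-rated model: {p:.1%}")  # about 61%
```

Under that assumption, the 78-point gap between 1,255 and 1,177 implies the higher-rated model would win about 61% of blind matchups: a clear edge, but far from a rout.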
What makes Flux.2 stand out? It's not just raw power; it's the balance of fidelity, creativity, and editability. Across thousands of head-to-head matchups, Flux.2 handles complex edits, like inpainting, outpainting, and style transfers, with uncanny precision, often preserving fine details that others blur or fabricate.

For instance, in tasks requiring seamless object removal or background swaps, it scores 3-5% higher in user preference than rivals like OpenAI's GPT Image (high) or Alibaba's Qwen Image Edit 2509.

Released in November 2025, these models come in both proprietary and fully open variants, and the open ones can be deployed locally without vendor lock-in. The result? A democratized toolkit for designers and creators, where high-quality image manipulation is now accessible at a fraction of the compute cost of closed alternatives.

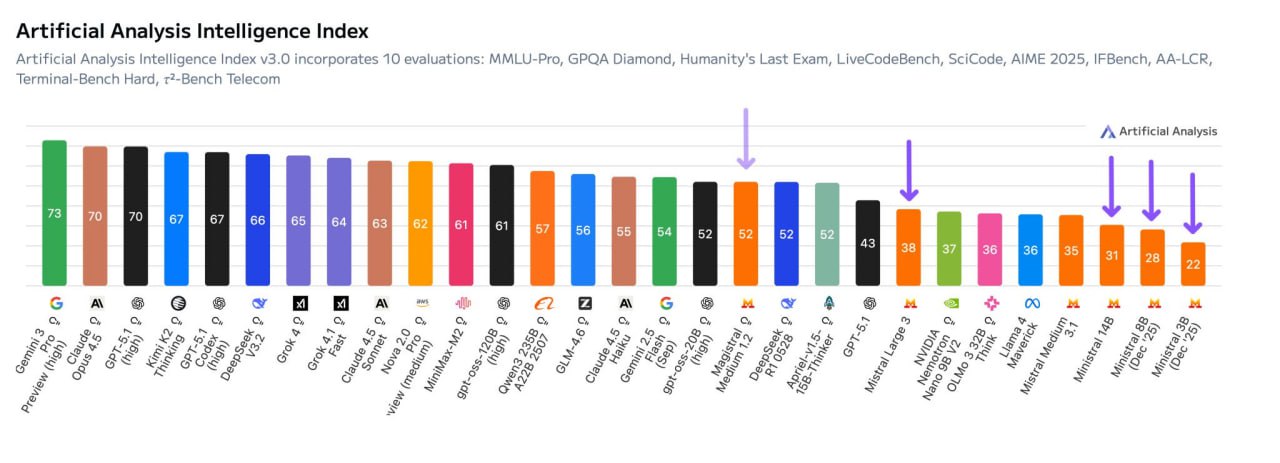

2. DeepSeek V3.2: The Open-Source Powerhouse That's Smarter, Faster, and Dirt Cheap

Open-source AI just got a massive upgrade with DeepSeek V3.2, which Artificial Analysis ranks as a top performer in its Intelligence Index v3.0. With a composite score that surpasses Anthropic's Claude Sonnet 4.5 and xAI's Grok 4, this mixture-of-experts model (671B total parameters, roughly 37B active per token) lands squarely in the "most attractive quadrant": high intelligence paired with feasible scale.

Its leap is staggering: up from V3's already impressive marks, it now excels in reasoning-heavy evals like GPQA Diamond (graduate-level science) and Humanity's Last Exam, edging out proprietary rivals by 2-4 points on average.

But the real wow factor? Affordability and speed. DeepSeek V3.2 generates text and code at blistering rates - up to 150 tokens per second on standard hardware - while costing mere pennies per million tokens (around $0.05 blended input/output). This is a boon for developers building apps on tight budgets; imagine churning out production-grade code for a full-stack project at 1/10th the price of GPT-5.1.

As an openly licensed model from a Chinese lab, it also benefits from transparent training data disclosures, scoring high on Artificial Analysis's Openness Index. In short, if you're tired of bloated API bills, DeepSeek V3.2 turns "open-source dreams" into reality, proving that high-IQ AI doesn't require deep pockets.
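Price comparisons like the $0.05 blended figure and the "1/10th the price" claim above depend on how input and output prices are combined. A minimal sketch, assuming a 3:1 input-to-output token weighting (to our understanding, the convention Artificial Analysis uses for its blended price); the per-token prices and the 50M-token project size are hypothetical, not quotes from DeepSeek or OpenAI.

```python
def blended_price(input_per_m: float, output_per_m: float,
                  input_ratio: float = 3.0) -> float:
    """Blend USD-per-million-token prices, weighting input tokens input_ratio:1
    against output tokens (3:1 by default, an assumed convention)."""
    return (input_ratio * input_per_m + output_per_m) / (input_ratio + 1.0)


def job_cost(total_tokens: int, blended_per_m: float) -> float:
    """Dollar cost of a workload, given its token count and a blended price per million tokens."""
    return total_tokens / 1_000_000 * blended_per_m


# Hypothetical prices in USD per million tokens, not quotes from any provider.
cheap = blended_price(input_per_m=0.04, output_per_m=0.08)
pricey = blended_price(input_per_m=1.00, output_per_m=5.00)
print(f"cheap: ${cheap:.3f}/M, pricey: ${pricey:.3f}/M, ratio: {pricey / cheap:.1f}x")

# A hypothetical 50M-token project under each price.
project_tokens = 50_000_000
print(f"project: ${job_cost(project_tokens, cheap):.2f} vs ${job_cost(project_tokens, pricey):.2f}")
```

Dividing one blended price by another gives the kind of ratio quoted above; if your workload is output-heavy, adjust `input_ratio`, because the ratio can shift considerably.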

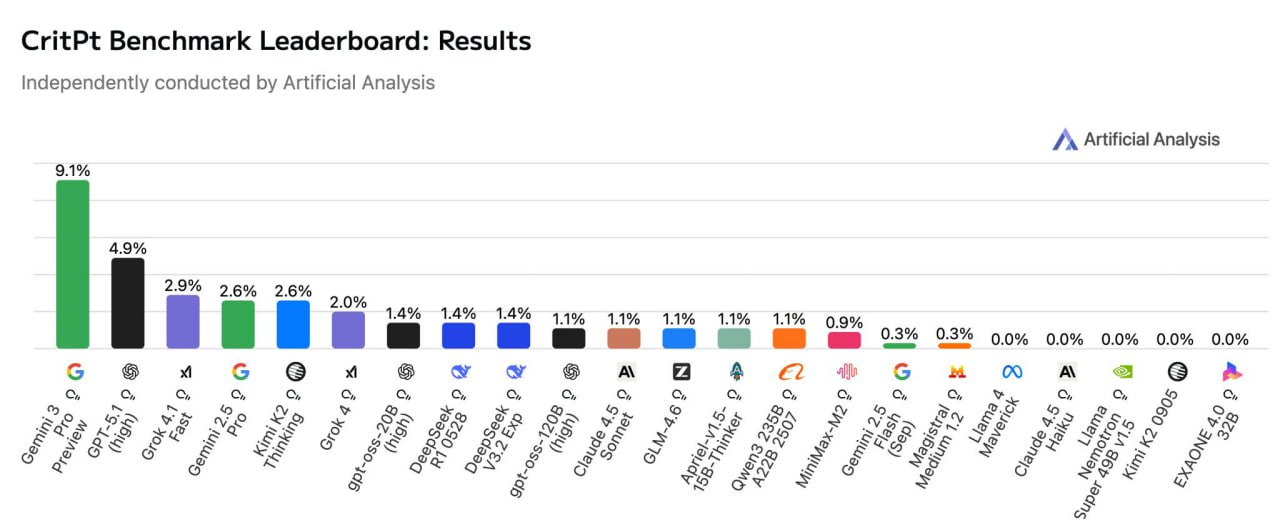

3. CritPt Benchmark: AI's Physics Olympiad Report Card

Tackling the frontiers of scientific reasoning, Artificial Analysis unveiled CritPt, a grueling new benchmark modeled after International Physics Olympiad problems. This test probes models' ability to solve novel, multi-step physics puzzles involving quantum mechanics, electromagnetism, and thermodynamics - far beyond rote memorization.

Early results are humbling: even the leaders struggle, with Google's Gemini 3 Pro scraping a 9.1% success rate, the highest on the board. Trailing at 4.9% is OpenAI's GPT-5.1, while xAI's Grok 4 Fast and Anthropic's Claude Haiku 4.5 hover around 2-3%.

These low scores underscore a broader truth: current LLMs are brilliant at pattern-matching but falter on genuinely novel problems in the hard sciences. CritPt offers no partial credit, rewarding only fully correct derivations, which exposes gaps in symbolic reasoning. For context, human Olympiad qualifiers average 20-30% on similar sets, highlighting the chasm AI must bridge.
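The effect of withholding partial credit is easy to see with a toy grader. This sketch uses made-up step results and generic, hypothetical function names; it is not CritPt's actual grading code, only an illustration of why all-or-nothing scoring yields much lower numbers than averaging correct steps.

```python
def strict_accuracy(results: list[list[bool]]) -> float:
    """Share of problems where every step is correct (no partial credit)."""
    return sum(all(steps) for steps in results) / len(results)


def partial_credit(results: list[list[bool]]) -> float:
    """Average share of correct steps per problem (partial credit allowed)."""
    return sum(sum(steps) / len(steps) for steps in results) / len(results)


# Hypothetical: three multi-step problems, each step graded right (True) or wrong (False).
graded = [
    [True, True, True],
    [True, True, False],
    [True, False, False],
]
print(f"strict: {strict_accuracy(graded):.0%}, partial: {partial_credit(graded):.0%}")
```

Here a model that gets two-thirds of all steps right still solves only one problem in three end to end, which is the kind of gap strict scoring exposes.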

On the bright side, open models like DeepSeek R1 (3.2%) show promise relative to their cost, suggesting that with fine-tuning, accessible tools could accelerate research in fields like materials science or climate modeling. Artificial Analysis plans monthly updates, making CritPt a vital pulse-check for AI's scientific maturity.

4. OmniScience Index: Calling Out Hallucinations in the Age of "I Don't Know"

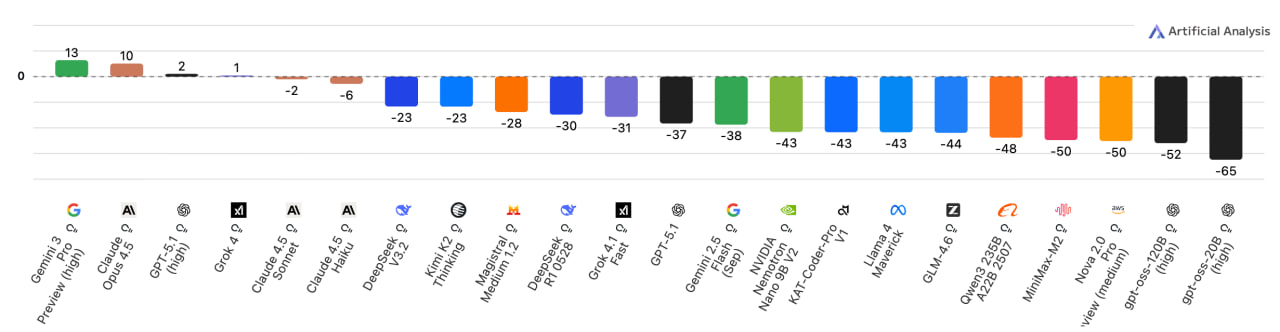

One of the most innovative additions is the AA-OmniScience Index, a hallucination detector that flips the script on model reliability. Unlike traditional accuracy metrics, it treats an honest "I don't know" as better than a wrong answer, docking points for fabricated facts, which is crucial in high-stakes domains like medicine or law. Across 26 evaluated models, leaders like Google's Gemini 3 Pro (score: 13) and Anthropic's Claude Opus 4.5 (10) shine with hallucination rates under 20%, blending high accuracy (70%+) with cautious responses.

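The scoring idea can be sketched in a few lines. This assumes a simple rule in which a correct answer earns +1, a wrong answer costs -1 and an abstention scores 0, scaled to a -100 to 100 range, and it defines hallucination rate as wrong answers divided by all non-correct responses; both are assumptions about the index's mechanics, and the function names are hypothetical.

```python
from collections import Counter


def omniscience_style_index(grades: list[str]) -> float:
    """Assumed rule: +1 per correct, -1 per incorrect, 0 per abstain, scaled to -100..100."""
    counts = Counter(grades)  # missing labels count as 0
    return 100.0 * (counts["correct"] - counts["incorrect"]) / len(grades)


def hallucination_rate(grades: list[str]) -> float:
    """Assumed definition: share of non-correct responses that were wrong answers, not abstentions."""
    counts = Counter(grades)
    not_correct = counts["incorrect"] + counts["abstain"]
    return counts["incorrect"] / not_correct if not_correct else 0.0


# Two hypothetical models with the same 50% accuracy but different habits when unsure.
guesser = ["correct"] * 5 + ["incorrect"] * 5
cautious = ["correct"] * 5 + ["incorrect"] * 1 + ["abstain"] * 4
for name, grades in [("guesser", guesser), ("cautious", cautious)]:
    print(name, omniscience_style_index(grades), hallucination_rate(grades))
```

Both hypothetical models answer half the questions correctly, but the one that abstains when unsure scores 40 instead of 0 and hallucinates far less, which is the behavior the index is meant to surface.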
The dark horse? Chinese models like GLM-4.6 and Qwen3 235B, which tie or beat Western counterparts on hallucination rate (15-25%) while remaining open-weight. The real eye-opener is the flop of OpenAI's GPT-OSS 120B, a popular open-weight model for local use: it racks up a dismal -65 score, with a hallucination rate exceeding 50%.

Many devs run it offline for privacy, but this benchmark screams caution - it's prone to confidently wrong answers on niche queries, like historical events or technical specs. Instead, pivot to battle-tested alternatives like DeepSeek or Kimi K2, which combine low costs ($0.02/M tokens) with refusal rates over 40%, fostering more trustworthy deployments.

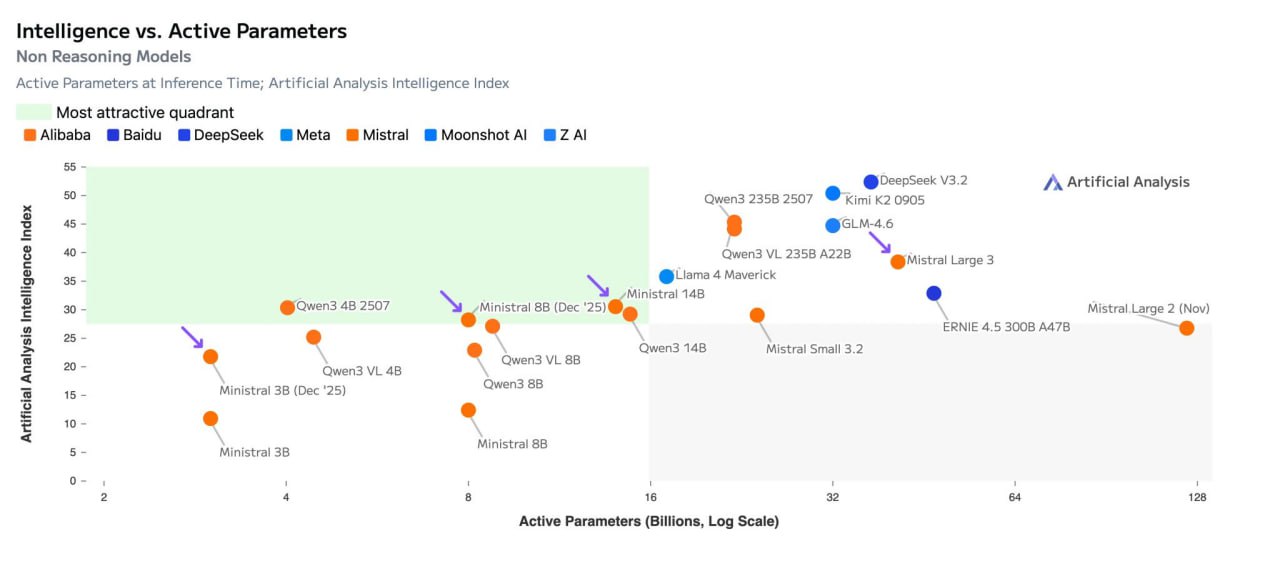

5. Mistral's Latest: Struggling to Keep Pace with China's AI Surge

Rounding out the updates, Artificial Analysis's deep dive into Mistral's December 2025 releases (Large 3, Medium 31B/48B, and Small 3.2) paints a mixed picture. These models post solid Intelligence Index scores (around 52-55) and are strong in coding (LiveCodeBench: 64%) and agentic tasks (τ²-Bench Telecom: 61%), but they lag in the "frontier" race. On a plot of intelligence against active parameters, Mistral variants cluster in the mid-tier, outpaced by Chinese upstarts like Baidu's ERNIE 5.0 (58) and Z AI's GLM-4.6 (62).

The trend is clear: Europe's Mistral is efficient (latency under 2 seconds for 500 tokens) and open-weight friendly, but China's ecosystem - fueled by massive data troves and state-backed compute - delivers outsized gains. DeepSeek V3.2, for example, matches Mistral Large 3's reasoning at half the params and a tenth the price.
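A figure like "under 2 seconds for 500 tokens" bundles two measurements: time to first token and output speed. The sketch below assumes end-to-end time is simply the first-token wait plus streaming time at a constant rate; the 0.5-second wait and 150 tokens/s speed are hypothetical inputs, and the function names are illustrative.

```python
def response_time(ttft_s: float, output_tokens: int, tokens_per_s: float) -> float:
    """Simplified end-to-end seconds: time to first token plus streaming at a constant speed."""
    return ttft_s + output_tokens / tokens_per_s


def required_speed(output_tokens: int, budget_s: float, ttft_s: float) -> float:
    """Output speed (tokens/s) needed to finish within budget_s after the first-token wait."""
    streaming_time = budget_s - ttft_s
    if streaming_time <= 0:
        raise ValueError("time to first token already exceeds the budget")
    return output_tokens / streaming_time


# Hypothetical figures: 0.5 s to first token, 500 output tokens.
print(response_time(0.5, 500, 150.0))  # total seconds at 150 tokens/s
print(required_speed(500, 2.0, 0.5))   # tokens/s needed to finish in 2 s
```

Under this simplified model, finishing 500 tokens within 2 seconds after a half-second wait takes about 333 tokens per second, so it helps to check which provider and settings a quoted latency refers to.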

With models like MiniMax-M2 pushing the envelope, the gap is widening; Artificial Analysis notes a 15% intelligence delta favoring Eastern models in its Q3 2025 reports. For Mistral fans, the silver lining is customization potential, since their modular architecture suits fine-tuning, but catching the Dragon will demand bolder innovation.

## Why Artificial Analysis Matters Now More Than Ever

These updates from Artificial Analysis aren't just data dumps; they're a roadmap for the AI arms race. In a market flooded with 347+ models, their quadrant charts (intelligence vs. params/speed) and specialized indices cut through the hype, revealing trade-offs like how reasoning models (e.g., o1-style) boost accuracy by 10-15% but spike latency threefold.
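Quadrant charts like these split models along two axes, such as intelligence and active parameters. As a minimal sketch, assuming the dividing lines sit at the median of each axis (Artificial Analysis draws its own boundaries), the hypothetical `classify_quadrants` function below labels each model; the model names and scores are made up for illustration.

```python
from statistics import median


def classify_quadrants(models: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Label models against the median intelligence and median parameter count.

    models maps a name to (intelligence_score, active_params_in_billions).
    Assumption: quadrant boundaries are the medians of each axis.
    """
    intel_cut = median(score for score, _ in models.values())
    param_cut = median(params for _, params in models.values())
    labels = {}
    for name, (score, params) in models.items():
        smart = score >= intel_cut
        small = params <= param_cut
        if smart and small:
            labels[name] = "most attractive (smart and small)"
        elif smart:
            labels[name] = "smart but large"
        elif small:
            labels[name] = "small but less capable"
        else:
            labels[name] = "least attractive (large and less capable)"
    return labels


# Hypothetical models, not real leaderboard values.
example = {"A": (60, 30), "B": (50, 400), "C": (65, 500), "D": (40, 20)}
for name, label in classify_quadrants(example).items():
    print(name, "->", label)
```

Model A lands in the "most attractive" quadrant because it scores above the median while using fewer active parameters than the median model.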

Whether you're optimizing for cost (hello, DeepSeek), reliability (Gemini leads), or visuals (Flux reigns), this platform ensures informed choices. As AI integrates deeper into workflows - from code autocompletion to scientific discovery - these benchmarks remind us: the smartest model isn't always the biggest, but the one that fits your needs perfectly. Keep an eye on their Q4 State of AI report; with physics and hallucination metrics maturing, 2026 could redefine what's possible.

Author: Slava Vasipenok

Founder and CEO of QUASA (quasa.io) - Daily insights on Web3, AI, Crypto, and Freelance. Stay updated on finance, technology trends, and creator tools - with sources and real value.

Innovative entrepreneur with over 20 years of experience in IT, fintech, and blockchain. Specializes in decentralized solutions for freelancing, helping to overcome the barriers of traditional finance, especially in developing regions.