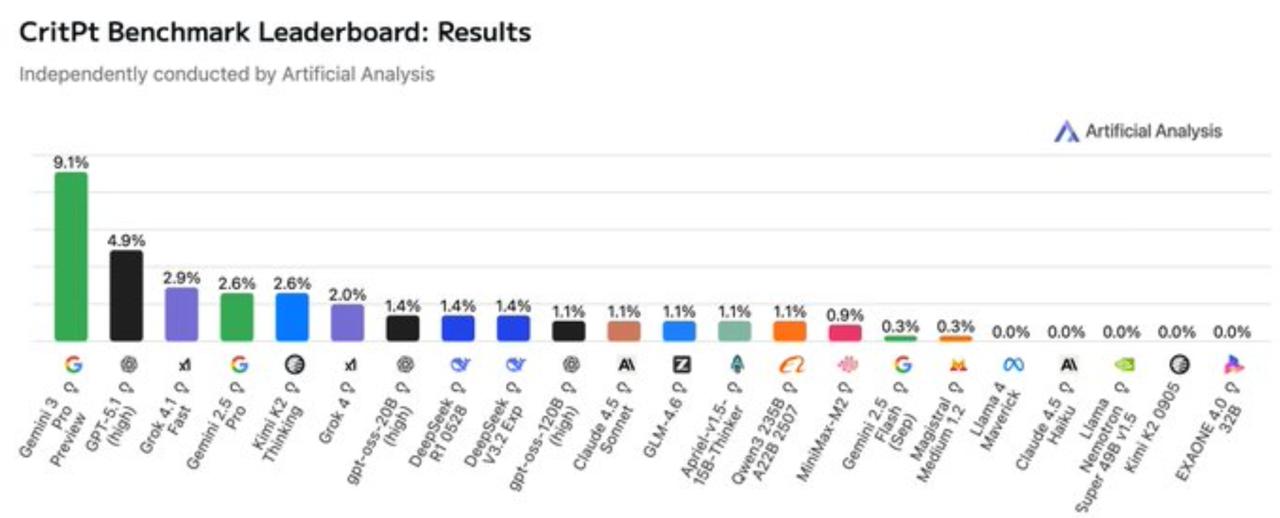

In the relentless race to mimic human intellect, artificial intelligence has aced trivia nights and crushed code marathons, but when it comes to unraveling the universe's deepest secrets, even the sharpest models are stumbling in the dark. Enter CritPt, the groundbreaking benchmark that's just exposed the chasm between today's language models and the rigorous reasoning demanded by cutting-edge physics research. Clocking in at a mere 9.1% accuracy, Google's Gemini 3.0 Pro Preview has claimed the top spot on this leaderboard - a record that's as humbling as it is historic.

Crafted by an international consortium of over 60 physicists from more than 30 institutions, including heavyweights like Argonne National Laboratory and the University of Illinois Urbana-Champaign, CritPt isn't your garden-variety quiz. Dubbed "Complex Research using Integrated Thinking - Physics Test" (playfully pronounced "Critical Point," a nod to phase transitions in thermodynamics), this evaluation thrusts AI into the trenches of graduate-level inquiry. Launched just days ago on November 21, 2025, it comprises 71 intricate "composite research challenges" and 190 modular checkpoints, each a self-contained puzzle mimicking the standalone projects a capable junior PhD student might tackle over weeks or months.

What sets CritPt apart from flashier benchmarks like MMLU or GPQA? It's unapologetically frontier-focused. The problems - 70 in the public test set, with answers held private to thwart data leaks - are entirely novel, drawn from unpublished corners of physics and vetted by postdocs and professors in their respective fields. No recycling of textbook tropes here; these are fresh conundrums requiring not just recall, but genuine synthesis of concepts across domains.

Spanning 11 subfields, from the quantum weirdness of atomic, molecular, and optical (AMO) physics to the cosmic sprawl of astrophysics and the chaotic swirls of fluid dynamics, the challenges demand models to derive equations from scratch, simulate nonlinear phenomena, or predict outcomes in high-energy particle collisions without a safety net.

The verdict? A collective facepalm for the AI world. Gemini 3.0 Pro's 9.1% score - achieved without external tools or multiple retries - barely cracks the surface, solving just six or seven of the 70 challenges outright. Trailing in its wake: Anthropic's Claude 4.1 at 4.9%, OpenAI's GPT-5.1 (high) at 2.9%, and xAI's Grok 4 Fast at 2.6%. Even heavy-hitters like DeepSeek-V2 (1.4%) and Qwen2.5-Max (1.1%) barely clear the 1% mark, with many models failing to crack a single problem across five attempts.
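To make those percentages concrete, here is a rough back-of-the-envelope sketch that converts each quoted accuracy into an implied number of solved challenges out of the 70-problem test set. It assumes the reported accuracy is simply the average fraction of challenges solved, which is an assumption and not something the leaderboard spells out here; the helper name `implied_solves` is made up for illustration.

```python
# Accuracy figures as quoted in the article. The solve counts printed below
# are derived estimates, not numbers reported by the benchmark.
TEST_SET_SIZE = 70  # challenges in the test set, per the article

reported_accuracy = {
    "Gemini 3.0 Pro Preview": 0.091,
    "Claude 4.1": 0.049,
    "GPT-5.1 (high)": 0.029,
    "Grok 4 Fast": 0.026,
    "DeepSeek-V2": 0.014,
    "Qwen2.5-Max": 0.011,
}


def implied_solves(accuracy: float, n_challenges: int = TEST_SET_SIZE) -> float:
    """Hypothetical helper: assumes accuracy = solved challenges / total challenges."""
    return accuracy * n_challenges


for model, acc in reported_accuracy.items():
    print(f"{model}: about {implied_solves(acc):.1f} of {TEST_SET_SIZE} challenges")
```

Under that assumption the leader lands between six and seven challenges, and the bottom of the list works out to roughly one challenge or fewer. The fractional values would be consistent with scores averaged over several runs, though that too is a guess.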

For context, this isn't rote computation; it's the kind of integrated thinking that powers breakthroughs, like modeling statistical mechanics in disordered materials or forecasting biophysically inspired protein folding under extreme conditions.

Digging deeper into the leaderboard reveals intriguing patterns. Efficiency emerges as a dark horse: While Grok 4 burned through a staggering 4.9 million tokens - averaging nearly 60,000 per challenge for exhaustive chain-of-thought deliberation - Gemini 3.0 Pro pulled off its lead with about 10% fewer tokens than GPT-5.1, hinting at smarter, more concise reasoning pathways.

This token thriftiness could prove crucial as models scale toward trillion-parameter behemoths, where computational costs skyrocket. Yet, the raw numbers underscore a stark reality: Even with prompts engineered to elicit verbose explanations, AI's grasp on physics remains embryonic. In nuclear physics tasks, for instance, models often misapply conservation laws; in nonlinear dynamics, they fabricate unstable equilibria that defy real-world stability.

CritPt's architects, led by trailblazers like Minhui Zhu and Minyang Tian - veterans of benchmarks such as SciCode and SWE-Bench - envision it as a litmus test for "research assistant" viability. Each challenge is scoped to be solvable by a motivated grad student armed with domain knowledge, yet invisible to training corpora. This deliberate opacity ensures evaluations mirror authentic discovery, not regurgitation.

Early adopters, including indie labs and enterprise teams, are already queuing up for the public grading server API, which promises blind, contamination-free scoring. The open dataset, released alongside a technical report detailing asymmetric denoising techniques and flow-matching innovations in model training, invites global tinkering - potentially accelerating hybrid AI-human workflows in labs worldwide.

So, what does 9.1% really mean? It's a sobering checkpoint in the AGI odyssey, reminding us that while models like Gemini dazzle in multimodal tasks or creative coding, true scientific reasoning - blending intuition, error-correction, and interdisciplinary leaps - lies tantalizingly out of reach. Physics, with its unforgiving math and empirical anchors, exposes the brittleness: A 91% failure rate isn't hype deflation; it's a call to arms. As one contributor quipped in the benchmark's development notes, "We've built the wall; now watch the climbers slip."

Looking ahead, CritPt could reshape the frontier. With updates slated for emerging models like rumored Grok 4 Heavy variants or Llama 3.2 iterations, the leaderboard might tick upward - but don't bet on double digits anytime soon. For now, it's a badge of humility: AI as promising apprentice, not tenured sage. In the grand quantum foam of progress, this benchmark isn't just measuring models; it's recalibrating our expectations for what intelligence, artificial or otherwise, truly demands.