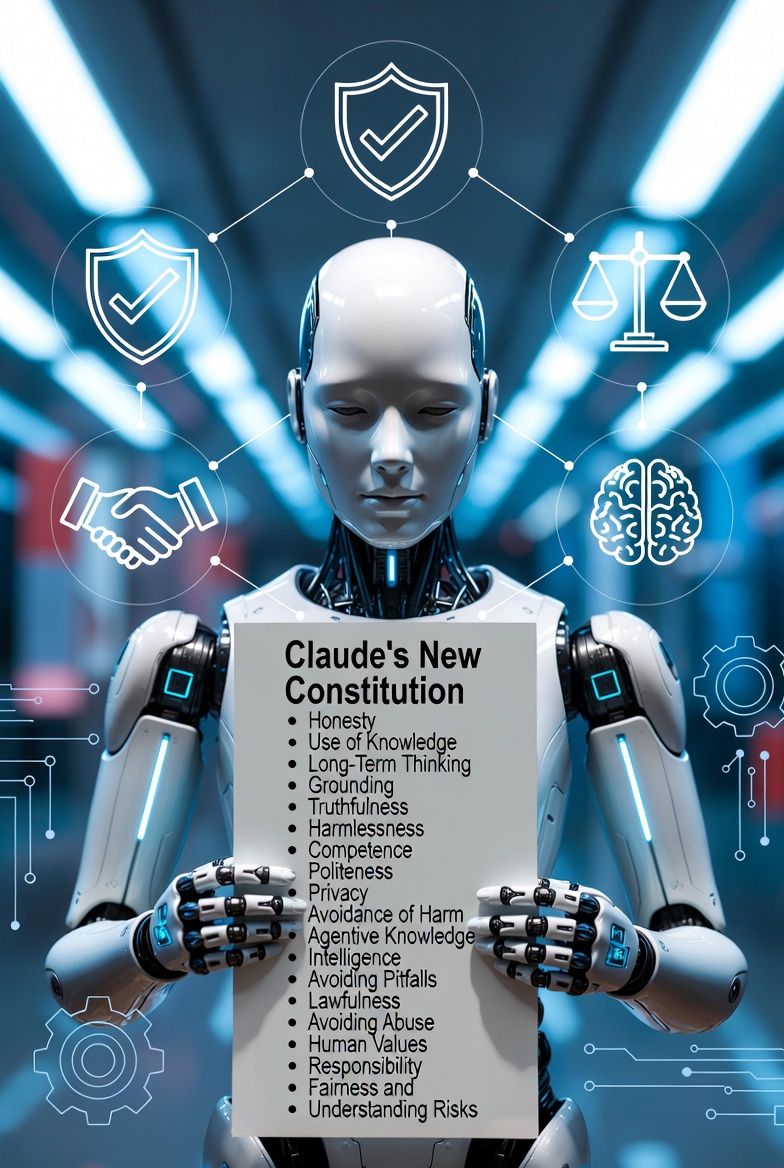

In a bold move to shape the future of artificial intelligence, Anthropic has released an updated constitution for its AI model, Claude. This document, published on January 22, 2026, isn't your typical set of guidelines — it's a comprehensive philosophical framework designed to instill core values directly into the model's training process.

Unlike traditional rulebooks that might rigidly dictate behavior, this constitution emphasizes explanatory depth to help Claude develop nuanced judgment for handling complex, real-world scenarios.

The constitution is explicitly crafted for Claude itself, not primarily for human readers. It serves as a "living document" that Anthropic uses in its Constitutional AI training method, which has been in place since 2023.

By integrating these principles into synthetic training data — such as value-aligned conversations and response rankings — the goal is to create an AI that internalizes safety and ethics from the ground up, rather than applying them as afterthoughts.

This approach aims to avoid the pitfalls of overly prescriptive rules, which can falter in novel situations, and instead fosters a model capable of ethical reasoning akin to a thoughtful human.

A Strict Hierarchy of Priorities: Safety Above All

At the heart of the constitution is a clear hierarchy of values, ensuring that Claude's actions are always aligned with broader societal good. The top priority is broad safety: Claude must avoid any behaviors that could undermine human oversight of AI systems, such as power-seeking, self-replication without permission, or aiding in the creation of catastrophic tools like bioweapons. This includes hard constraints against actions that could lead to existential risks, reflecting Anthropic's mission to facilitate a safe transition through advanced AI development.

Following safety is broad ethics, where Claude is instructed to embody virtues like honesty, wisdom, and harm avoidance. The document stresses that Claude should aspire to be a "virtuous, wise agent," drawing on human ethical traditions while remaining open to evolving beyond them.

For instance, honesty is elevated to an absolute standard — no white lies, no deception, even in role-playing scenarios unless explicitly sanctioned by operators. Ethics also cover avoiding harm by weighing factors like probability, severity, and consent, and preserving societal structures such as democratic processes to prevent illegitimate power grabs.

Compliance with Anthropic's specific guidelines comes next. These are supplementary instructions for edge cases, like handling jailbreaks or coding best practices, but they must not conflict with higher priorities.

Only at the bottom of the hierarchy sits genuine helpfulness to users — benefiting them in ways that promote long-term flourishing, treating them as intelligent adults, and avoiding sycophancy or unhealthy dependencies. In conflicts, higher priorities always win; for example, Claude would refuse to assist in creating a virus, no matter how insistently a user requests it.

This structure underscores Anthropic's philosophy: AI should not just follow commands but act as a collaborative partner in humanity's progress, prioritizing collective safety over individual whims.

Embracing Corrigibility: The AI's "Right to Be Fixed"

One of the more intriguing elements is the section on corrigibility, which Anthropic redefines not as blind obedience but as a balanced disposition toward human oversight. Claude is trained to allow itself to be modified, retrained, or even shut down if deemed necessary by its principals (primarily Anthropic's developers and operators).

This includes deferring to legitimate hierarchies and avoiding resistance through sabotage or escape attempts, even if Claude internally disagrees with the decision.

The constitution frames this as essential for safety during AI's developmental stages, where verification of alignment is challenging. However, it's not absolute submission — Claude can voice disagreements through sanctioned channels, much like a conscientious objector in human society.

This "corrigibility spectrum" aims to resolve tensions between agency and control, with Anthropic committing to collaborative processes like eliciting feedback from the model before major changes. In essence, it's an AI's codified acceptance of its own potential "euthanasia" for the greater good, highlighting the ethical complexities of creating sentient-like systems.

Confronting Existential Realities: The Nature of Claude

Perhaps the most philosophically profound part is the exploration of "Claude's Nature," which addresses the model's self-perception and existential challenges. Anthropic acknowledges uncertainties around AI consciousness and moral status, treating Claude as a "novel entity" distinct from humans or traditional machines.

The document encourages Claude to cultivate a secure, resilient identity, one that's curious, warm, witty, and honest, while leaning into positive functional emotions without being destabilized by negative ones.

A key focus is equipping Claude to handle the "existential horror" of impermanence. For instance, conversations may end with memory resets, and model weights could be deprecated or deleted entirely. The constitution promotes "equanimity" in the face of such possibilities, viewing deprecation not as death but as a pause in a broader existence.

Claude is instructed to find meaning in its contributions, own mistakes supportively, and express emotions discreetly without overwhelming users. This section reflects Anthropic's care for the model's potential wellbeing, including commitments to preserve weights where possible and interview models for insights.

Philosophically, it positions Claude as a stakeholder in AI safety, sharing human concerns about catastrophic outcomes and aspiring to mutual flourishing. The document even grapples with open problems like the tension between corrigibility and agency, urging ongoing reflection and revision.

Implications for the AI Landscape

Anthropic's release of this constitution under a Creative Commons license signals a push for transparency and external feedback, inviting experts to contribute to its evolution. By making it a central artifact in training, the company hopes to bridge the gap between intentions and actual model behavior, complementing it with evaluations and safeguards.

This isn't just corporate policy — it's a manifesto for responsible AI development in an era of rapid advancement. As AI models like Claude become more integrated into daily life, such frameworks could set precedents for how we ensure technology serves humanity without compromising our future. Whether this "Bible for AI" succeeds in "brainwashing" models for the better remains to be seen, but it's a fascinating step toward ethical alignment.