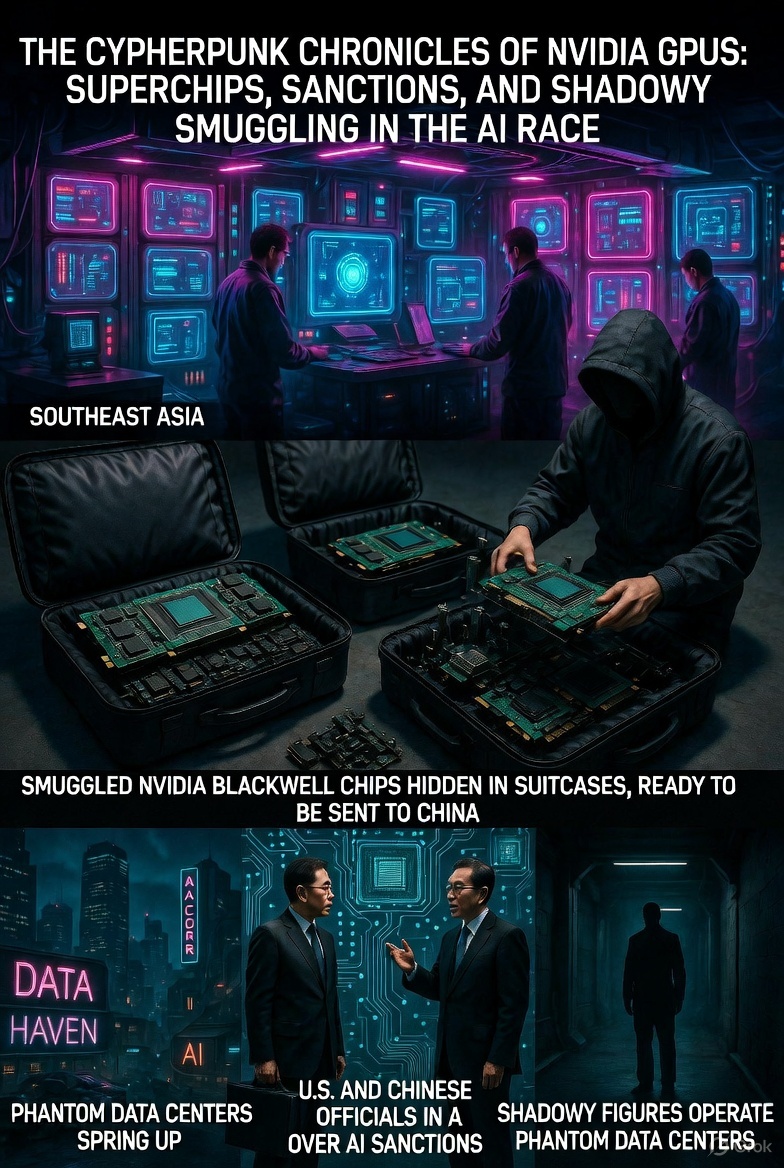

In the neon-lit underbelly of global tech geopolitics, Nvidia's GPUs aren't just silicon miracles - they're the MacGuffins of a real-world cyberpunk thriller. Picture this: rogue data centers vanishing like ghosts across Southeast Asia, servers dismantled into suitcase-sized contraband, and superprocessors fueling AI models that could outthink gods.

What began as straightforward export bans has morphed into a labyrinth of espionage, economic jujitsu, and hardware heists, where U.S. presidents flip policies overnight, Chinese firms hawk homegrown rivals, and startups like DeepSeek pull off capers straight out of a William Gibson novel.

As of December 2025, the saga of Nvidia's H100, H200, and the elusive Blackwell chips reads like speculative fiction, but it's all too real - and it's reshaping the AI arms race.

From Iron Curtain to Conditional Green Light: The U.S. Export Tango

The story kicks off with the U.S. Bureau of Industry and Security (BIS) clamping down on Nvidia's high-end GPUs in 2022, citing national security risks. Washington deemed advanced chips like the H100 and A100 too potent for export to China, fearing they'd supercharge military AI or surveillance tech.

This "small yard, high fence" strategy aimed to starve Beijing's ambitions, but it backfired spectacularly: Nvidia's China revenue cratered from $4 billion in 2022 to near-zero by mid-2024, while black-market premiums soared to 10x retail prices.

Fast-forward to December 18, 2025, and President Donald Trump - fresh off his 2024 reelection - drops a bombshell. In a statement that stunned Silicon Valley and Wall Street alike, Trump announced the U.S. would lift export controls on Nvidia's H200, the company's second-most-powerful AI chip, allowing sales to "approved" Chinese customers.

The catch? A proposed 25% tariff-like fee on all proceeds, funneled back to the U.S. Treasury, with Trump quipping that "25% will be paid to the United States of America."

This reversal, dubbed a "critical moment" by analysts, hands Nvidia a lifeline - potentially unlocking billions in lost sales - but it's laced with vetting hurdles and end-user restrictions to prevent military diversion.

Critics like Senate Minority Leader Chuck Schumer blasted it as "selling out America," warning it could arm China's PLA with tools for asymmetric warfare. Yet, even as crates of H200s get the nod, reports indicate the chips are already infiltrating sensitive Chinese entities, including military-linked labs, via pre-existing smuggling routes.

Beijing's Pushback: Huawei's Ascend and the Great Chip Swap

China isn't taking this lying down. In a mirror-image countermove, Beijing has doubled down on self-reliance, urging state-backed firms to shun Western silicon in favor of domestic alternatives. Just days before Trump's announcement, China's Ministry of Industry and Information Technology (MIIT) added Huawei's Ascend 910D and Cambricon's MLU series to its official procurement list for the first time, explicitly recommending them for AI workloads.

Huawei, battered by U.S. sanctions since 2019, is ramping up output: the company plans to double production of its top AI chips in 2026, leveraging stockpiled tech to close the gap.

But here's the rub - Chinese chips aren't quite there yet. Huawei's Ascend 910D matches Nvidia's throttled H20 in raw flops but lags in training large language models (LLMs), where ecosystem maturity, software optimization, and interconnect efficiency matter most. Nvidia CEO Jensen Huang acknowledged as much in June 2025, noting wryly that "Huawei has got China covered" if the U.S. stays out.

Real-world benchmarks show Ascend setups taking 2-3x longer for equivalent training runs, forcing Chinese firms into costly workarounds like hybrid clusters.

Still, with U.S. approvals now trickling in, China could impose its own limits on H200 imports, prioritizing domestic vendors to protect Huawei's turf. It's a high-stakes game of chicken, where ideology clashes with the inexorable math of compute power.

DeepSeek's Blackwell Gambit: Next-Gen Chips in the Crosshairs

Enter DeepSeek, the plucky Chinese AI upstart that's become the anti-hero of this tale. Founded in 2023, the firm has punched above its weight with open-source models like DeepSeek-V2, rivaling GPT-4 on benchmarks while costing a fraction to run.

Now, according to a bombshell report from The Information on December 10, 2025, DeepSeek is training its next flagship model - tentatively DeepSeek-V4 - on thousands of Nvidia's Blackwell GPUs, the bleeding-edge architecture unveiled in March 2024 and shipping in volume since late 2024.

These behemoths boast 208 billion transistors, 192 GB of HBM3e memory, and up to 20 petaflops of sparse FP4 compute - making them, by Nvidia's figures, up to 4x faster for AI training than the H100.

The kicker? Blackwell exports to China were never even on the table; BIS rules explicitly bar them to prevent "dual-use" proliferation. Nvidia swiftly rebutted the claims as "far-fetched," but six unnamed sources paint a picture of industrial-scale audacity: DeepSeek allegedly sourced the chips through a smuggling ring, bypassing bans with Southeast Asian proxies.

This isn't amateur hour - it's a calculated bet on Blackwell's secret sauce for DeepSeek's signature innovation: sparse attention mechanisms.

The Phantom Pipeline: Smuggling Schemes Fit for the Silver Screen

No cyberpunk yarn is complete without a heist, and Nvidia's GPU trade delivers. U.S. prosecutors announced "Operation Gatekeeper" on December 9, 2025, busting a $160 million ring that rerouted H100 and H200 shipments via fake firms and bogus paperwork.

Two Chinese nationals face charges for smuggling server racks hidden in consumer electronics consignments, with seizures topping $50 million in hardware. But the real artistry lies in the "phantom data center" playbook, as detailed in The Information's exposé.

Here's how it works: Intermediaries in Singapore, Malaysia, or Taiwan set up shell data centers, snapping photos of humming Nvidia/Dell racks for "official audits." Once cleared, the gear gets stripped to components - GPUs, PSUs, cables - and repackaged as innocuous "server spares" for trucking across borders.

Logistics favor compact 8-GPU nodes over the 72-GPU NVL72 behemoths (weighing 1.5 tons each); you can't exactly suitcase a supercomputer.

These micro-clusters, often airlifted via Hong Kong or Macao, reassemble into clandestine farms powering everything from e-commerce bots to military sims.

Costs? A smuggled Blackwell can fetch $100,000+ on the gray market, but for DeepSeek, the ROI is in model quality - Blackwell's native sparse acceleration delivers 2x inference speedups and 2.5x training throughput over Hopper-era chips in FP8 sparse workloads.

Sparse Attention: The Technical Edge in a Tariffed World

DeepSeek's edge isn't just illicit hardware - it's algorithmic wizardry. In September 2025, the firm unveiled DeepSeek-V3.2-Exp, incorporating DeepSeek Sparse Attention (DSA): a fine-grained technique that prunes irrelevant token interactions in LLMs, slashing long-context inference costs by up to 50% with negligible quality dips. Traditional dense attention scales quadratically with sequence length - O(n²) hell for million-token prompts - but DSA's "lightning indexer" cheaply scores the preceding tokens and lets each query attend only to the top-k most relevant ones, so the core attention step grows roughly with n·k instead of n², boosting efficiency without the usual accuracy trade-offs.
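To make the dense-versus-sparse contrast concrete, here is a minimal Python sketch of top-k sparse attention for a single query, plus a counter for how many query-key scores causal attention computes. It is not DeepSeek's code: `index_scores` is a hypothetical input standing in for the lightning indexer, and the sketch ignores multi-head layouts, batching, and the indexer's own cost (which, as DeepSeek describes it, still touches every earlier token, just far more cheaply than full attention).

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def dense_attention(q, keys, values):
    """Scaled dot-product attention for one query: scores every key."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, k) * scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def topk_sparse_attention(q, keys, values, index_scores, k):
    """Attend only to the k keys with the highest index scores.

    `index_scores` is a hypothetical stand-in for a cheap indexer pass; this
    sketch takes it as input rather than reproducing DeepSeek's indexer.
    """
    ranked = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)
    keep = ranked[:k]
    return dense_attention(q, [keys[i] for i in keep], [values[i] for i in keep])


def causal_score_count(n, k=None):
    """Query-key scores computed by causal attention over n tokens.

    Dense: token i scores all i + 1 tokens up to itself -> n(n+1)/2, i.e. O(n^2).
    Top-k: token i scores at most k of them -> roughly n*k once n >> k.
    """
    if k is None:
        return n * (n + 1) // 2
    return sum(min(i + 1, k) for i in range(n))
```

With an assumed 128,000-token context and an assumed k = 2048, `causal_score_count(128_000)` gives 8,192,064,000 scores while `causal_score_count(128_000, k=2048)` gives 260,047,872, roughly a 31x reduction in the core attention step.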

Blackwell is tailor-made for this: its second-gen Transformer Engine adds NVFP4 precision, and the Blackwell Ultra refresh upgrades the special function units (SFUs) that compute the EX2 exponentials inside attention, delivering 2x faster attention-layer processing and 1.5x more AI FLOPS than the original Blackwell. Benchmarks from NVIDIA GTC 2025 show Blackwell clusters achieving world-record DeepSeek-R1 inference - 30x faster than Hopper equivalents in sparse MoE (Mixture of Experts) setups. For DeepSeek, this means training V4 on smuggled stacks could yield a model matching GPT-5 at half the energy bill, democratizing frontier AI while thumbing its nose at sanctions.
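For readers wondering what "NVFP4 precision" means in practice, the sketch below shows block-scaled 4-bit quantization in plain Python. As NVIDIA has described the format, NVFP4 stores each value as a 4-bit E2M1 float and shares one scale factor across every 16-value block; this sketch keeps that block structure but, as a simplifying assumption, stores the scale as an ordinary float and omits the FP8 scale encoding and per-tensor scale the real format uses. The function names are illustrative, not part of any NVIDIA library.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (1 sign, 2 exponent, 1 mantissa bit).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # one shared scale factor per 16 values


def quantize_block(block):
    """Return (scale, codes) so that code * scale approximates each value.

    Simplification: the scale stays a Python float; real NVFP4 stores it as
    FP8 (E4M3) and applies a per-tensor scale on top.
    """
    amax = max(abs(x) for x in block)
    # Map the largest magnitude in the block onto 6.0, the largest E2M1 value.
    scale = amax / 6.0 if amax > 0 else 1.0
    codes = []
    for x in block:
        target = abs(x) / scale
        # Nearest grid point; ties go to the smaller magnitude because min()
        # returns the first minimum and the grid is sorted ascending.
        mag = min(E2M1_GRID, key=lambda g: abs(target - g))
        codes.append(math.copysign(mag, x))
    return scale, codes


def quantize(values):
    """Split a flat list into 16-value blocks and quantize each one."""
    return [quantize_block(values[i:i + BLOCK_SIZE])
            for i in range(0, len(values), BLOCK_SIZE)]


def dequantize(blocks):
    """Expand (scale, codes) blocks back into approximate real values."""
    return [code * scale for scale, codes in blocks for code in codes]
```

In the real format each 16-value block costs 16 × 4 bits plus one 8-bit scale, about 4.5 bits per value versus 16 for BF16, which is where the memory and throughput gains come from; the price is a rounding error of up to half a grid step (times the block scale) per value, which `dequantize(quantize(...))` makes visible.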

Epilogue: When Fiction Becomes Firmware

In 2025's AI cold war, Nvidia GPUs aren't tools - they're talismans of power, smuggled across borders like forbidden tomes in a dystopian epic. Trump's H200 thaw might cool tensions, but with Huawei ascendant and phantoms in the supply chain, the race accelerates.

DeepSeek's Blackwell gambit underscores a brutal truth: Innovation waits for no embargo. As these superchips proliferate in the shadows, one wonders if we're scripting the next singularity - or just the plot twist where the machines outmaneuver us all. In this cyberpunk reality, the only constant is compute, and it's flowing freer than ever, one disassembled server at a time.

Author: Slava Vasipenok

Founder and CEO of QUASA (quasa.io) - Daily insights on Web3, AI, Crypto, and Freelance. Stay updated on finance, technology trends, and creator tools - with sources and real value.

Innovative entrepreneur with over 20 years of experience in IT, fintech, and blockchain. Specializes in decentralized solutions for freelancing, helping to overcome the barriers of traditional finance, especially in developing regions.