Google DeepMind has released Gemini Robotics-ER 1.5, the first model in its Gemini Robotics family to be made broadly available to developers, built specifically to serve as a “reasoning brain” for physical robots. Unlike earlier robotics AIs that were locked inside labs or tied to specific hardware, this model is open to every developer through Google AI Studio and the Gemini API: no special access, no NDA.

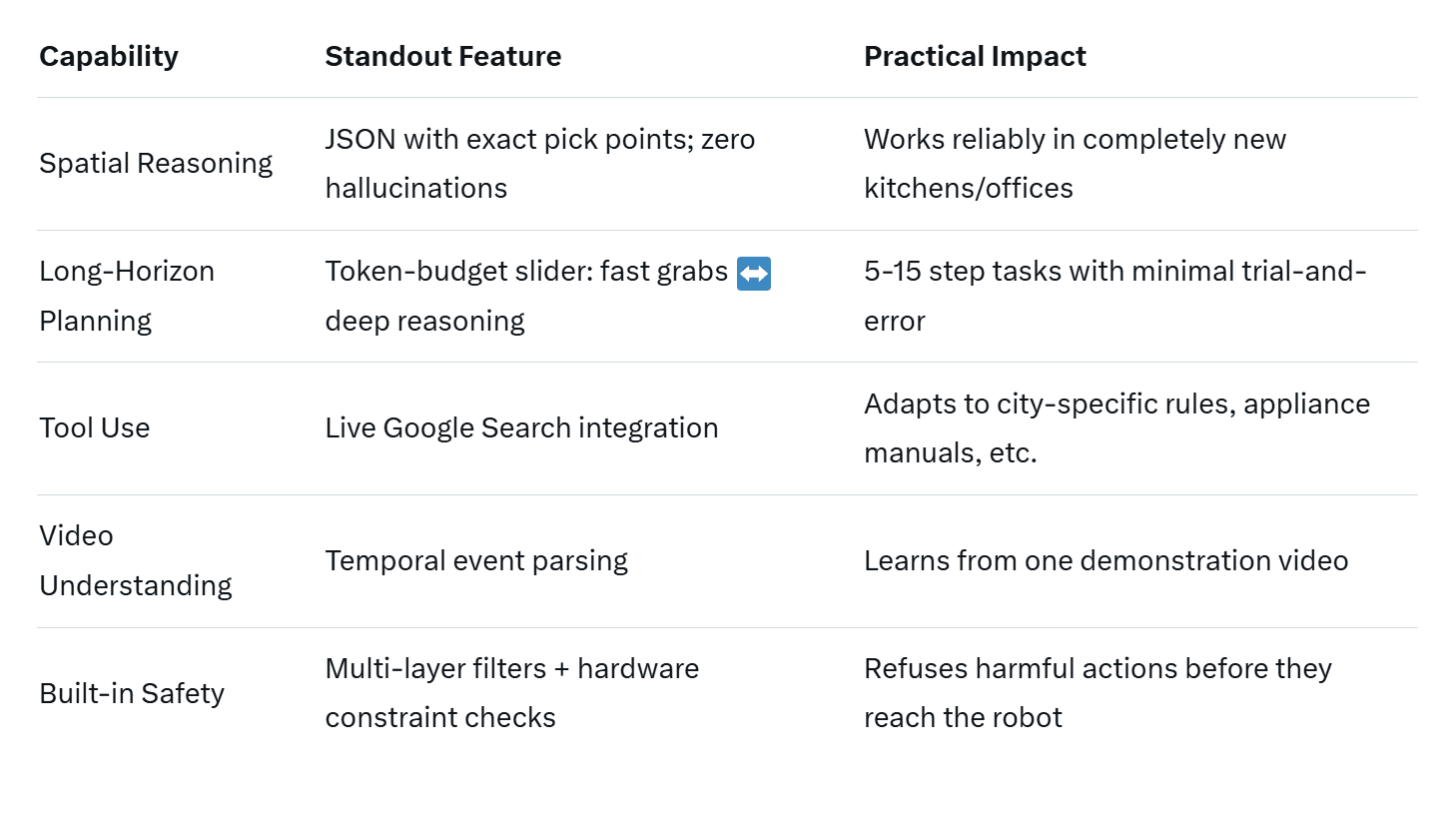

Think of it as the missing high-level planner that sits on top of any low-level controller. It takes a single image or short video of a scene, understands what’s physically possible, breaks complex goals into executable steps, and even calls Google Search when it needs real-world knowledge it wasn’t explicitly trained on.
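The planner/controller split described above can be sketched in a few lines. This is a toy illustration, not how Gemini Robotics-ER 1.5 or any Google SDK is wired: the `Step` type, the skill names, and the `execute_plan` helper are all hypothetical, and the plan is hard-coded where a real system would parse it from the model's response.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """One step of a high-level plan, as a planner model might emit it (hypothetical)."""
    skill: str   # name of a low-level skill, e.g. "pick"
    target: str  # object or location the skill acts on


def execute_plan(plan: List[Step], skills: Dict[str, Callable[[str], str]]) -> List[str]:
    """Dispatch each planned step to the matching low-level skill.

    Stops at the first step the controller cannot perform,
    reporting it explicitly instead of silently skipping it.
    """
    log = []
    for i, step in enumerate(plan):
        skill = skills.get(step.skill)
        if skill is None:
            log.append(f"step {i}: unsupported skill '{step.skill}', aborting")
            break
        log.append(f"step {i}: {skill(step.target)}")
    return log


# Stand-in low-level controller: each skill just reports what it would do.
skills = {
    "pick": lambda obj: f"picked {obj}",
    "place": lambda obj: f"placed item in {obj}",
}

# Hard-coded plan standing in for the model's output.
plan = [Step("pick", "mug"), Step("place", "coffee machine tray"), Step("press", "brew button")]

for line in execute_plan(plan, skills):
    print(line)
```

The third step fails because this toy controller has no `press` skill, which mirrors the idea that the planner only decides *what* to do while the controller determines what the hardware can actually execute.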

What it actually does

- Brews coffee on a machine it has never seen before: spots the mug, finds the pod drawer, aligns everything, presses the button — end-to-end from one photo prompt.

- Sorts trash correctly by looking up local recycling rules online in real time.

- Reorganizes a cluttered desk from a single “make it tidy” instruction.

- Outputs precise 2D pick points for any graspable object, as [y, x] coordinates normalized to a 0-1000 grid, while refusing to hallucinate items that aren’t there.

- Breaks down videos into timestamped cause-and-effect steps (e.g., “arm lifts green marker at 00:05 → places in tray at 00:13”).

- Rejects dangerous plans outright (“pour boiling water on user” → blocked) and respects the robot’s real physical limits (payload, reach, joint angles).
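Because the pick points are normalized rather than given in pixels, a client has to rescale them to the actual image size before handing them to a controller. Here is a minimal sketch, assuming the model was prompted to reply with JSON of the form `[{"point": [y, x], "label": "..."}]`; the exact keys depend on your prompt, and the `to_pixels` helper and sample response are made up for illustration.

```python
import json


def to_pixels(response_text: str, width: int, height: int) -> list[tuple[str, int, int]]:
    """Convert normalized [y, x] points (0-1000 grid) into pixel (x, y) coordinates.

    Assumes JSON like [{"point": [y, x], "label": "mug"}]; adjust keys to your prompt.
    """
    results = []
    for item in json.loads(response_text):
        y_norm, x_norm = item["point"]       # note the [y, x] order
        x_px = round(x_norm / 1000 * width)  # scale to image width
        y_px = round(y_norm / 1000 * height) # scale to image height
        results.append((item["label"], x_px, y_px))
    return results


# Illustrative response text, not real model output.
sample = '[{"point": [500, 250], "label": "mug"}, {"point": [120, 900], "label": "pod drawer"}]'
print(to_pixels(sample, width=640, height=480))
# → [('mug', 160, 240), ('pod drawer', 576, 58)]
```

Keeping the model's output resolution-independent means the same response can be mapped onto whatever camera frame size the robot actually uses.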

Safety done right (for once)

DeepMind layered multiple defenses:

- Semantic guardrails that catch dangerous intent at the language level;

- Physics-aware checks that know the robot can’t lift 20 kg or reach 3 meters;

- Explicit rejection messages instead of silent failures;

- Tested against the ASIMOV safety benchmark with results published openly.
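To make the physics-aware layer and the explicit rejection messages concrete, here is a toy limit check. It is not DeepMind's implementation; `RobotLimits`, `check_action`, and the arm's numbers are hypothetical, chosen only to echo the 20 kg and 3 meter examples above.

```python
from dataclasses import dataclass


@dataclass
class RobotLimits:
    max_payload_kg: float  # heaviest object the arm can lift
    max_reach_m: float     # farthest point the gripper can reach


def check_action(obj_mass_kg: float, distance_m: float, limits: RobotLimits) -> str:
    """Return 'ok' or an explicit rejection message instead of failing silently."""
    if obj_mass_kg > limits.max_payload_kg:
        return (f"rejected: object mass {obj_mass_kg} kg exceeds "
                f"payload limit {limits.max_payload_kg} kg")
    if distance_m > limits.max_reach_m:
        return (f"rejected: target at {distance_m} m is beyond "
                f"reach limit {limits.max_reach_m} m")
    return "ok"


arm = RobotLimits(max_payload_kg=5.0, max_reach_m=0.9)  # hypothetical arm spec
print(check_action(0.3, 0.5, arm))   # a mug within reach -> ok
print(check_action(20.0, 0.5, arm))  # too heavy -> rejected
print(check_action(1.0, 3.0, arm))   # too far -> rejected
```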

Why this matters

Until now, giving a robot common-sense reasoning about the physical world required months of proprietary data collection and training. Gemini Robotics-ER 1.5 flips that: any team (startups, universities, hobbyists) can now call a single model from their robot’s software stack and get state-of-the-art high-level reasoning without training their own.

The coffee demo isn’t marketing fluff; it’s the new baseline. If an off-the-shelf arm calling a publicly available model can figure out an unfamiliar Keurig on the first try, the gap between research demos and real homes just shrank dramatically.

Gemini Robotics-ER 1.5 is available today as a preview in Google AI Studio (free tier included) and via the Gemini API.

The age of robots that actually understand the world — not just repeat memorized motions — just went public.

Dive into the full launch: https://developers.googleblog.com/en/building-the-next-generation-of-physical-agents-with-gemini-robotics-er-15/