Forget massive GPU clusters drawing megawatts of electricity. In a laboratory at the University of California, Los Angeles, researchers have built and experimentally demonstrated a system that generates complex, high-quality color images using nothing but coherent light, passive optical elements, and a single camera sensor. The heavy lifting that normally requires billions of multiply-accumulate operations on silicon is instead carried out by the physics of light propagation.

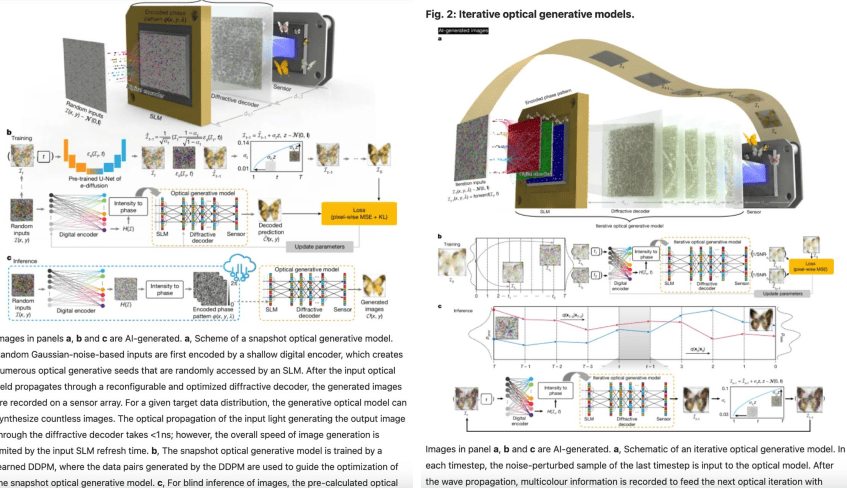

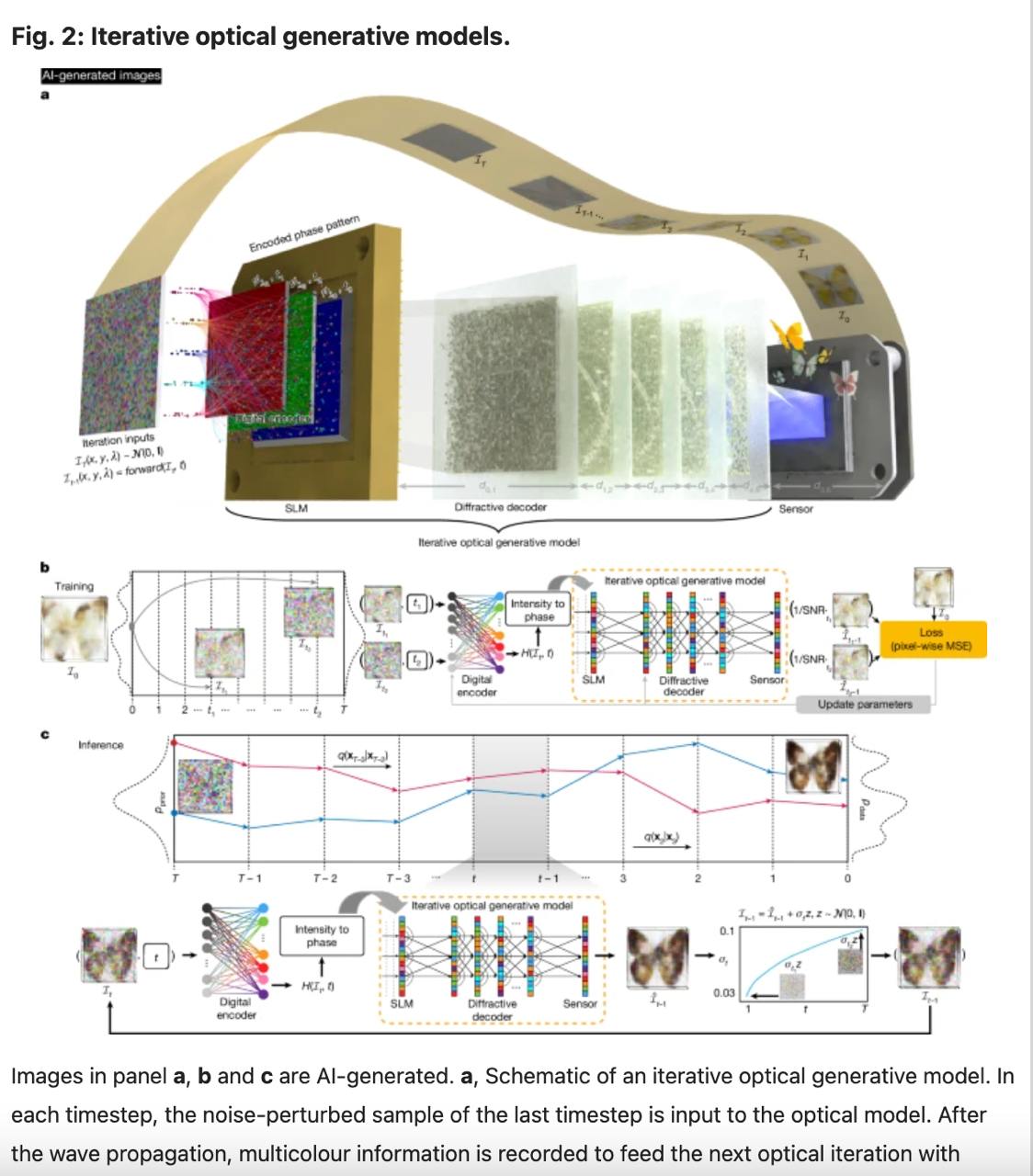

The core invention is called the Optical Generative Model (OGM): a fully analog, diffraction-based architecture that turns random noise into recognizable pictures in one or a few light-propagation steps.

How the Magic Happens

The pipeline has only three main stages:

- A lightweight digital phase encoder (a shallow neural network with ~580 million trainable parameters) receives pure Gaussian noise and outputs a 2D phase pattern φ(x,y);

- This phase pattern is displayed on a high-resolution spatial light modulator (SLM) — essentially a programmable liquid-crystal screen that can retard different parts of an incoming laser beam by precise fractions of a wavelength;

- Collimated laser light passes through the SLM, then free-space propagates through a trained diffractive optical decoder (a stack of 3D-printed phase masks or passive diffractive layers). After a few centimeters of propagation, the light intensity distribution that lands on an ordinary color CMOS sensor is the final generated image.
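
The three stages above can be sketched numerically. This is a minimal illustration, not the researchers' implementation: `toy_phase_encoder` is a hypothetical stand-in for the trained digital encoder, the decoder layers are untrained random phase masks, and free-space propagation uses the standard angular spectrum method. The grid size, the 2 cm layer spacing, and the choice of two decoder layers are assumptions picked only to keep the example small.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_pitch, distance):
    """Propagate a square complex field through free space (angular spectrum method)."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pixel_pitch)            # spatial frequencies in 1/m
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))   # longitudinal wavenumber
    # Propagating components get a pure phase delay; evanescent ones are dropped.
    transfer = np.exp(1j * kz * distance) * (arg > 0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def toy_phase_encoder(noise):
    """Hypothetical stand-in for the digital encoder: maps noise to phases in (0, 2*pi)."""
    return np.pi * (np.tanh(noise) + 1.0)

def optical_forward(phase, decoder_phases, wavelength, pixel_pitch, spacing):
    """SLM phase pattern -> diffractive layers -> intensity on the sensor."""
    field = np.exp(1j * phase)                       # phase-only SLM, unit-amplitude beam
    for layer in decoder_phases:
        field = angular_spectrum_propagate(field, wavelength, pixel_pitch, spacing)
        field = field * np.exp(1j * layer)           # passive phase mask
    field = angular_spectrum_propagate(field, wavelength, pixel_pitch, spacing)
    return np.abs(field) ** 2                        # the sensor records intensity only

rng = np.random.default_rng(0)
n = 64                                               # assumed toy grid size
noise = rng.standard_normal((n, n))                  # stage 1 input: Gaussian noise
decoder = [rng.uniform(0, 2 * np.pi, (n, n)) for _ in range(2)]  # untrained masks
image = optical_forward(toy_phase_encoder(noise), decoder,
                        wavelength=520e-9, pixel_pitch=4e-6, spacing=0.02)
```

With random masks the output is just speckle; in the real system the encoder and decoder are trained jointly so that the intensity pattern forms an image. Because every element here only changes phase, the total intensity on the sensor equals the total intensity leaving the SLM.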

No active electronics sit between the SLM and the sensor.

The “neural network” is literally built into the physical structure of the diffractive layers that shape the light.

The team demonstrated two variants:

- Snapshot OGM: a single forward pass of light produces the final image in <1 nanosecond of optical computation (limited only by the speed of light over ~10 cm);

- Iterative OGM: the current sensor image is fed back digitally, slightly denoised, re-encoded onto the SLM, and sent through the same diffractive decoder again. This mirrors the iterative refinement of digital diffusion, with each optical pass running at the speed of light.
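
The feedback loop of the iterative variant can be sketched as follows. Everything here is a toy assumption made for illustration: `toy_encode`, `toy_denoise`, and `toy_optical_step` are hypothetical placeholders (a tanh squashing, a 3×3 box blur, and a single far-field Fourier transform), not the trained components of the actual system. Only the control flow reflects the description above.

```python
import numpy as np

def iterative_generate(optical_step, encode, denoise, noise, num_steps):
    """Run num_steps optical passes: encode -> optics -> sensor -> denoise -> re-encode."""
    image = optical_step(encode(noise))              # first pass starts from pure noise
    for _ in range(num_steps - 1):
        image = optical_step(encode(denoise(image))) # feed the sensor image back in
    return image

def toy_encode(x):
    """Hypothetical encoder: squashes any real array into phases in (0, 2*pi)."""
    return np.pi * (np.tanh(x) + 1.0)

def toy_denoise(image):
    """Hypothetical denoiser: 3x3 box blur with wrap-around borders."""
    acc = np.zeros_like(image)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            acc += np.roll(image, (dy, dx), axis=(0, 1))
    return acc / 9.0

def toy_optical_step(phase):
    """Toy optics: far-field (Fraunhofer) intensity of a phase-only field."""
    return np.abs(np.fft.fft2(np.exp(1j * phase), norm="ortho")) ** 2

rng = np.random.default_rng(0)
result = iterative_generate(toy_optical_step, toy_encode, toy_denoise,
                            rng.standard_normal((32, 32)), num_steps=5)
```

The point of the structure is that the same fixed optical decoder is reused on every pass; only the digital encode and denoise steps touch electronics between passes.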

What They Actually Generated in the Lab

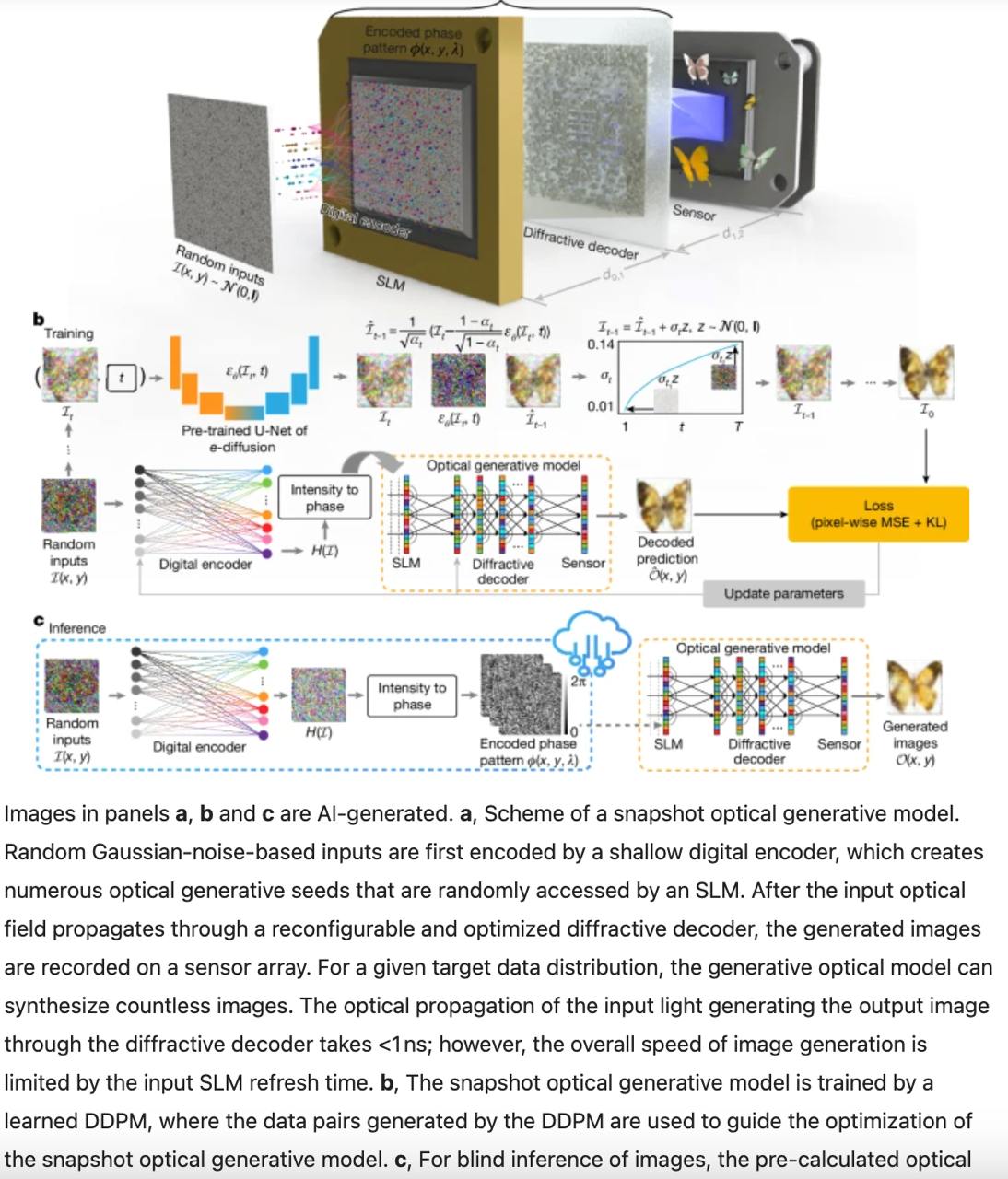

Using ordinary visible lasers (red 638 nm, green 520 nm, blue 450 nm combined through beam splitters), the researchers produced:

- MNIST handwritten digits with FID 131 (comparable to early digital diffusion models);

- Fashion-MNIST items (shoes, bags, clothing) with FID 180;

- Celebrity faces from a small CelebA subset;

- Realistic colored butterflies;

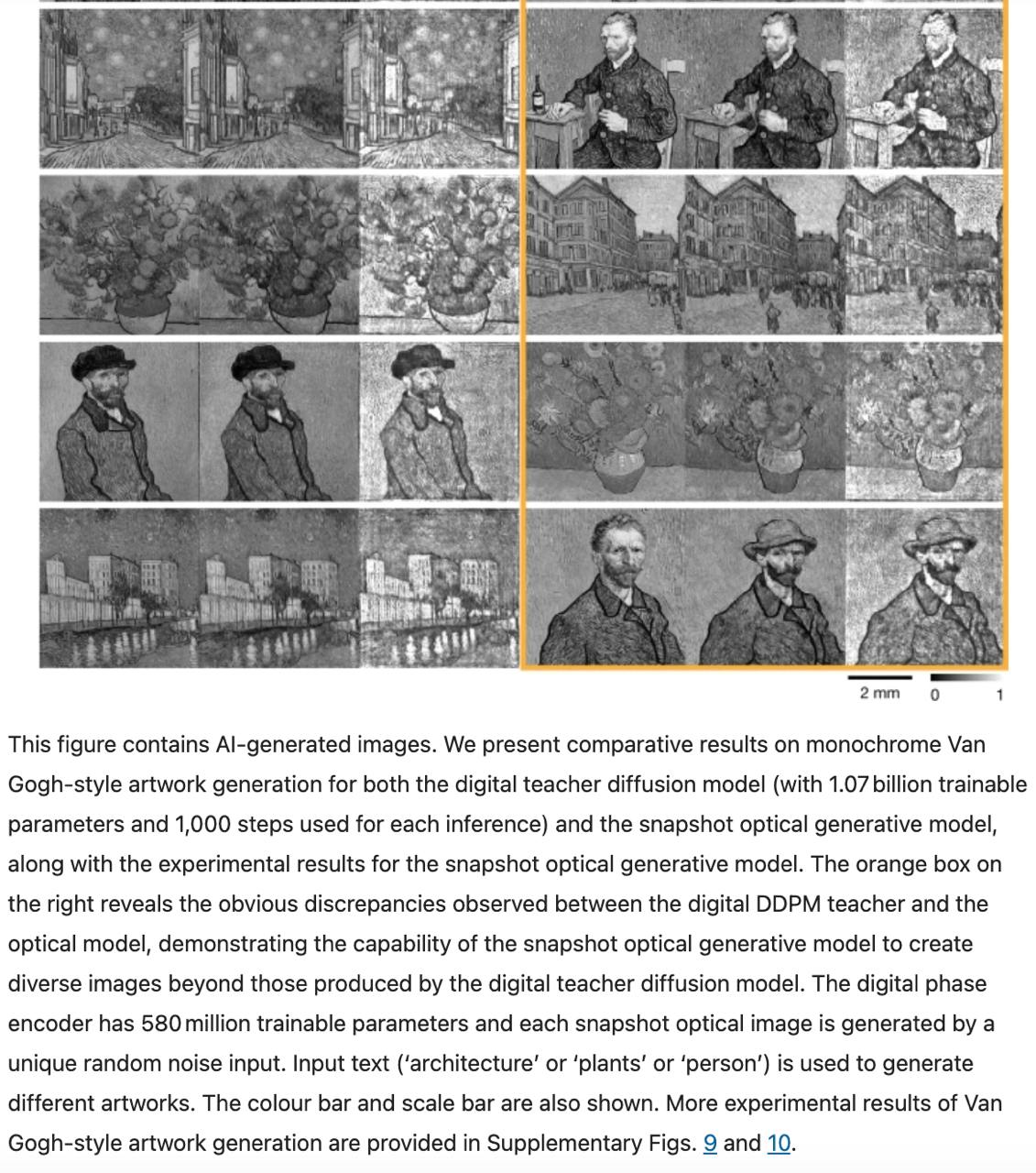

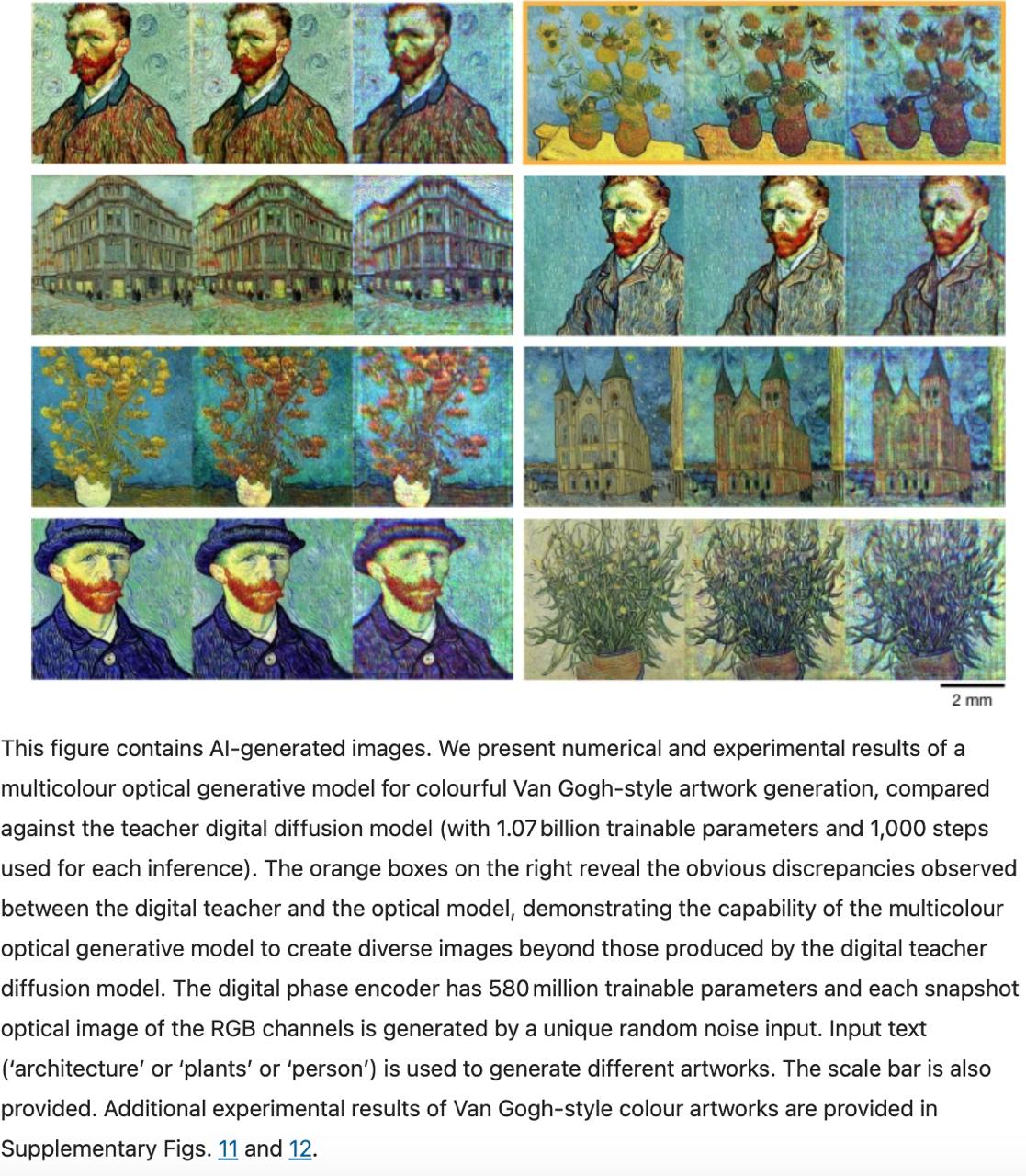

- Full-color artworks in Van Gogh’s swirling post-impressionist style when conditioned on simple text prompts like “sunflowers,” “church,” or “self-portrait”.

All of these were captured directly by the camera sensor — no post-processing beyond basic white-balance. The physical setup fits on a standard optical breadboard roughly 40 × 60 cm.
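
For readers unfamiliar with the FID numbers quoted above: the Fréchet Inception Distance compares feature statistics of real and generated images, and lower is better. The standard definition is given below; which feature extractor and sample sizes were used for the figures in this article is not stated here, so treat the symbols as the generic ones.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

Here $\mu_r, \Sigma_r$ are the mean and covariance of the features of real images and $\mu_g, \Sigma_g$ those of generated images. A score of 0 means the two sets have identical feature statistics.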

Why This Changes Everything

Energy & Speed

A single RTX 4090 GPU generating one 512×512 image at 50 diffusion steps consumes roughly 10–15 joules and takes ~1–3 seconds. The optical system uses ~50 mW of laser power and finishes the equivalent computation in the time light travels 30 cm, roughly a nanosecond. That makes it about 1 billion times more energy-efficient per image and millions of times faster, counting only the optical core and not the digital encoder, the SLM, or the sensor readout.
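
The arithmetic behind this comparison can be checked directly. The sketch below takes the figures quoted above at face value and counts only the laser energy delivered during a single transit of the optical path. It ignores the digital encoder, SLM refresh, sensor exposure and readout, and the laser's wall-plug efficiency, so it is an idealized upper bound on the advantage, not a system-level measurement. Variable names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

# Figures quoted in the text, taken at face value.
laser_power_w = 50e-3          # ~50 mW of laser power
path_length_m = 0.30           # light travels ~30 cm
gpu_energy_j = (10.0, 15.0)    # quoted GPU energy per image (low, high)
gpu_time_s = (1.0, 3.0)        # quoted GPU time per image (low, high)

# Time for light to cross the optical path, and laser energy spent in that time.
transit_time_s = path_length_m / SPEED_OF_LIGHT_M_S
optical_energy_j = laser_power_w * transit_time_s

# Ratios of the quoted GPU figures to the idealized optical-core figures.
energy_ratio = tuple(e / optical_energy_j for e in gpu_energy_j)
speed_ratio = tuple(t / transit_time_s for t in gpu_time_s)

print(f"transit time:   {transit_time_s:.2e} s")
print(f"optical energy: {optical_energy_j:.2e} J")
print(f"energy ratio:   {energy_ratio[0]:.1e} to {energy_ratio[1]:.1e}")
print(f"speed ratio:    {speed_ratio[0]:.1e} to {speed_ratio[1]:.1e}")
```

The transit time comes out at about 1 ns and the laser energy at about 5 × 10⁻¹¹ J, which puts both ratios well above the figures quoted in the text. The quoted numbers are therefore conservative relative to this laser-only bound; a real camera exposure lasts far longer than a nanosecond, which narrows the gap considerably.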

Edge AI Becomes Realistic

Because almost all computation is passive, future versions could run on milliwatt power budgets inside smartphones, AR glasses, drones, or even contact-lens displays. Real-time style transfer, infinite image continuation, or private on-device generation without ever touching a GPU suddenly look feasible.

Scalability Path

The diffractive decoder is parallel by nature: every pixel computes simultaneously. Resolution is currently limited by SLM pixel pitch (~4 µm), but 8K and 16K modulators are already commercially available, and metasurface alternatives are reaching sub-wavelength precision.

Current Limitations (They Are Real)

- Optical alignment is painful: sub-micron precision is required across the entire beam path;

- Phase-only modulation throws away amplitude information, forcing the system to be creative in how it encodes data;

- Laser speckle and sensor noise still degrade fidelity compared to the best digital models;

- Color separation requires three separate laser paths and perfect overlay on the sensor;

- Conditioning on complex text prompts is still primitive (the current encoder is tiny compared to CLIP).

Yet even with these constraints, the experimental FID scores are within striking distance of 2022-era digital diffusion models that used 10–100× more parameters and orders of magnitude more energy.

The Bigger Picture

This is not just another “optical neural network” paper. For the first time, a physical optical system trained end-to-end with backpropagation (via differentiable wave-propagation simulation) has crossed the threshold from toy proofs-of-concept into generating recognizable, aesthetically pleasing color images that rival early Stable Diffusion outputs — all while consuming less power than a smartwatch display.

If the roadmap holds, within 5–10 years we may see commercial optical generative chips the size of a postage stamp capable of producing 4K video frames at thousands per second, powered only by ambient light or a coin cell.

The GPU era gave us generative AI.

The coming optical era may make it ubiquitous, invisible, and essentially free.