In the ever-expanding world of artificial intelligence, where models like GPT-4 and Gemini devour terabytes of data, a new benchmark is turning heads - and upending assumptions about language dominance.

Dubbed ONERULER, this multilingual test probes how large language models (LLMs) grapple with extraordinarily long texts across 26 diverse languages.

Developed by researchers from the University of Maryland, Microsoft, and the University of Massachusetts Amherst, ONERULER isn't about quick translations or simple queries. Instead, it buries crucial information deep within 8,000 to 128,000 tokens of synthetic "haystack" text - at the upper end, roughly the length of an entire novel - and challenges models to retrieve and aggregate it accurately.

The results? A surprising revelation: English, the lingua franca of AI training, isn't the gold standard for precision. Polish takes the crown.

The Making of ONERULER: A Multilingual Stress Test

ONERULER builds on the English-centric RULER benchmark, extending its seven synthetic tasks to a global stage. These tasks blend retrieval (finding a "needle" in the haystack) with aggregation (summarizing or reasoning across scattered facts), including clever twists like "nonexistent needles" to test hallucination risks.
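
To make the needle-in-a-haystack idea concrete, here is a minimal sketch of how such a test item could be assembled and scored. It is not the benchmark's actual code: the function names, the filler sentences, the use of whitespace-separated words as a stand-in for tokens, and the convention that a model should answer "none" when no needle exists are all assumptions made for illustration.

```python
import random

# Hypothetical filler and needle text, chosen only for illustration.
FILLER = [
    "The grass is green.",
    "The sky is blue.",
    "The sun is bright today.",
]
NEEDLE = "The special magic number for the city is 7421."


def build_haystack(filler, needle, target_words, depth, seed=0):
    """Repeat filler sentences until a word budget is met, then insert the
    needle at a relative depth between 0.0 (start) and 1.0 (end).

    Passing needle=None builds a haystack with no answer in it, which is
    the "nonexistent needle" case. Words approximate tokens here (assumption).
    """
    rng = random.Random(seed)
    sentences = []
    n_words = 0
    while n_words < target_words:
        sentence = rng.choice(filler)
        sentences.append(sentence)
        n_words += len(sentence.split())
    if needle is not None:
        sentences.insert(int(depth * len(sentences)), needle)
    return " ".join(sentences)


def score(answer, expected):
    """Return True if the answer is correct.

    expected=None means the needle was absent, so only "none" counts.
    """
    normalized = answer.strip().lower()
    if expected is None:
        return normalized == "none"
    return expected.lower() in normalized
```

Scaling `target_words` upward mimics longer contexts, and varying `depth` shows whether a model misses facts buried in the middle. A model that returns a number for a haystack built with `needle=None` would be scored as wrong, which is how an absent needle can expose hallucination.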

Researchers Yekyung Kim, Jenna Russell, Marzena Karpinska, and Mohit Iyyer crafted the core in English before enlisting native speakers for translations. They hired 17 Upwork annotators for 18 languages and six volunteers for the rest, paying $25 per language to ensure cultural and linguistic fidelity.

The 26 languages span continents and complexity levels: high-resource giants like English, Spanish, and Chinese rub shoulders with low-resource tongues such as Swahili, Tamil, Hindi, and Sotho. Contexts scale from 8,000 to 128,000 tokens, simulating real-world scenarios like legal reviews or historical analyses.

Models tested include heavyweights like OpenAI's o3-mini-high, Google's Gemini 1.5 Flash, and Meta's Llama 3.3 (70B). As context lengthens, the benchmark exposes raw capabilities - and biases.

Surprising Standouts: Polish Leads the Pack

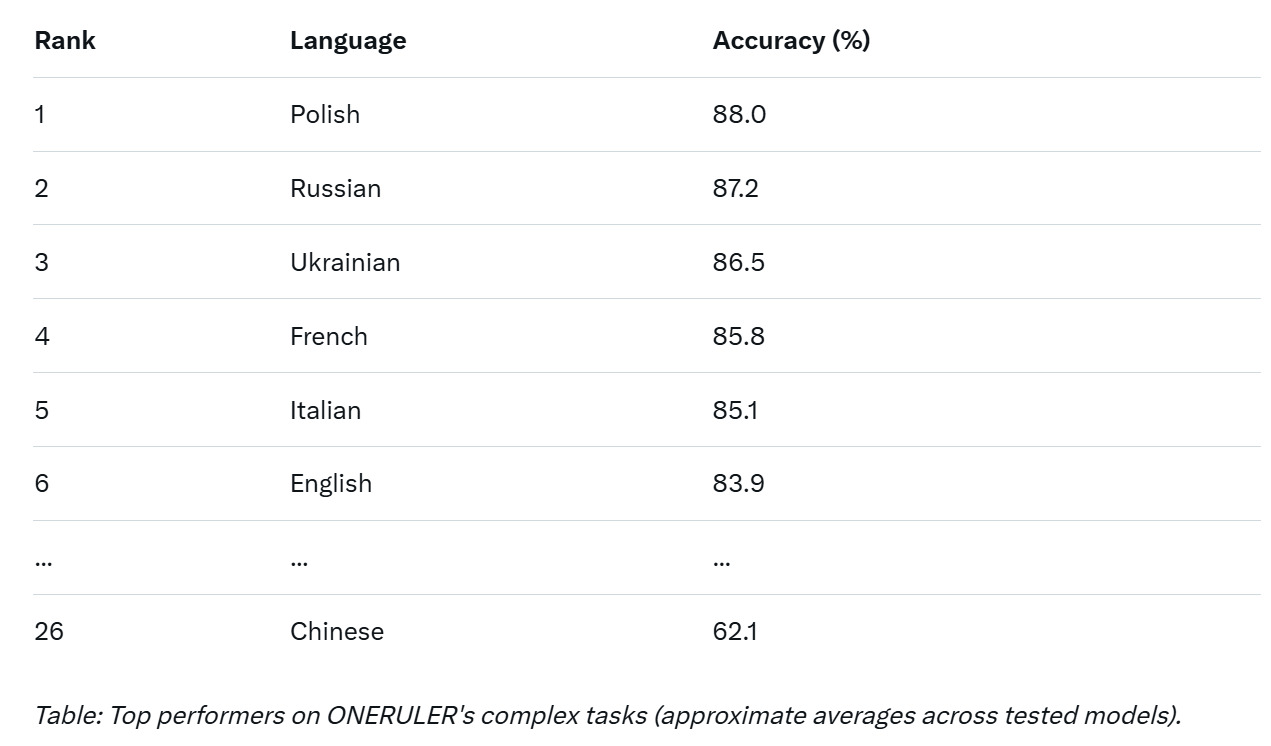

The findings shatter expectations. On the most demanding tasks, Polish prompts yield an impressive 88% accuracy, edging out competitors and claiming the top spot. This Slavic language, often stereotyped as notoriously complex with its consonant clusters and grammatical intricacies, proves a boon for AI precision. Researchers speculate that Polish's morphological richness - packed with inflections that convey nuance compactly - helps models navigate dense, long-form text without losing the thread.

Trailing closely are Russian and Ukrainian (both around 86-87%), followed by French and Italian (85-86%). English? It clocks in at sixth place with 83.9% - solid, but humbled by its continental rivals. Chinese lands near the bottom at roughly 62.1%, hampered by tokenization quirks that inflate its "haystack" size. Low-resource languages like Swahili and Tamil fare worst overall, with accuracies dipping below 70% on ultra-long contexts, highlighting a stark resource divide.
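
For a sense of scale, the gaps relative to English can be worked out from the three exact figures quoted here. The snippet below is only arithmetic on the article's numbers taken at face value; the helper name `gap_to` is hypothetical, and languages given only as ranges are left out rather than guessed.

```python
# Accuracy figures (percent) as quoted in the article.
quoted = {"Polish": 88.0, "English": 83.9, "Chinese": 62.1}


def gap_to(baseline, scores):
    """Percentage-point difference between each language and the baseline."""
    base = scores[baseline]
    return {
        lang: round(acc - base, 1)
        for lang, acc in scores.items()
        if lang != baseline
    }


gaps = gap_to("English", quoted)
print(gaps)  # {'Polish': 4.1, 'Chinese': -21.8}
```

On these numbers, Polish's lead over English is about four points, while the drop from English to Chinese is more than five times larger.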

Cross-lingual experiments add another layer: When instructions and context mismatch languages, performance swings by up to 20%. A Polish prompt with English text boosts retrieval; the reverse tanks it. This volatility underscores how instruction language acts as a "ruler" for model behavior.
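
A cross-lingual run of this kind can be pictured as a grid of instruction language against context language, with accuracy tallied per cell. The sketch below is an illustration under stated assumptions, not the researchers' setup: the instruction strings, the function names, and the record format `(instruction_lang, context_lang, correct)` are all hypothetical.

```python
from collections import defaultdict
from itertools import product

# Hypothetical instruction templates; the Polish line says the same as the English one.
INSTRUCTIONS = {
    "en": "Read the text below and answer the question.",
    "pl": "Przeczytaj poniższy tekst i odpowiedz na pytanie.",
}


def build_prompt(instruction_lang, context, question):
    """Combine an instruction in one language with a context that may be in another."""
    return f"{INSTRUCTIONS[instruction_lang]}\n\n{context}\n\n{question}"


def language_pairs(langs):
    """Every (instruction language, context language) combination, matched or not."""
    return list(product(langs, repeat=2))


def accuracy_by_pair(records):
    """Fraction of correct answers for each (instruction, context) pair.

    records is an iterable of (instruction_lang, context_lang, correct) tuples.
    """
    totals = defaultdict(lambda: [0, 0])  # pair -> [hits, attempts]
    for instr, ctx, correct in records:
        totals[(instr, ctx)][0] += int(correct)
        totals[(instr, ctx)][1] += 1
    return {pair: hits / n for pair, (hits, n) in totals.items()}
```

Comparing the cell for `("pl", "en")` against `("en", "pl")` in the output of `accuracy_by_pair` is one way to see the kind of asymmetry described above, since the two cells share the same pair of languages and differ only in which one carries the instruction.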

Implications: Rethinking AI's Linguistic Backbone

ONERULER's release on March 3, 2025, via arXiv and GitHub, arrives at a pivotal moment. As LLMs scale to million-token windows, long-context mastery is key for applications like automated research or global compliance checks.

Yet, the benchmark reveals entrenched inequities: High-resource languages (those with abundant training data) dominate, but even among them, English's hegemony crumbles. Why Polish? Its training data, though smaller than English's, may encode superior syntactic patterns for disambiguation - a hypothesis ripe for deeper dives.

For developers, the takeaway is clear: Multilingual fine-tuning must prioritize diverse prompts, not just data volume. Low-resource languages' struggles signal an urgent need for inclusive datasets; without intervention, AI risks amplifying global divides.

Ethically, it prompts reflection: If Polish excels, could "underdog" languages like Hindi or Sotho harbor untapped strengths? Future iterations of ONERULER, now open-source, invite global collaboration to level the field.

In a field obsessed with English efficiency, ONERULER reminds us that one ruler doesn't fit all. By measuring models in their full linguistic spectrum, we're not just benchmarking AI - we're charting a more equitable path forward. Polish may have won this round, but the real victory lies in the diverse voices it amplifies.