Ten years ago, few believed a GPU company could rule the world. Today, NVIDIA is the most valuable public company on Earth — and the architect of the AI era.

On June 18, 2024, NVIDIA briefly surpassed Microsoft to claim the title of world’s most valuable company, with a market cap of $3.34 trillion. By November 2025, it holds steady above $3.6 trillion, more than the annual GDP of all but a handful of countries. This isn’t luck. It’s the result of a decade-long bet that paid off in ways even CEO Jensen Huang couldn’t have scripted.

The “Pickaxe Seller” of the AI Gold Rush

In 2012, AlexNet — a deep learning model trained on NVIDIA CUDA-enabled GPUs — crushed the ImageNet competition, proving that parallel computing could accelerate AI training by orders of magnitude. While others saw gaming chips, Huang saw the future of computing.

> Fact: Training GPT-3 (175B parameters) on a single V100 GPU would take an estimated 355 years. On a cluster of 1,024 NVIDIA A100 GPUs? About 34 days.
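The 34-day figure can be roughly reproduced with back-of-envelope arithmetic. The sketch below is only an illustration: the token count (300B), the cluster size (1,024 GPUs), the sustained per-GPU throughput (140 teraFLOP/s) and the factor of 8 FLOPs per parameter per token are all assumptions that do not come from this article, and the function name `training_days` is hypothetical.

```python
# Back-of-envelope training-time estimate (all inputs below are assumptions).
SECONDS_PER_DAY = 86_400


def training_days(params, tokens, num_gpus, flops_per_gpu, flops_per_param_token=8):
    """Estimate wall-clock days to train a dense transformer.

    flops_per_param_token=8 is an assumed rule of thumb for forward and
    backward passes with activation recomputation; 6 is a common choice
    when activations are not recomputed.
    """
    total_flops = flops_per_param_token * params * tokens
    cluster_flops_per_second = num_gpus * flops_per_gpu
    return total_flops / cluster_flops_per_second / SECONDS_PER_DAY


# Assumed GPT-3-scale inputs: 175B parameters, 300B training tokens,
# 1,024 A100 GPUs each sustaining 140 teraFLOP/s.
gpt3_days = training_days(175e9, 300e9, 1024, 140e12)
print(f"{gpt3_days:.1f} days")  # prints "33.9 days"
```

Under these assumptions the estimate lands close to the quoted 34 days. Halving the GPU count or the sustained throughput doubles the result, which is why cluster scale matters as much as chip speed.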

Today:

- 99% of AI supercomputers run on NVIDIA GPUs (HPCwire 2025 rankings);

- Over 4 million developers use CUDA;

- 80%+ market share in AI accelerators (Omdia, Q3 2025).

Huang’s Three Masterstrokes

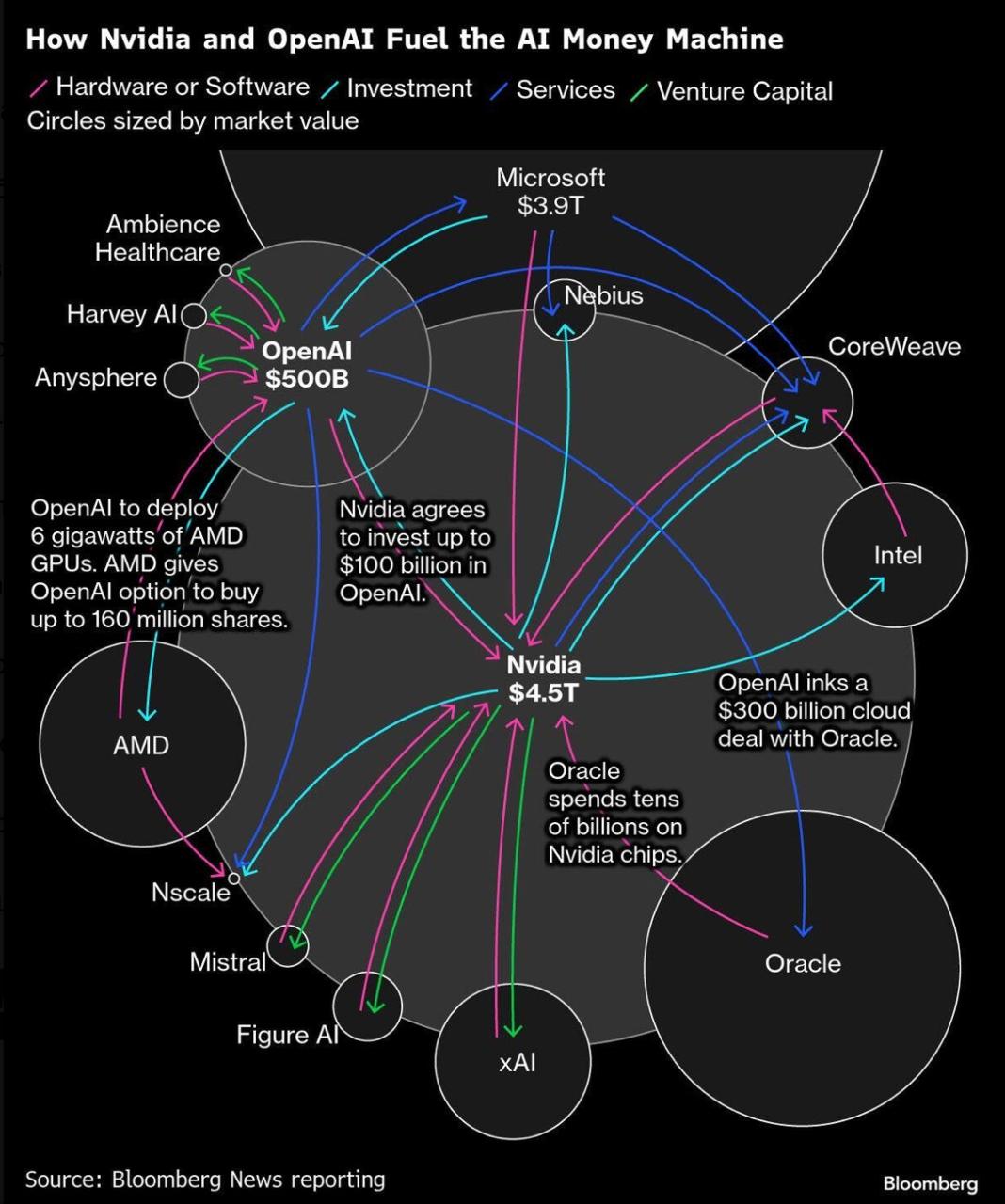

1. Strategic Alliances at Warp Speed

When OpenAI needed compute for GPT-4 in 2023, NVIDIA didn’t just sell chips — it co-engineered the cluster. The result? DGX Cloud, a joint service with Microsoft Azure, AWS, and Google Cloud, launched within 90 days.

> Example: xAI’s Colossus supercluster — 100,000 H100 GPUs — was deployed in 19 days using NVIDIA’s pre-configured racks and InfiniBand networking. Elon Musk called it “the fastest AI training system ever built.”

2. R&D on Steroids

NVIDIA now spends more than $10 billion annually on R&D.

The Blackwell platform (B200 GPU) delivers:

- 4 petaflops of AI performance;

- up to 30x faster LLM inference than H100 (NVIDIA’s figure for the GB200 NVL72 system);

- 25x better energy efficiency.

> Fact: A single Blackwell GB200 “superchip” (GPU + CPU) consumes 1,200 watts but replaces 60 traditional servers — slashing data center power by 95%.
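Taking the figures above at face value, a quick calculation shows what they imply about the servers being replaced. This is only a consistency check, not a measurement: the helper name `implied_legacy_power` is hypothetical, and the resulting per-server wattage is derived here rather than stated in the article.

```python
def implied_legacy_power(superchip_watts, servers_replaced, reduction_pct):
    """Return (total_legacy_watts, watts_per_server) implied by a claimed reduction.

    If the new system draws superchip_watts and that is (100 - reduction_pct)%
    of the old draw, the old total follows by simple proportion.
    """
    remaining_pct = 100 - reduction_pct
    total_legacy_watts = superchip_watts * 100 / remaining_pct
    return total_legacy_watts, total_legacy_watts / servers_replaced


# Figures quoted above: 1,200 W superchip, 60 servers replaced, 95% power cut.
total_w, per_server_w = implied_legacy_power(1200, 60, 95)
print(total_w, per_server_w)  # prints "24000.0 400.0"
```

In other words, the 95% figure holds only if each replaced server draws about 400 W. Heavier or lighter servers would shift the savings accordingly.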

3. Manufacturing Mastery via TSMC

Huang locked in exclusive access to TSMC’s most advanced nodes:

- 4nm for Hopper (H100);

- 4NP (a 4nm-class node) for Blackwell (2025 volume);

- 3nm reserved for Rubin (2026).

> Supply Chain Coup: When global chip shortages hit in 2021–2023, NVIDIA secured 40% of TSMC’s CoWoS capacity, leaving rivals scrambling. AMD’s MI300X? Delayed 6 months. Intel’s Gaudi 3? Still in limited sampling.

The Ecosystem Moat: CUDA, cuDNN, and Beyond

AMD has great hardware. So does Intel. But no one touches NVIDIA’s software stack:

- CUDA: 15+ years of optimization

- TensorRT: Inference speedups up to 10x

- NVIDIA AI Enterprise: Certified for 95% of enterprise AI workloads

> Example: Meta’s Llama 3 70B model runs 40% faster on NVIDIA than on AMD ROCm — even with identical hardware. Developers won’t switch for marginal gains.

The Numbers Don’t Lie

Jensen Huang: The Visionary Who Didn’t Blink

In 2017, Huang told analysts:

> “The more you buy, the more you save.”

They laughed. Then data centers bought $100 billion worth of GPUs.

He didn’t just ride the AI wave — he built the ocean.

Legacy in Silicon

NVIDIA will enter history books as:

- The company that turned graphics into intelligence;

- The infrastructure backbone of the 21st century;

- The proof that one right bet, executed flawlessly, rewrites economies.

As Huang said at GTC 2025:

> “We are not in the GPU business. We are in the accelerated computing revolution.”

And the revolution has only just begun.