In a significant move to curb misleading content, YouTube has intensified its efforts to combat fake movie trailers created using artificial intelligence (AI).

The video-sharing platform has begun demonetizing channels that publish such content, targeting those that produce deceptive trailers designed to mimic official movie previews.

Among the first to face sanctions are Screen Trailers and Royal Trailer, two channels known for posting AI-generated trailers, including outlandish concepts like Titanic 2.

While these channels have lost their ability to earn ad revenue, they continue to upload AI-crafted trailers for highly anticipated films, raising concerns about the future of such content on the platform.

The Rise of AI-Generated Fake Trailers

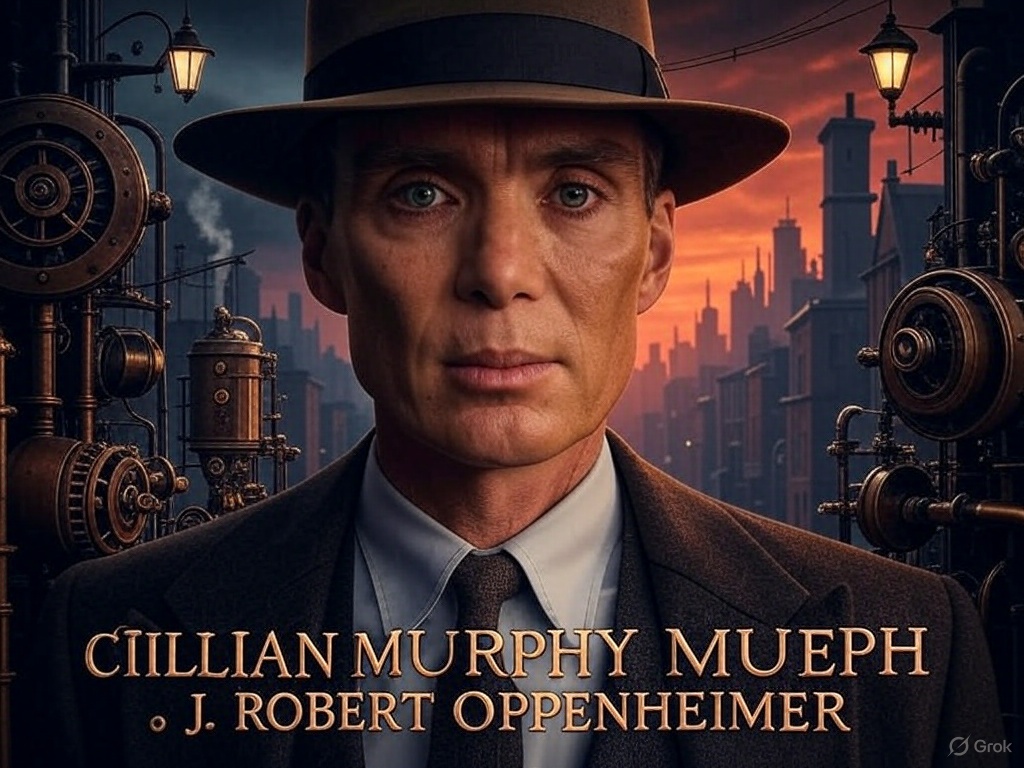

The advent of advanced AI video generation tools, such as OpenAI’s Sora and Google’s Veo, has made it easier for creators to produce highly convincing fake movie trailers. These trailers often blend clips from real films or TV shows with AI-generated imagery and voiceovers, creating content that can mislead viewers into believing they are official previews.

Channels like Screen Trailers and Royal Trailer, which are alternate accounts of previously demonetized channels Screen Culture and KH Studio, have amassed significant followings by capitalizing on this trend.

For instance, Screen Trailers recently posted a fake Titanic 2 trailer, while Royal Trailer uploaded an AI-generated Toy Story 5 concept that garnered over 144,000 views in just three days.

These trailers often imitate the visual style and tone of legitimate marketing materials, making it difficult for casual viewers to distinguish them from authentic content.

Some, like those from Screen Culture, closely mimic official trailers for upcoming films such as Superman or The Fantastic Four: First Steps, while others, like KH Studio’s creations, imagine entirely fictitious projects, such as a James Bond film starring Henry Cavill or a Squid Game season featuring Leonardo DiCaprio.

YouTube’s Response: Demonetization and Policy Enforcement

YouTube’s crackdown began following a Deadline investigation that exposed the scale and sophistication of AI-generated fake trailers on the platform. The investigation revealed that channels like Screen Culture, with 1.4 million subscribers, and KH Studio, with 724,000 subscribers, were generating millions of views and significant ad revenue by exploiting studio intellectual property (IP).

In March 2025, YouTube suspended both channels from its Partner Program, effectively cutting off their ability to monetize content.

More recently, the platform extended these sanctions to their alternate accounts, Screen Trailers (33,000 subscribers) and Royal Trailer (153,000 subscribers), for violating its monetization and misinformation policies.

YouTube’s monetization policies require creators to significantly transform borrowed material to make it their own and prohibit content that is “duplicative, repetitive, or created solely for the purpose of getting views.” Additionally, the platform’s misinformation policies ban content that has been “technically manipulated or doctored” in a way that misleads viewers.

YouTube stated that “Our enforcement decisions, including suspensions from the YouTube Partner Program, apply to all channels that may be owned or operated by the impacted creator.” The rule is meant to stop creators from evading sanctions by operating alternate accounts.

Studios’ Complicity and SAG-AFTRA’s Concerns

Surprisingly, some Hollywood studios have been complicit in monetizing these fake trailers rather than issuing copyright strikes to remove them. According to Deadline, studios like Warner Bros. Discovery, Sony Pictures, and Paramount have claimed ad revenue from fake trailers for films such as Superman, House of the Dragon, Spider-Man, and Gladiator II, opting for financial gain over protecting their IP.

This practice has raised ethical concerns, particularly from SAG-AFTRA, the actors’ union, which criticized the monetization of “unauthorized, unwanted, and subpar uses of human-centered IP” as a “race to the bottom” that prioritizes short-term profits over creative integrity.

SAG-AFTRA’s statement highlights broader concerns about the misuse of actors’ likenesses and voices in AI-generated content, likening it to the “fake Shemp” phenomenon, where creators use trickery to pass off new material as existing IP.

The union argues that such practices not only mislead audiences but also undermine the rights of actors and the value of authentic creative work.

Continued Content Uploads and the Road to a Potential Ban

Despite losing monetization privileges, channels like Screen Culture and its affiliates continue to upload AI-generated trailers. For example, Screen Culture recently posted a “Trailer 2 concept” for James Gunn’s upcoming Superman film, complete with AI-generated voiceovers and clips.

This persistence suggests that the loss of ad revenue has not deterred these creators, who may still benefit from increased subscriber counts or other indirect revenue streams.

However, YouTube’s ongoing enforcement actions signal a potential path toward stricter measures, including the complete removal of such content.

Under YouTube’s policies, a channel that accrues three copyright strikes within 90 days can be banned. Studios could use this mechanism to shut down offending accounts entirely.

Yet studios have enforced their copyrights inconsistently. Only about 10% of Screen Culture’s 2,700 videos have faced monetization claims, which points to a reluctance among some studios to take aggressive action, possibly because of the revenue these videos generate for them.

The Future of AI Content on YouTube

YouTube’s crackdown on AI-generated fake trailers reflects a broader push to address misleading and low-effort content on the platform, which boasts over 122 million daily active users. The move is likely to set a precedent for how AI-generated content is regulated, forcing creators to prioritize transparency and originality or risk losing monetization privileges.

As AI technology continues to evolve, the line between fan-made creativity and deceptive content will become increasingly blurred. While channels like Screen Trailers and Royal Trailer argue that their “concept trailers” are harmless creative explorations, the potential for viewer confusion and IP misuse has prompted YouTube to take a firm stance.

If these channels persist in uploading misleading content, they may face not only demonetization but also outright bans, signaling the end of an era for AI-generated fake trailers on the platform.

For now, YouTube’s actions demonstrate a commitment to protecting viewers and the film industry from being misled by AI-driven “slop.” As the platform continues to refine its policies, the days of fake trailers like Titanic 2 dominating search results may be numbered.