The idea of an "AI Manhattan Project" has been gaining traction at the highest levels in the U.S., evolving from a bold metaphor into a tangible vision.

But behind the grandiose comparisons lies a critical question: what would such a project look like in practice?

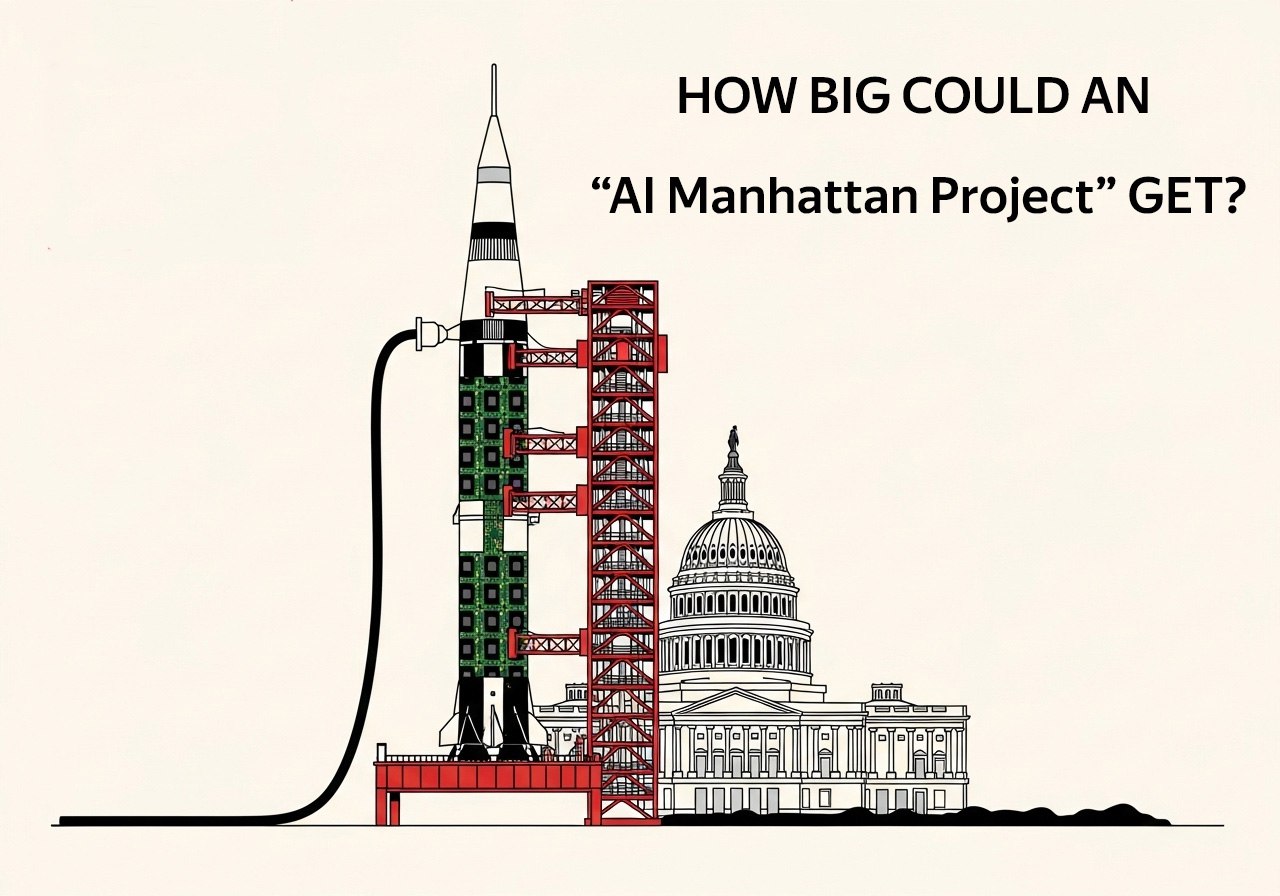

Analysts at Epoch AI, a nonprofit research institute that tracks AI development through trends in compute, data, and algorithms, have estimated how large such an effort could become if the U.S. government consolidated private-sector resources and invested a share of GDP comparable to the peak of the Apollo program.

A Computational Colossus

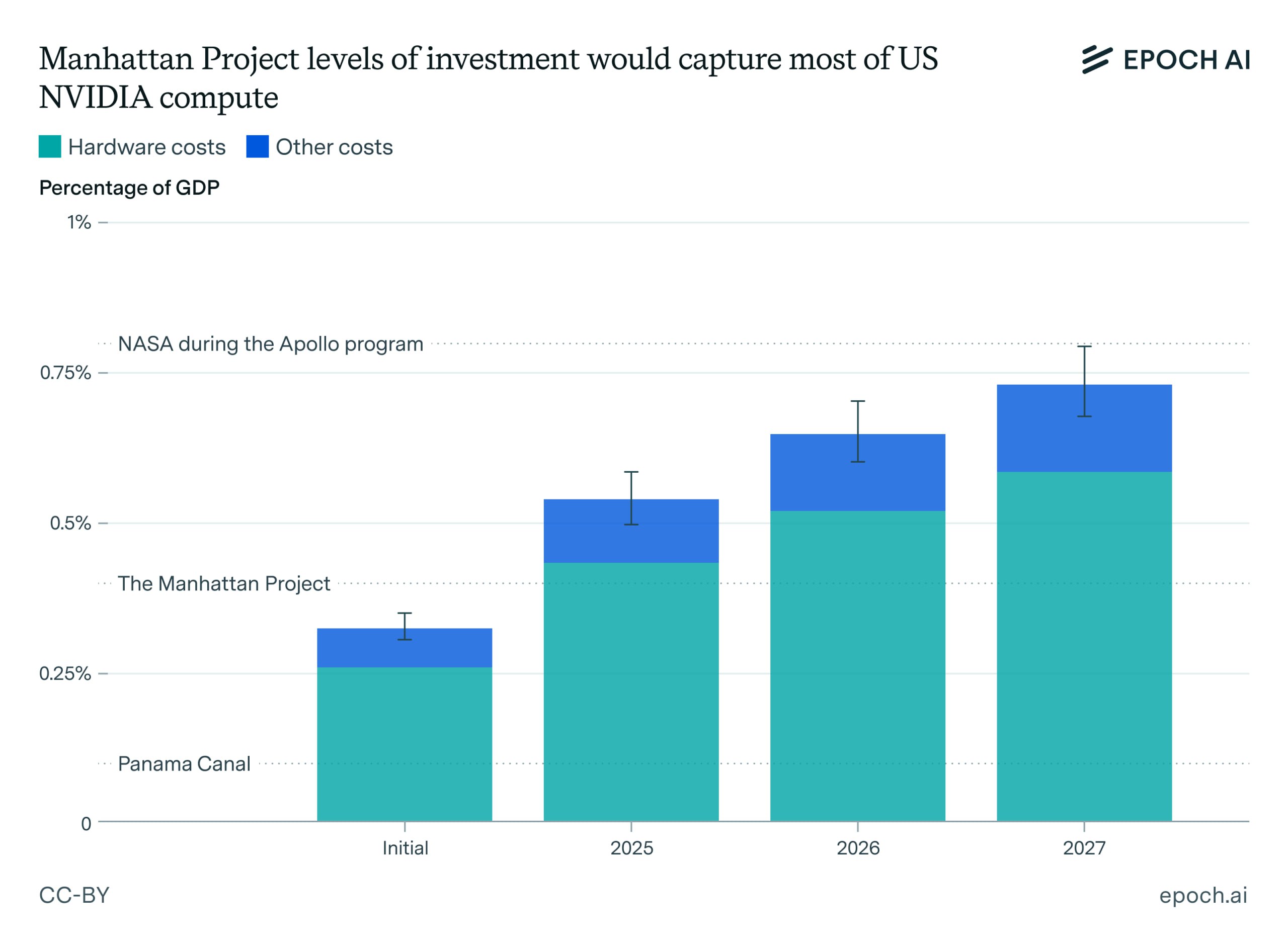

The projections are staggering. By the end of 2027, an AI Manhattan Project could enable a training run using approximately 2 × 10²⁹ FLOP of total compute. To put this in perspective, that's roughly 10,000 times the compute used to train GPT-4, a leap that, based on current trends, wasn't expected for several more years.

Such a project, funded at the level of the Apollo program (about 0.8% of U.S. GDP, or $244 billion in today’s dollars), could acquire and unify a cluster equivalent to 27 million NVIDIA H100 GPUs. This figure aligns with extrapolations of NVIDIA’s current U.S. revenue from chip sales, underscoring the feasibility of marshaling such resources with sufficient political will.
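The figures above can be cross-checked with simple division. The following is a minimal sketch that uses only the numbers quoted in this article; the function names are illustrative, and the per-GPU budget is a naive ratio that ignores operating costs, multi-year spending, and chip price changes, so it is not a reconstruction of Epoch AI's method.

```python
# Figures quoted in the article (Epoch AI estimates).
TRAINING_COMPUTE_FLOP = 2e29   # projected training run by end of 2027
GPT4_MULTIPLE = 10_000         # "roughly 10,000 times" GPT-4's training compute
BUDGET_USD = 244e9             # Apollo-scale funding in today's dollars
GDP_SHARE = 0.008              # 0.8% of U.S. GDP
H100_EQUIVALENTS = 27e6        # cluster size in H100-equivalent GPUs


def implied_gpt4_compute(total_flop: float, multiple: float) -> float:
    """GPT-4 training compute implied by the quoted multiple."""
    return total_flop / multiple


def implied_gdp(budget_usd: float, gdp_share: float) -> float:
    """U.S. GDP implied by the quoted budget and GDP share."""
    return budget_usd / gdp_share


def budget_per_gpu(budget_usd: float, gpu_count: float) -> float:
    """Naive budget per H100-equivalent (assumption: all money buys chips)."""
    return budget_usd / gpu_count


if __name__ == "__main__":
    gpt4 = implied_gpt4_compute(TRAINING_COMPUTE_FLOP, GPT4_MULTIPLE)
    gdp = implied_gdp(BUDGET_USD, GDP_SHARE)
    per_gpu = budget_per_gpu(BUDGET_USD, H100_EQUIVALENTS)
    print(f"Implied GPT-4 training compute: {gpt4:.1e} FLOP")
    print(f"Implied U.S. GDP: ${gdp / 1e12:.1f} trillion")
    print(f"Naive budget per H100-equivalent: ${per_gpu:,.0f}")
```

Under these inputs, the implied GPT-4 training compute is about 2 × 10²⁵ FLOP, the implied GDP is about $30.5 trillion, and the naive budget works out to roughly $9,000 per H100-equivalent.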

Can the Grid Handle It?

Powering 27 million GPUs would require approximately 7.4 gigawatts of electricity—more than the entire city of New York consumes. Surprisingly, this isn’t the main hurdle. Analysts note that the U.S. is already planning to add 8.8 gigawatts of capacity by 2027 through new natural gas power plants, many of which are earmarked for data centers. With legislative tools and coordination, the government could concentrate these resources in a single location, ensuring energy isn’t a bottleneck.
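A similar back-of-the-envelope check applies to the power figures. This sketch divides the quoted demand by the quoted GPU count and compares it with the planned capacity additions; the function names are illustrative, and the calculation assumes the 7.4 gigawatts covers the whole cluster, which the article does not spell out.

```python
# Figures quoted in the article (Epoch AI estimates).
POWER_DEMAND_W = 7.4e9          # 7.4 gigawatts to run the cluster
PLANNED_NEW_CAPACITY_W = 8.8e9  # 8.8 gigawatts of planned additions by 2027
H100_EQUIVALENTS = 27e6         # cluster size in H100-equivalent GPUs


def watts_per_gpu(power_w: float, gpu_count: float) -> float:
    """Average power per H100-equivalent implied by the quoted totals."""
    return power_w / gpu_count


def share_of_planned_capacity(power_w: float, capacity_w: float) -> float:
    """Fraction of the planned new capacity the cluster would consume."""
    return power_w / capacity_w


if __name__ == "__main__":
    per_gpu = watts_per_gpu(POWER_DEMAND_W, H100_EQUIVALENTS)
    share = share_of_planned_capacity(POWER_DEMAND_W, PLANNED_NEW_CAPACITY_W)
    print(f"Implied power per H100-equivalent: {per_gpu:.0f} W")
    print(f"Share of planned new capacity: {share:.0%}")
```

The result is about 274 W per H100-equivalent and about 84% of the planned new capacity. The per-GPU figure is well below the roughly 700 W rating of an H100 SXM module, which suggests the estimate counts newer, more efficient chips in H100-equivalent terms; that reading is an inference, not something the article states.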

The Challenges

Of course, the plan isn't without risks. Geopolitical tensions, particularly around Taiwan, could disrupt chip supplies, as the island produces a significant share of the world's advanced semiconductors. Scaling up compute by thousands of times also isn't as simple as flipping a switch: it requires time for iterative testing and engineering refinements. That second obstacle is primarily a technical constraint rather than a resource-based one.

A Leap Within Reach

Despite the challenges, Epoch AI's analysis suggests that with coordinated effort and investment, the U.S. could achieve a transformative breakthrough in AI far sooner than anticipated. This isn't just a theoretical exercise; it's a calculated possibility that could redefine the global AI race. The question now is whether the political and industrial will can match the vision.