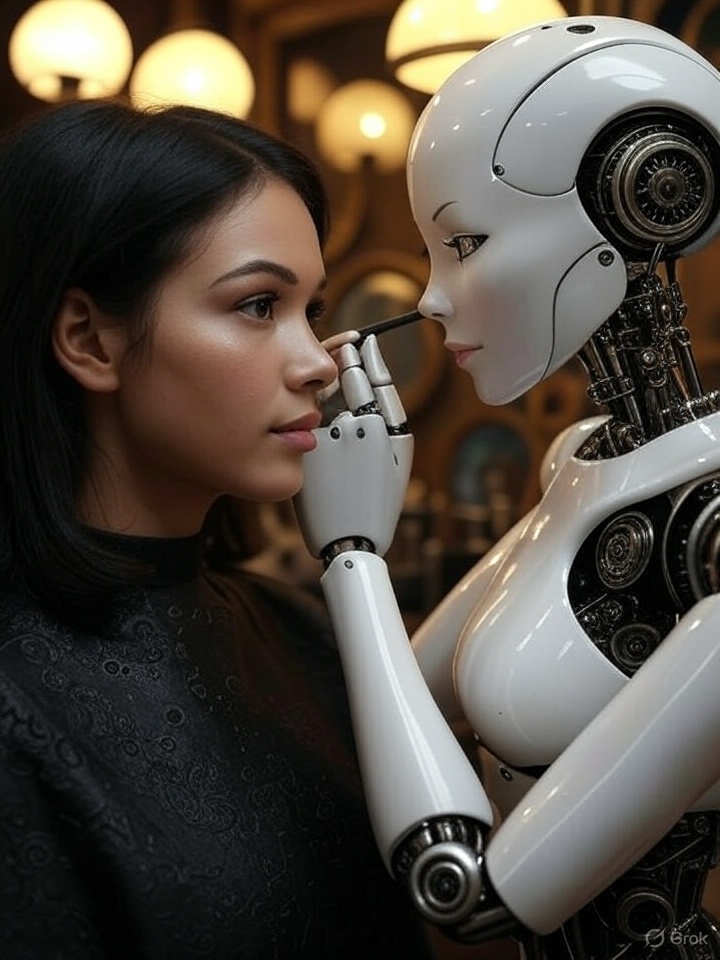

In an era where selfies dominate social media and beauty standards are more pervasive than ever, a surprising trend has emerged: people are consulting ChatGPT for beauty advice instead of professionals.

Armed with a selfie and a question, users are flocking to AI chatbots for tips on enhancing their appearance, from skincare routines to surgical recommendations.

Praised for its blunt “honesty,” ChatGPT might analyze a photo and suggest “sharpening those cheekbones” or “considering blepharoplasty.” But behind this digital mirror lies a troubling reality — one that reflects not expertise, but the internet’s toxic beauty culture.

The allure is undeniable. ChatGPT offers instant, seemingly objective feedback at no cost. Unlike a cosmetologist, it doesn’t require appointments or hefty fees. Upload a photo, ask for advice, and within seconds, you get a tailored response.

Social media platforms like X are buzzing with users sharing their AI-generated beauty tips, with some claiming the bot’s suggestions — ranging from makeup hacks to cosmetic procedures — have saved them thousands of dollars. Others appreciate the “no-filter” approach, as the AI doesn’t sugarcoat its assessments. But this convenience comes with a hidden cost.

ChatGPT isn’t a trained dermatologist or aesthetician. It’s a large language model, trained on vast swaths of internet data—data that includes toxic beauty forums, airbrushed advertisements, and the relentless “buy-to-transform” messaging of the beauty industry. When you ask it for advice, it doesn’t draw from medical expertise or personalized care.

Instead, it regurgitates patterns from the content it’s been fed, often amplifying unrealistic standards and subtle pressures to conform. That “honest” suggestion to “fix” your jawline or “brighten” your skin? It’s less a diagnosis and more a reflection of the internet’s obsession with perfection.

The consequences are more than skin-deep. Beauty is deeply personal, tied to self-esteem and identity. When an AI delivers a laundry list of “improvements,” it risks reinforcing insecurities rather than alleviating them. A cosmetologist might approach a client with empathy, considering their unique features and needs.

ChatGPT, on the other hand, lacks nuance — it can’t read emotional cues or understand the psychological impact of its words.

Worse, its advice can lead users down dangerous paths. Anecdotes on X describe users taking AI suggestions to extremes, from trying unverified skincare concoctions to researching invasive procedures without professional guidance.

One user shared that ChatGPT had recommended a chemical peel based on a selfie; a dermatologist later told them the peel could have caused severe skin damage.

This trend also highlights a broader issue: the monetization of insecurity. The beauty industry has long profited by making people feel inadequate, and AI is becoming its latest tool.

ChatGPT may not directly sell products, but its suggestions often align with commercialized beauty ideals — think anti-aging creams, contouring palettes, or cosmetic surgeries. By presenting these as “solutions,” it inadvertently fuels a cycle where users feel compelled to spend to “fix” themselves.

And while the chatbot itself is free, the procedures and products it recommends are not. Users on X have reported spending hundreds, even thousands, of dollars chasing AI-driven beauty fixes, only to end up dissatisfied or, worse, harmed.

The irony is stark: people turn to AI for empowerment, hoping to bypass gatekeepers and take control of their beauty journey. Yet they're often left navigating a minefield of misinformation and unattainable standards. Experts warn that relying on AI for beauty advice can lead to misguided decisions, from incorrect skincare regimens to unnecessary procedures.

Dr. Sarah Thompson, a dermatologist quoted in a recent WebMD article, emphasized that “AI lacks the clinical judgment to assess skin conditions accurately. A selfie can’t replace a professional evaluation.” Posts on X echo this sentiment, with some users lamenting how AI’s generic advice led to wasted money or exacerbated skin issues.

So, what’s the alternative? Cosmetologists and dermatologists remain the gold standard for personalized, safe beauty advice. They offer expertise grounded in science, not algorithms trained on Reddit threads and Instagram filters.

For those drawn to AI's accessibility, experts suggest using it as a starting point (perhaps for general skincare tips) but always cross-referencing its advice with professionals. Regulators are also facing pressure, with discussions on X highlighting calls for stricter guidelines on AI-generated medical and beauty advice to protect consumers.

The rise of ChatGPT as a beauty consultant underscores a deeper cultural issue: our obsession with quick fixes and external validation. It’s tempting to see AI as a neutral arbiter, free from human bias. But it’s not. It’s a mirror of the internet’s flaws—amplifying the same pressures that make us question our worth in the first place.

Instead of curing insecurity, it risks monetizing it, one selfie at a time. The next time you’re tempted to ask a chatbot how to “fix” your face, consider this: true beauty advice doesn’t come from an algorithm.

It comes from expertise, empathy, and a mirror that reflects more than just your flaws.