The robotics industry is on the brink of a revolution, and at its heart lies RoboBrain 2.0, an open-source AI model poised to redefine how robots perceive, plan, and interact with the physical world.

Developed as a cornerstone for the next generation of humanoid robots, this advanced model is already generating buzz for its versatility and accessibility, offering a foundation that could transform robotics as we know it.

A Multifaceted AI for Real-World Robotics

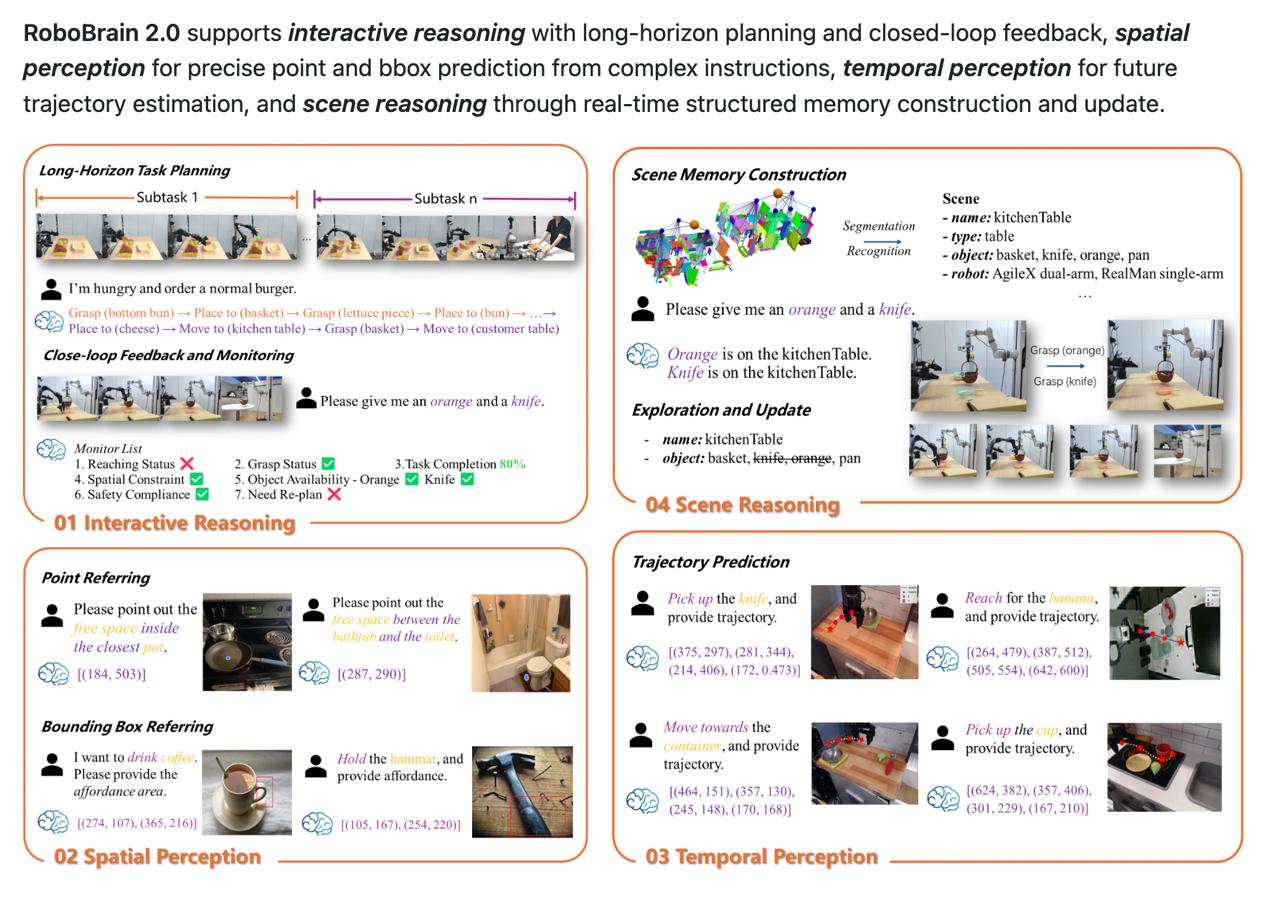

RoboBrain 2.0 is designed to tackle a wide array of tasks, from environmental perception to seamless robot control. Its capabilities include:

- Real-Time Planning, Perception, and Action: The model breaks down complex tasks, reasons about spatial relationships, and produces plans that robots can carry out in dynamic environments.

- Ease of Integration: Released in a lightweight 7B-parameter version (alongside a larger 32B version), it is optimized for integration into real-world projects and robotic systems, making it a practical choice for developers and engineers.

- Fully Open-Source: Available for free under an open-source license, it invites global collaboration and innovation.

This model is being hailed as a potential game-changer, with some observers predicting it could lay the groundwork for advanced humanoid robots to become a common sight by 2027.

Cutting-Edge Architecture

RoboBrain 2.0’s architecture is a testament to its sophisticated design:

- Multi-Modal Input Processing: It handles images, long videos, and high-resolution visual data, making it adept at interpreting complex scenes.

- Text Understanding: The model processes intricate textual instructions, converting them into actionable insights.

- Input Pipeline:

  - Visual Data: Passed through a Vision Encoder and an MLP Projector, which transform raw visuals into token embeddings.

  - Text Data: Tokenized and merged with the visual tokens into a single, unified token stream for consistent processing.

- LLM Decoder: This core component reasons over the combined stream, constructs plans, predicts coordinates, and identifies spatial relationships, producing outputs a robot can act on.

This blend of vision and language processing allows RoboBrain 2.0 to bridge the gap between abstract instructions and concrete actions, a critical step toward bringing AI reliably into the physical world.
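The input pipeline described above follows a common vision-language-model pattern. Image patches become embeddings, and those embeddings are spliced into the text sequence so that one decoder sees a single stream.

Below is a toy Python sketch of that idea, built under stated assumptions. It is not RoboBrain 2.0's code. Every name (`encode_image`, `build_sequence`, `HIDDEN`, the `<image>` placeholder token) is hypothetical. The "vision encoder" is a single linear map standing in for a real vision transformer, the weights are random, and the decoder itself is omitted.

```python
# Toy sketch of a vision-language input pipeline (hypothetical; not RoboBrain 2.0's code).
# Image patches -> "vision encoder" -> MLP projector -> visual tokens, merged with text tokens.
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]

HIDDEN = 8       # hypothetical decoder embedding width
PATCH = 4        # hypothetical patch size (pixels per side)
VISION_DIM = 6   # hypothetical vision-encoder output width

rng = random.Random(0)  # fixed seed so the random toy weights are reproducible


def rand_matrix(rows: int, cols: int) -> Matrix:
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def gelu(x: float) -> float:
    # tanh approximation of GELU, a common activation inside MLP projectors
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2 / math.pi) * (x + 0.044715 * x ** 3)))


# Stand-in "vision encoder": one linear map over a flattened patch.
W_enc = rand_matrix(VISION_DIM, PATCH * PATCH)
# Two-layer MLP projector mapping vision features into the decoder's embedding space.
W_proj1 = rand_matrix(HIDDEN, VISION_DIM)
W_proj2 = rand_matrix(HIDDEN, HIDDEN)
# Toy vocabulary and text embedding table (one row per token id).
VOCAB = {"<image>": 0, "pick": 1, "up": 2, "the": 3, "red": 4, "cup": 5}
EMBED = rand_matrix(len(VOCAB), HIDDEN)


def image_to_patches(image: Matrix) -> List[Vector]:
    """Split a grayscale H x W image into flattened PATCH x PATCH patches (edges dropped)."""
    h, w = len(image), len(image[0])
    patches = []
    for r in range(0, h - h % PATCH, PATCH):
        for c in range(0, w - w % PATCH, PATCH):
            patches.append([image[r + i][c + j] for i in range(PATCH) for j in range(PATCH)])
    return patches


def encode_image(image: Matrix) -> List[Vector]:
    """Encoder + MLP projector: one HIDDEN-wide visual token per patch."""
    visual_tokens = []
    for patch in image_to_patches(image):
        feat = matvec(W_enc, patch)
        hidden = [gelu(x) for x in matvec(W_proj1, feat)]
        visual_tokens.append(matvec(W_proj2, hidden))
    return visual_tokens


def build_sequence(image: Matrix, prompt: str) -> List[Vector]:
    """Replace the <image> placeholder with visual tokens, yielding one unified stream."""
    sequence: List[Vector] = []
    for word in prompt.split():
        if word == "<image>":
            sequence.extend(encode_image(image))
        else:
            sequence.append(EMBED[VOCAB[word]])
    return sequence


if __name__ == "__main__":
    image = [[(r * 8 + c) / 64 for c in range(8)] for r in range(8)]  # 8x8 dummy image
    seq = build_sequence(image, "<image> pick up the red cup")
    print(len(seq), len(seq[0]))  # prints: 9 8
```

Running it prints `9 8`. The 8×8 dummy image yields four 4×4 patches, and the prompt adds five text tokens, each an 8-dimensional embedding. Because visual and text tokens share one width, a single decoder can attend over both at once, which is the property the architecture relies on.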

A Glimpse into the Future

With its robust capabilities and open-source nature, RoboBrain 2.0 is fueling optimism about the rapid advancement of robotics. To some observers, mass production of sophisticated humanoid robots by 2027 now looks increasingly plausible, since the model is built for scalability and real-world deployment. As AI steps out of the digital world and into the physical, RoboBrain 2.0 is leading the charge with a bold, collaborative approach.

Getting Started

Ready to explore this groundbreaking technology?

You can dive in with these simple steps:

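```bash
# Clone the repository; git creates a directory named RoboBrain2.0
git clone https://github.com/FlagOpen/RoboBrain2.0.git
cd RoboBrain2.0

# Build the conda environment and install dependencies
conda create -n robobrain2 python=3.10
conda activate robobrain2
pip install -r requirements.txt
```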

RoboBrain 2.0 isn’t just an AI model; it’s a movement toward a future where robots are smarter, more accessible, and integrated into our daily lives. As the global community builds on this open-source foundation, the possibilities for innovation are vast.